the mail, the indices, and the mailboxes.db. Each can be on

separate storage with optimal price vs performance characteristics

for the proposed load.

We have 48000 mailboxes on behalf of 2000 users, with typically 1700

imapd processes during the day and ~1000 overnight. There's about

3.4TB of mail stored.

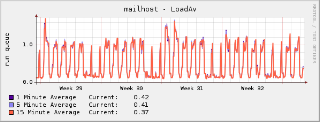

We run Cyrus 2.3.9 (2.3.12p1 is due the next time the machine has a

maintenance window) in a Solaris zone (container) on a Sun T2000 with

16GB of RAM. The load on the machine is typically low:

We have mailboxes.db and the metapartitions on ZFS, along with the

zone itself. The pool is drawn from space on four 10000rpm SAS

drives internal to the machine:

    NAME          STATE     READ WRITE CKSUM
    pool1         ONLINE       0     0     0
      mirror      ONLINE       0     0     0
        c0t0d0s4  ONLINE       0     0     0
        c0t1d0s4  ONLINE       0     0     0
      mirror      ONLINE       0     0     0
        c0t2d0s4  ONLINE       0     0     0
        c0t3d0s4  ONLINE       0     0     0

They're fairly busy, mostly with writes (1 second resolution):

                   capacity     operations    bandwidth
    pool         used  avail   read  write   read  write
    ----------  -----  -----  -----  -----  -----  -----
    pool1       39.4G  38.6G     10     77   472K   509K
    pool1       39.4G  38.6G      0     65      0  1.09M
    pool1       39.4G  38.6G      2    521  1.98K  3.03M
    pool1       39.4G  38.6G      2    170   255K   951K
    pool1       39.4G  38.6G      0     37      0   249K
    pool1       39.4G  38.6G      0     35      0   310K
    pool1       39.4G  38.6G      2     22  16.3K   430K
    pool1       39.4G  38.6G      9    660   284K  4.65M
    pool1       39.4G  38.6G      0    118    506   296K
    pool1       39.4G  38.6G      0      2      0  27.7K
    pool1       39.4G  38.6G      4     39  96.0K   990K
    pool1       39.4G  38.6G      1     89   1013   997K
    pool1       39.4G  38.6G      3    775  56.4K  5.06M
    pool1       39.4G  38.6G      0    160      0   531K
    pool1       39.4G  38.6G      0     20      0   118K
    pool1       39.4G  38.6G      0     11      0  83.2K
    pool1       39.4G  38.6G      0     41      0   595K
    pool1       39.4G  38.6G      0    624   1013  3.46M

This indicates that most client-side reads are being serviced from

cache (as you'd expect with 16GB of RAM). We have 21.4GB of metadata

on disk, which we run with ZFS compression to reduce IO bandwidth (at

the expense of CPU utilisation, of which we have plenty spare). The

ZFS compression ratio is about 1.7x: cyrus.cache, in particular, is

very compressible.
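
To put a number on "mostly with writes", here is a minimal Python sketch that totals the read and write operation columns from the eighteen samples shown above. The function name `summarise` and the burst threshold of 500 writes per second are illustrative choices, not anything from the original setup. If the first row is a since-boot average, as `zpool iostat` normally prints first, it could reasonably be dropped from the list.

```python
# (read_ops, write_ops) per one-second sample, copied from the
# zpool iostat output quoted above.
samples = [
    (10, 77), (0, 65), (2, 521), (2, 170), (0, 37), (0, 35),
    (2, 22), (9, 660), (0, 118), (0, 2), (4, 39), (1, 89),
    (3, 775), (0, 160), (0, 20), (0, 11), (0, 41), (0, 624),
]

# Hypothetical cut-off used only to pick out the large write bursts.
BURST_THRESHOLD = 500


def summarise(rows, burst_threshold=BURST_THRESHOLD):
    """Total the operations and locate the write bursts."""
    reads = sum(r for r, _ in rows)
    writes = sum(w for _, w in rows)
    total = reads + writes
    return {
        "reads": reads,
        "writes": writes,
        "write_fraction": writes / total if total else 0.0,
        "peak_writes": max(w for _, w in rows),
        # Zero-based positions of samples whose writes exceed the threshold.
        "bursts": [i for i, (_, w) in enumerate(rows) if w > burst_threshold],
    }


summary = summarise(samples)
print(f"reads={summary['reads']} writes={summary['writes']} "
      f"write fraction={summary['write_fraction']:.1%}")
print("burst samples:", summary["bursts"])
```

On these samples the totals are 33 reads against 3466 writes, so about 99% of the operations are writes. The bursts fall on every fifth sample, which looks like periodic batching of writes; that reading is an inference from the numbers rather than something measured here.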

The message store is out on the `archive' QoS of a Pillar Axiom AX500,

so the data's living in the slow regions of SATA drives, that would

otherwise go to waste. We see ~20ms for all reads, because they are

essentially random and have to come up from the spindle of the NFS

server. Writes go to mirrored RAM on the server and take ~2ms.

Because most clients cache content these days, the load on those

partitions is much lower. Ten seconds of sar output yields:

    10:47:34   device   %busy   avque   r+w/s  blks/s  avwait  avserv
    [...]
               nfs73       16     0.2      10      84     0.0    15.6
               nfs86       43     0.4      24     205     0.0    18.5
               nfs87        1     0.0       3     152     0.0     7.2
               nfs96        2     0.0       6     369     0.0     6.9
               nfs101       0     0.0       1      35     0.0     5.0
               nfs102       0     0.0       0       0     0.0     0.0
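
As a rough cross-check of the latency figures, the sketch below computes the operation-weighted mean of `avserv` over the six NFS devices shown. It covers only the rows quoted (whatever was elided at `[...]` is not included), and the helper name `weighted_service_time` is made up for illustration.

```python
# (device, r+w/s, blks/s, avserv in ms) for the sar rows quoted above.
# Rows elided in the original output are not represented here.
rows = [
    ("nfs73", 10, 84, 15.6),
    ("nfs86", 24, 205, 18.5),
    ("nfs87", 3, 152, 7.2),
    ("nfs96", 6, 369, 6.9),
    ("nfs101", 1, 35, 5.0),
    ("nfs102", 0, 0, 0.0),
]


def weighted_service_time(devices):
    """Mean avserv weighted by each device's operations per second."""
    total_ops = sum(ops for _, ops, _, _ in devices)
    if total_ops == 0:
        return 0.0
    return sum(ops * serv for _, ops, _, serv in devices) / total_ops


total_ops = sum(ops for _, ops, _, _ in rows)
mean_serv = weighted_service_time(rows)
print(f"total r+w/s across shown devices: {total_ops}")
print(f"weighted mean service time: {mean_serv:.1f} ms")
```

For the rows shown this gives 44 operations per second in total and a weighted mean service time of about 15.2 ms, which sits between the ~2ms writes and ~20ms reads quoted above.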

ian
