Hi Reed,

Thank you so much for the input and support. We tried the setting you suggested, but could not see any impact on the current system.

We ran "ceph fs set cephfs allow_standby_replay true", but it made no difference to the failover time.

Furthermore, we have tested some more scenarios on our test setup:

Scenario 1:

- Here we looked at the logs on the node that the MDS fails over to, i.e. if we reboot cephnode2, the new active MDS will be on cephnode1. We checked the logs on cephnode1 in two cases:

- 1. Normal reboot of cephnode2 while the I/O operation is in progress:

- We see that logging on cephnode1 starts immediately, then waits for some time (around 15 seconds, apparently beacon-related) plus an additional 6-7 seconds, during which the MDS on cephnode1 is activated and I/O resumes. See the logs:

- 2021-04-29T15:49:42.480+0530 7fa747690700 1 mds.cephnode1 Updating MDS map to version 505 from mon.2

2021-04-29T15:49:42.482+0530 7fa747690700 1 mds.0.505 handle_mds_map i am now mds.0.505

2021-04-29T15:49:42.482+0530 7fa747690700 1 mds.0.505 handle_mds_map state change up:boot --> up:replay

2021-04-29T15:49:42.482+0530 7fa747690700 1 mds.0.505 replay_start

2021-04-29T15:49:42.482+0530 7fa747690700 1 mds.0.505 recovery set is

2021-04-29T15:49:42.482+0530 7fa747690700 1 mds.0.505 waiting for osdmap 486 (which blacklists prior instance)

2021-04-29T15:49:55.686+0530 7fa74568c700 1 mds.beacon.cephnode1 MDS connection to Monitors appears to be laggy; 15.9769s since last acked beacon

2021-04-29T15:49:55.686+0530 7fa74568c700 1 mds.0.505 skipping upkeep work because connection to Monitors appears laggy

2021-04-29T15:49:57.533+0530 7fa749e95700 0 mds.beacon.cephnode1 MDS is no longer laggy

2021-04-29T15:49:59.599+0530 7fa740e83700 0 mds.0.cache creating system inode with ino:0x100

2021-04-29T15:49:59.599+0530 7fa740e83700 0 mds.0.cache creating system inode with ino:0x1

2021-04-29T15:50:00.456+0530 7fa73f680700 1 mds.0.505 Finished replaying journal

2021-04-29T15:50:00.456+0530 7fa73f680700 1 mds.0.505 making mds journal writeable

2021-04-29T15:50:00.959+0530 7fa747690700 1 mds.cephnode1 Updating MDS map to version 506 from mon.2

2021-04-29T15:50:00.959+0530 7fa747690700 1 mds.0.505 handle_mds_map i am now mds.0.505

2021-04-29T15:50:00.959+0530 7fa747690700 1 mds.0.505 handle_mds_map state change up:replay --> up:reconnect

2021-04-29T15:50:00.959+0530 7fa747690700 1 mds.0.505 reconnect_start

2021-04-29T15:50:00.959+0530 7fa747690700 1 mds.0.505 reopen_log

2021-04-29T15:50:00.959+0530 7fa747690700 1 mds.0.server reconnect_clients -- 2 sessions

2021-04-29T15:50:00.964+0530 7fa747690700 0 log_channel(cluster) log [DBG] : reconnect by client.6892 v1:10.0.4.96:0/1646469259 after 0.00499997

2021-04-29T15:50:00.972+0530 7fa747690700 0 log_channel(cluster) log [DBG] : reconnect by client.6990 v1:10.0.4.115:0/2776266880 after 0.0129999

2021-04-29T15:50:00.972+0530 7fa747690700 1 mds.0.505 reconnect_done

2021-04-29T15:50:02.005+0530 7fa747690700 1 mds.cephnode1 Updating MDS map to version 507 from mon.2

2021-04-29T15:50:02.005+0530 7fa747690700 1 mds.0.505 handle_mds_map i am now mds.0.505

2021-04-29T15:50:02.005+0530 7fa747690700 1 mds.0.505 handle_mds_map state change up:reconnect --> up:rejoin

2021-04-29T15:50:02.005+0530 7fa747690700 1 mds.0.505 rejoin_start

2021-04-29T15:50:02.008+0530 7fa747690700 1 mds.0.505 rejoin_joint_start

2021-04-29T15:50:02.040+0530 7fa740e83700 1 mds.0.505 rejoin_done

2021-04-29T15:50:03.050+0530 7fa747690700 1 mds.cephnode1 Updating MDS map to version 508 from mon.2

2021-04-29T15:50:03.050+0530 7fa747690700 1 mds.0.505 handle_mds_map i am now mds.0.505

2021-04-29T15:50:03.050+0530 7fa747690700 1 mds.0.505 handle_mds_map state change up:rejoin --> up:clientreplay

2021-04-29T15:50:03.050+0530 7fa747690700 1 mds.0.505 recovery_done -- successful recovery!

2021-04-29T15:50:03.050+0530 7fa747690700 1 mds.0.505 clientreplay_start

2021-04-29T15:50:03.094+0530 7fa740e83700 1 mds.0.505 clientreplay_done

2021-04-29T15:50:04.081+0530 7fa747690700 1 mds.cephnode1 Updating MDS map to version 509 from mon.2

2021-04-29T15:50:04.081+0530 7fa747690700 1 mds.0.505 handle_mds_map i am now mds.0.505

2021-04-29T15:50:04.081+0530 7fa747690700 1 mds.0.505 handle_mds_map state change up:clientreplay --> up:active

2021-04-29T15:50:04.081+0530 7fa747690700 1 mds.0.505 active_start

2021-04-29T15:50:04.085+0530 7fa747690700 1 mds.0.505 cluster recovered.

- 2. Hard reset/power-off of cephnode2 while the I/O operation is in progress:

- In this case the logs on cephnode1 (on which the new MDS is activated) only start 15+ seconds after the power-off.

- Time of power-off: 2021-04-29-16-17-37

- Time at which the logs started to appear on cephnode1 (see logs below), i.e. logging started nearly 15 seconds after the hardware reset:

- 2021-04-29T16:17:51.983+0530 7f5ba3a38700 1 mds.cephnode1 Updating MDS map to version 518 from mon.0

2021-04-29T16:17:51.984+0530 7f5ba3a38700 1 mds.0.518 handle_mds_map i am now mds.0.518

2021-04-29T16:17:51.984+0530 7f5ba3a38700 1 mds.0.518 handle_mds_map state change up:boot --> up:replay

2021-04-29T16:17:51.984+0530 7f5ba3a38700 1 mds.0.518 replay_start

2021-04-29T16:17:51.984+0530 7f5ba3a38700 1 mds.0.518 recovery set is

2021-04-29T16:17:51.984+0530 7f5ba3a38700 1 mds.0.518 waiting for osdmap 504 (which blacklists prior instance)

2021-04-29T16:17:54.044+0530 7f5b9ca2a700 0 mds.0.cache creating system inode with ino:0x100

2021-04-29T16:17:54.045+0530 7f5b9ca2a700 0 mds.0.cache creating system inode with ino:0x1

2021-04-29T16:17:55.025+0530 7f5b9ba28700 1 mds.0.518 Finished replaying journal

2021-04-29T16:17:55.025+0530 7f5b9ba28700 1 mds.0.518 making mds journal writeable

2021-04-29T16:17:56.060+0530 7f5ba3a38700 1 mds.cephnode1 Updating MDS map to version 519 from mon.0

2021-04-29T16:17:56.060+0530 7f5ba3a38700 1 mds.0.518 handle_mds_map i am now mds.0.518

2021-04-29T16:17:56.060+0530 7f5ba3a38700 1 mds.0.518 handle_mds_map state change up:replay --> up:reconnect

2021-04-29T16:17:56.060+0530 7f5ba3a38700 1 mds.0.518 reconnect_start

2021-04-29T16:17:56.060+0530 7f5ba3a38700 1 mds.0.518 reopen_log

2021-04-29T16:17:56.060+0530 7f5ba3a38700 1 mds.0.server reconnect_clients -- 2 sessions

2021-04-29T16:17:56.068+0530 7f5ba3a38700 0 log_channel(cluster) log [DBG] : reconnect by client.6990 v1:10.0.4.115:0/2776266880 after 0.00799994

2021-04-29T16:17:56.069+0530 7f5ba3a38700 0 log_channel(cluster) log [DBG] : reconnect by client.6892 v1:10.0.4.96:0/1646469259 after 0.00899994

2021-04-29T16:17:56.069+0530 7f5ba3a38700 1 mds.0.518 reconnect_done

2021-04-29T16:17:57.099+0530 7f5ba3a38700 1 mds.cephnode1 Updating MDS map to version 520 from mon.0

2021-04-29T16:17:57.099+0530 7f5ba3a38700 1 mds.0.518 handle_mds_map i am now mds.0.518

2021-04-29T16:17:57.099+0530 7f5ba3a38700 1 mds.0.518 handle_mds_map state change up:reconnect --> up:rejoin

2021-04-29T16:17:57.099+0530 7f5ba3a38700 1 mds.0.518 rejoin_start

2021-04-29T16:17:57.103+0530 7f5ba3a38700 1 mds.0.518 rejoin_joint_start

2021-04-29T16:17:57.472+0530 7f5b9d22b700 1 mds.0.518 rejoin_done

2021-04-29T16:17:58.138+0530 7f5ba3a38700 1 mds.cephnode1 Updating MDS map to version 521 from mon.0

2021-04-29T16:17:58.138+0530 7f5ba3a38700 1 mds.0.518 handle_mds_map i am now mds.0.518

2021-04-29T16:17:58.138+0530 7f5ba3a38700 1 mds.0.518 handle_mds_map state change up:rejoin --> up:clientreplay

2021-04-29T16:17:58.138+0530 7f5ba3a38700 1 mds.0.518 recovery_done -- successful recovery!

2021-04-29T16:17:58.138+0530 7f5ba3a38700 1 mds.0.518 clientreplay_start

2021-04-29T16:17:58.157+0530 7f5b9d22b700 1 mds.0.518 clientreplay_done

2021-04-29T16:17:59.178+0530 7f5ba3a38700 1 mds.cephnode1 Updating MDS map to version 522 from mon.0

2021-04-29T16:17:59.178+0530 7f5ba3a38700 1 mds.0.518 handle_mds_map i am now mds.0.518

2021-04-29T16:17:59.178+0530 7f5ba3a38700 1 mds.0.518 handle_mds_map state change up:clientreplay --> up:active

2021-04-29T16:17:59.178+0530 7f5ba3a38700 1 mds.0.518 active_start

2021-04-29T16:17:59.181+0530 7f5ba3a38700 1 mds.0.518 cluster recovered.
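To compare the two logs above state by state, a rough sketch along the following lines could be used. It is only an illustration and not part of our test tooling: the helper name `state_durations` is made up, and it assumes the "state change" lines keep the exact format shown in the logs above.

```python
import re
from datetime import datetime

# Matches MDS log lines such as:
# 2021-04-29T15:49:42.482+0530 ... handle_mds_map state change up:boot --> up:replay
STATE_CHANGE = re.compile(
    r"(?P<ts>\d{4}-\d{2}-\d{2}T\S+) .*state change (?P<old>up:\w+) --> (?P<new>up:\w+)"
)


def parse_ts(ts):
    # Timestamps in the logs look like 2021-04-29T15:49:42.482+0530
    return datetime.strptime(ts, "%Y-%m-%dT%H:%M:%S.%f%z")


def state_durations(lines):
    """Return [(state, seconds)] for every state that was entered and then left."""
    changes = []
    for line in lines:
        m = STATE_CHANGE.search(line)
        if m:
            changes.append((parse_ts(m.group("ts")), m.group("new")))
    # Pair each state change with the next one to get the time spent in between.
    return [
        (state, round((next_ts - ts).total_seconds(), 3))
        for (ts, state), (next_ts, _) in zip(changes, changes[1:])
    ]


# State-change lines copied from the reboot log above.
reboot_log = """\
2021-04-29T15:49:42.482+0530 7fa747690700 1 mds.0.505 handle_mds_map state change up:boot --> up:replay
2021-04-29T15:50:00.959+0530 7fa747690700 1 mds.0.505 handle_mds_map state change up:replay --> up:reconnect
2021-04-29T15:50:02.005+0530 7fa747690700 1 mds.0.505 handle_mds_map state change up:reconnect --> up:rejoin
2021-04-29T15:50:03.050+0530 7fa747690700 1 mds.0.505 handle_mds_map state change up:rejoin --> up:clientreplay
2021-04-29T15:50:04.081+0530 7fa747690700 1 mds.0.505 handle_mds_map state change up:clientreplay --> up:active
""".splitlines()

# State-change lines copied from the power-off log above.
poweroff_log = """\
2021-04-29T16:17:51.984+0530 7f5ba3a38700 1 mds.0.518 handle_mds_map state change up:boot --> up:replay
2021-04-29T16:17:56.060+0530 7f5ba3a38700 1 mds.0.518 handle_mds_map state change up:replay --> up:reconnect
2021-04-29T16:17:57.099+0530 7f5ba3a38700 1 mds.0.518 handle_mds_map state change up:reconnect --> up:rejoin
2021-04-29T16:17:58.138+0530 7f5ba3a38700 1 mds.0.518 handle_mds_map state change up:rejoin --> up:clientreplay
2021-04-29T16:17:59.178+0530 7f5ba3a38700 1 mds.0.518 handle_mds_map state change up:clientreplay --> up:active
""".splitlines()

if __name__ == "__main__":
    for name, log in (("reboot", reboot_log), ("power-off", poweroff_log)):
        print(name, state_durations(log))
```

On these two logs, the sketch reports about 18.5 seconds in up:replay for the reboot case against about 4.1 seconds for the power-off case, while up:reconnect, up:rejoin and up:clientreplay each take roughly 1 second in both cases.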

In both of the test cases above we saw an extra delay of around 15 seconds plus a further 6-10 seconds (21-25 seconds in total for failover in case of power-off/reboot).
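To make the arithmetic behind these figures explicit for the power-off case, here is a small sketch using only the timestamps quoted above. It assumes the power-off time was taken on the same clock and in the same time zone as the MDS log, and note that the power-off time has only one-second resolution, so the result is approximate.

```python
from datetime import datetime

LOG_FMT = "%Y-%m-%dT%H:%M:%S.%f"

# Time of power-off as recorded during the test (second resolution only).
power_off = datetime.strptime("2021-04-29-16-17-37", "%Y-%m-%d-%H-%M-%S")
# First log line on cephnode1 ("Updating MDS map to version 518").
first_log = datetime.strptime("2021-04-29T16:17:51.983", LOG_FMT)
# State change up:clientreplay --> up:active on cephnode1.
active = datetime.strptime("2021-04-29T16:17:59.178", LOG_FMT)

detection = (first_log - power_off).total_seconds()  # until the standby is told to take over
recovery = (active - first_log).total_seconds()      # replay through to active
total = (active - power_off).total_seconds()

if __name__ == "__main__":
    print(f"detection: {detection:.1f}s, recovery: {recovery:.1f}s, total: {total:.1f}s")
```

With these inputs the split is roughly 15.0 seconds before cephnode1 logs anything, 7.2 seconds from the first log line to up:active, and 22.2 seconds in total.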

Query: Is there any specific config that could be tweaked/tried to reduce the time it takes for the failure of the active MDS to be detected and for the standby MDS to be activated?

Scenario 2:

- Only stop the MDS daemon service on the active node

- In this scenario, where we only stopped the systemctl service for the MDS daemon on the active node, we got a very good reading of around 5-7 seconds for failover.

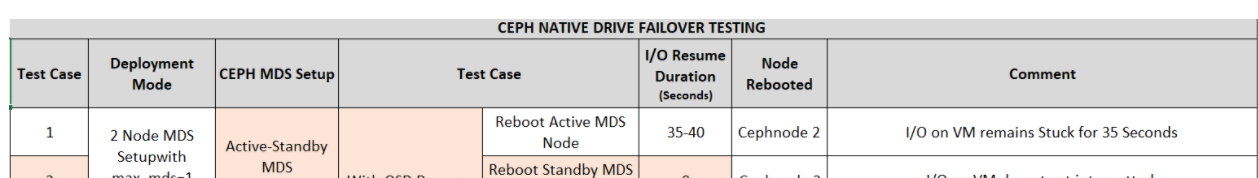

| Deployment Mode | CEPH MDS Setup | Test Case | I/O Resume Duration (Seconds) | Node affected |
|---|---|---|---|---|
| 2 Node MDS Setup with max_mds=1 | Active-Standby MDS | Active Node MDS Daemon stop | 5-7 | cephnode 1 |

Please suggest/advise what we can configure to achieve a minimal failover duration in the first two cases (reboot and power-off).

Best Regards,

Lokendra

On Thu, Apr 29, 2021 at 1:47 AM Reed Dier <reed.dier@xxxxxxxxxxx> wrote:

I don't have anything of merit to add to this, but it would be an interesting addition to your testing to see if active+standby-replay makes any difference with test-case1.

I don't think it would be applicable to any of the other use-cases, as a standby-replay MDS is bound to a single rank, meaning it's bound to a single active MDS, and can't function as a standby for active:active.

Good luck and look forward to hearing feedback/more results.

Reed

On Apr 27, 2021, at 8:40 AM, Lokendra Rathour <lokendrarathour@xxxxxxxxx> wrote:

Hi Team,

We have set up a two-node Ceph cluster using the native CephFS driver, with details as follows:

- 3 Node / 2 Node MDS Cluster

- 3 Node Monitor Quorum

- 2 Node OSD

- 2 Nodes for Manager

Cephnode3 has only Mon and MDS (only for test cases 4-7); the other two nodes, i.e. cephnode1 and cephnode2, have mgr, mds, mon and rgw.

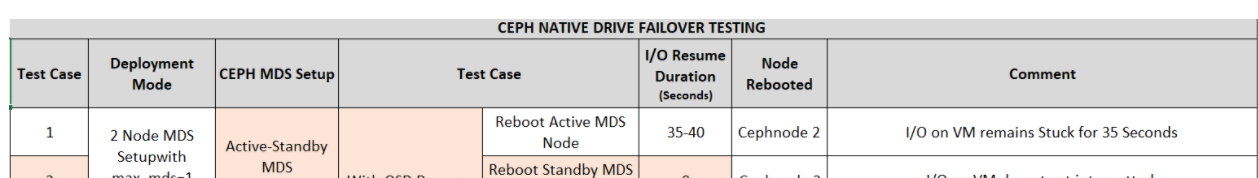

We have tested the following failover scenarios for the native CephFS driver by mounting any one sub-volume on a VM or client with continuous I/O operations (a directory created every 1 second):

<image.png>

Regarding the table above, we have a few queries:

- Test case 2 and test case 7 are similar, differing only in the number of Ceph MDS daemons, yet the times for the two test cases differ. It should be zero, but the time comes out as 17 seconds for test case 7.

- Is there any configurable parameter/any configuration which we need to set in the Ceph cluster to get the failover time reduced to a few seconds?

In the current default deployment we are getting something around 35-40 seconds.
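For reference, the I/O resume duration in tests like these could be measured on the client with a probe along the following lines. This is only a minimal sketch and not the exact script used for the table: the function name `longest_stall` and the `probe-` directory names are made up, and it assumes that directory creation on the CephFS mount simply blocks while no MDS is active and returns once failover completes.

```python
import os
import tempfile
import time


def longest_stall(base_dir, count, interval=1.0):
    """Create `count` directories, one per `interval` seconds, and return the
    longest time in seconds between two successive successful creations."""
    longest = 0.0
    last_ok = time.monotonic()
    for i in range(count):
        # Assumed to block while the MDS is unavailable.
        os.mkdir(os.path.join(base_dir, f"probe-{i:06d}"))
        now = time.monotonic()
        longest = max(longest, now - last_ok)
        last_ok = now
        time.sleep(interval)
    return longest


if __name__ == "__main__":
    # A temporary directory stands in for the CephFS mount point here.
    with tempfile.TemporaryDirectory() as mount_point:
        print(f"longest stall: {longest_stall(mount_point, 5, 0.1):.2f}s")
```

Without any failover the longest gap stays close to the chosen interval, so the failover time is approximately the reported value minus the interval.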

Best Regards,

--

ceph-users mailing list -- ceph-users@xxxxxxx

To unsubscribe send an email to ceph-users-leave@xxxxxxx
