Hi Team,

We have set up a two-node Ceph cluster using the native CephFS driver, with the following details:

- 3 Node / 2 Node MDS Cluster

- 3 Node Monitor Quorum

- 2 Node OSD

- 2 Nodes for Manager

cephnode3 runs only mon and MDS (and only for test cases 4-7). The other two nodes, cephnode1 and cephnode2, run mgr, mds, mon and rgw.

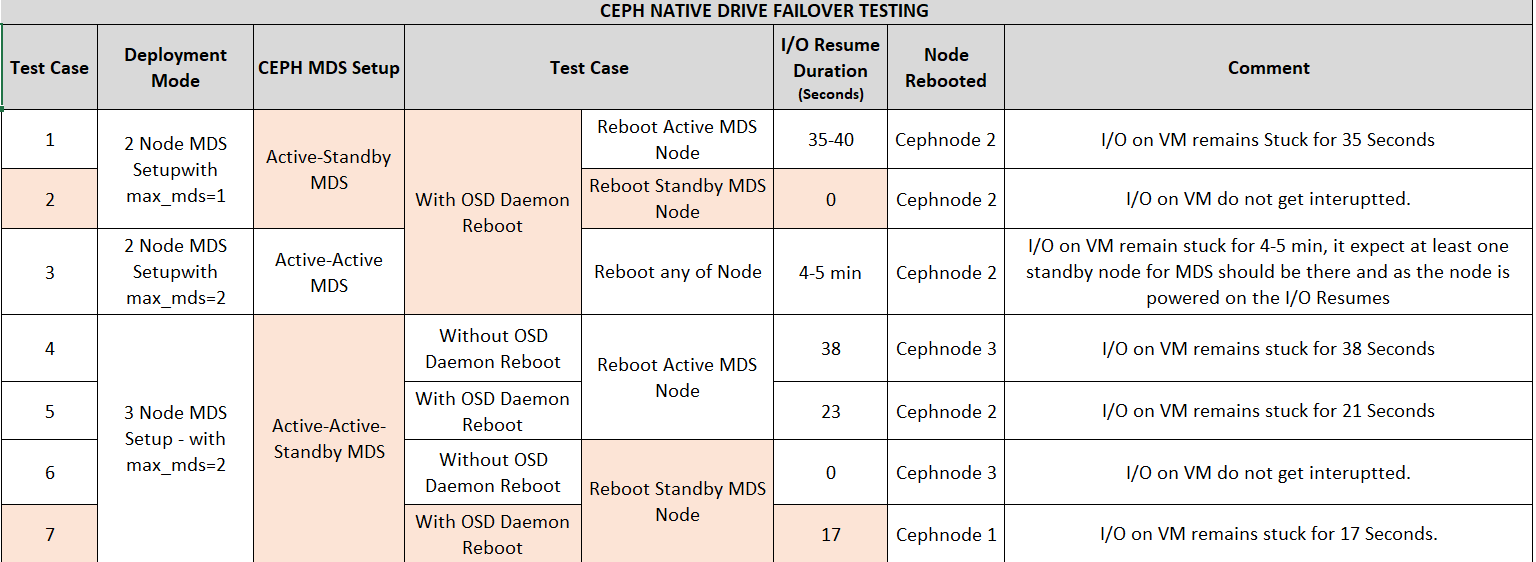

We tested the following failover scenarios for the native CephFS driver by mounting one sub-volume on a VM or client and running continuous I/O against it (one directory creation every second):

We have a few questions about the table above:

- Test cases 2 and 7 are the same scenario and differ only in the number of Ceph MDS daemons, yet the measured times differ. We expected the time to be zero in both cases, but test case 7 shows 17 seconds.

- Is there any parameter or other configuration we need to set in the Ceph cluster to reduce the failover time to a few seconds?

With the current default deployment, failover takes around 35-40 seconds.
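
To make the measurement reproducible, here is a minimal sketch of the kind of probe described above: it creates one directory per interval and reports the longest gap between successful creations. The function names `run_mkdir_probe` and `longest_gap` and the `probe-NNNNNN` directory naming are illustrative assumptions, not the exact tool we used, and the sketch assumes `mkdir` blocks during a failover rather than returning an error. A temporary local directory stands in for the CephFS mount point.

```python
import os
import tempfile
import time


def longest_gap(timestamps):
    """Return the largest interval (seconds) between consecutive timestamps."""
    if len(timestamps) < 2:
        return 0.0
    return max(later - earlier
               for earlier, later in zip(timestamps, timestamps[1:]))


def run_mkdir_probe(mount_path, count, interval=1.0):
    """Create `count` directories under mount_path, one per interval.

    Returns the monotonic-clock time at which each mkdir completed.
    If mkdir blocks while an MDS fails over, the gap between two
    consecutive completion times grows by roughly the stall duration.
    """
    completed = []
    for i in range(count):
        # Hypothetical naming scheme for the probe directories.
        os.mkdir(os.path.join(mount_path, f"probe-{i:06d}"))
        completed.append(time.monotonic())
        time.sleep(interval)
    return completed


if __name__ == "__main__":
    interval = 0.1
    # Assumption: replace this temporary directory with the real mount path.
    with tempfile.TemporaryDirectory() as mount_path:
        stamps = run_mkdir_probe(mount_path, count=5, interval=interval)
        stall = max(longest_gap(stamps) - interval, 0.0)
        print(f"longest stall beyond the interval: {stall:.2f} s")
```

Subtracting the interval from the longest gap gives an estimate of the time during which I/O was stalled, which is the figure reported as failover time.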

Best Regards,
