Apologies, I think the images I attached did not go through. The text in the email describes it all, but here they are again in case you want the visuals:

- Laura

On Fri, Oct 28, 2022 at 6:07 PM Laura Flores <lflores@xxxxxxxxxx> wrote:

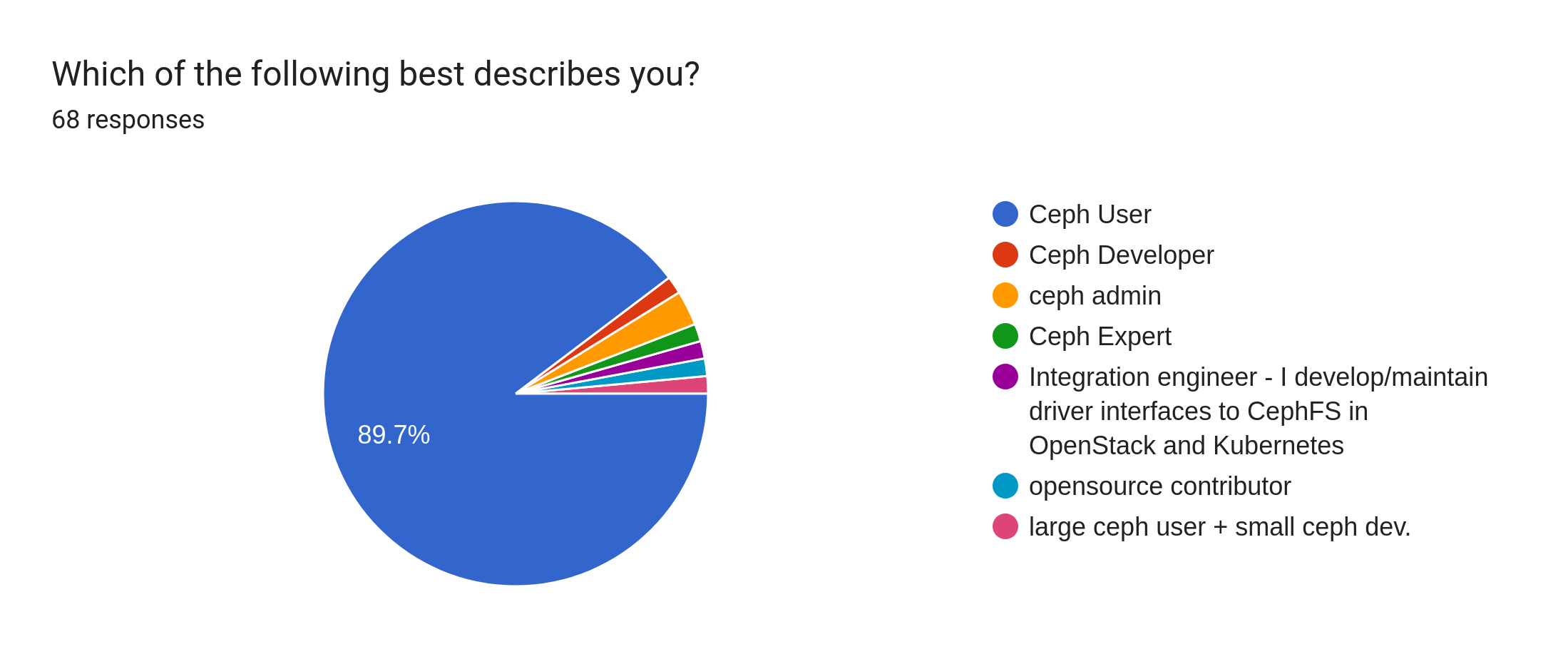

Hi Ceph Developers!

I wanted to provide an initial summary of results from the survey before a more detailed blog post is published. Thanks to everyone who submitted responses! Developers and doc writers value your responses immensely.

--------------------------

We got a total of 68 responses from the user / dev / sme-storage community.

Question 1: Which of the following best describes you?

- 89.7% of the responses, or 61 people, responded "Ceph User"

- 1.5% of the responses, or 1 person, responded "Ceph Developer"

- 8.8% of the responses, or 6 people, specified "Other", where they could type a more specific response:

  - 2.9%, or 2 people, responded "ceph admin"

  - 1.5%, or 1 person, responded "Ceph Expert"

  - 1.5%, or 1 person, responded "Integration engineer - I develop/maintain driver interfaces to CephFS in OpenStack and Kubernetes"

  - 1.5%, or 1 person, responded "opensource contributor"

  - 1.5%, or 1 person, responded "large ceph user + small ceph dev."

Question 2: Do you have experience using a Ceph cluster(s)?

- 100%, or 68 people, responded "Yes"

- 0%, or 0 people, responded "No"

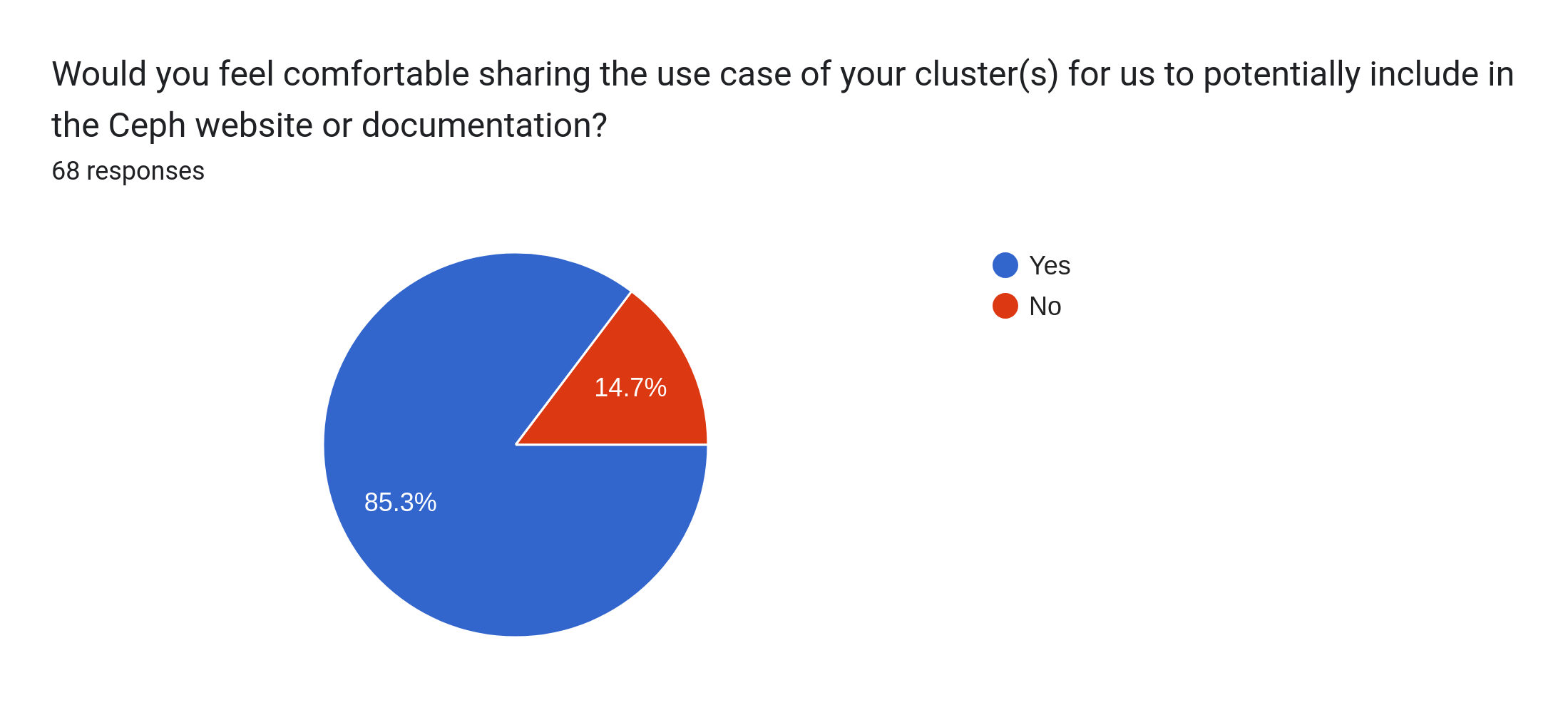

Question 3: Would you feel comfortable sharing the use case of your cluster(s) for us to potentially include in the Ceph website or documentation?

- 85.3%, or 58 people, responded "Yes"

- 14.7%, or 10 people, responded "No"

Question 4: Please describe the use case of your Ceph cluster(s):

People who answered "No" to the previous question were exited from the quiz early, since we only wanted to collect responses from people who felt comfortable sharing their use case publicly. As such, 58 people (those who answered "Yes" to the previous question) provided their use case. People were also given the option to either provide their name for credit, or remain anonymous.

Here are some use cases that really caught my eye! (More will be summarized in the upcoming blog post):

- "CEPHFS is used as the backend of the IRI Data Library, storing about 700TB of climate, geophysical, health and agriculture data in multiple formats. We needed a central backend storage that multiple compute clients can connect to in order to handle the millions of data downloads from our servers per month. https://iri.columbia.edu/resources/data-library/" ~ Anonymous Ceph User

- "At CERN, we use Ceph for block, object, and filesystem storage.

  The main use cases are:

  - RBDs for OpenStack VMs (bootable volumes, storage volumes, glance images)

  - RBDs for other Storage services (e.g., AFS and NFS are provided by running VMs with RBDs attached used as backend storage for the overlaying service)

  - CephFS for provisioning of Persistent Volumes to Kubernetes // OKD pods (via Openstack Manila integration)

  - CephFS as replacement for traditional networked POSIX filesystems (e.g., NFS)

  - CephFS for HPC resources (hyperconverged cluster with storage living on the same servers used for compute)

  - Object storage for container registries, GitLab artifacts, monitoring (prometheus, cortex)

  - Object storage for world-wide software distribution via CVMFS (https://cernvm.cern.ch/fs/)

  - Object storage as backup destination (S3-to-S3 with rclone, filesystem-to-S3 with restic)" ~ Enrico Bocchi; Ceph User

- "Data Store for Geographically Redundant Voicemail Server

  We use:

  - Ceph FS (mounted on a Linux handful of clients via elrepo's LT kernel)

  - Ceph S3 with Multisite (5-10 buckets, frequent read/writes)

  - Pubsub (one zone in each site, syncing from RGW local zone)

  Happy to provide more detail if required - wasn't sure how much to include!" ~ Alex Kershaw (Microsoft); Ceph User

- "We are a small MSP hosting an off-site storage for Veeam Backup repositories, using block and object storage in ceph. We evaluated several different distributed file systems but Ceph was the best option for backups where the load is about 75% write file IO. Leveraging XFS formatted block images to take advantage of Veeam's fast clone features. We use rados gateways for object storage to store Veeam Backups for Office365 for our tenants. The features that ceph provides are perfect for maintaining separate storage for each of our clients, whether that is separate blocks or different users and buckets in object storage. We just broke the 1 petabyte mark in our ceph cluster and have no plans to move to a different solution for the foreseeable future." ~ Anonymous Ceph User

- "Data archival as a replacement for a tape library" ~ Anonymous Ceph User

- "I have mostly been working with the clusters created by rhcsdashboard/ceph-dev to help me develop and test features for Ceph dashboard" ~ Ngwa Sedrick Meh, opensource contributor

- "I use the ceph cluster/s for early integration testing. We have a continuous integration system that deploys several versions of Ceph clusters from packages, via ceph-ansible and via cephadm. We then run provisioning/lifecycle management control plane operators on these Ceph clusters via OpenStack API." ~ Goutham Pacha Ravi; Integration engineer (I develop/maintain driver interfaces to CephFS in OpenStack and Kubernetes)

- "Large scale storage for a research facility. Ceph's ability to run on cheaper commodity hardware and scalability is valuable to us." ~ Andrew Ferris; Ceph User

- "Backing storage for an OpenStack cloud for National Science Foundation researchers" ~ Mike Lowe

- "Our use case is a CephFS filesystem used for a nonprofit organization to store large video files.

  The system needs to be resilient and be able to cope with hardware failures that can not be fixed immediately as there are not always technicians on hand.

  IO performance requirements are not very high (clients on 1GBit ethernet, and at most three in parallel). Price per TB of useful space is a factor that has to be considered.

  The system today is built on 10 Asus P11C Series motherboards with 3.6GHz i3-9100 CPU:s and 32 GB ram each. Each MB has a small NVME for OS and a 1TB NVME that is shared as cache for 6 SATA 3.5" HDD for storage. MON, MGR and other services are distributed among the 10 machines. Data is stored with replica x 3 policy.

  A separate machine with a 10GBit NIC is acting as CIFS gateway to the end users." ~ Anonymous Ceph User

- "Video storage" ~ Anonymous Ceph User

- "My Ceph cluster serves as the storage layer for my homelab. I have Ceph RBD volumes used as root disks for a number of netbooting hosts. I'm also using RBDs as CSI volumes for workloads in my Nomad cluster.

  CephFS serves as a remote filesystem for cases where I need concurrent access from several nodes.

  Finally, I am using the RadosGW to supply S3 storage to my Nomad workloads. It is also used as a target for all of my local backups." ~ Anonymous Ceph User

- "Local testing of applications and microservices that consume object storage using the S3 API. Running a minimal Ceph RGW on the developer's notebook, as a VM or container, in a similar way developers use to run other data services such as MySQL databases. A closely-related use case is running a minimal Ceph RGW to support integration testing on a CI/CD pipeline." ~ Anonymous Ceph User

- "Serving up large scale (+10PB) scientific data sets for researchers and scientists. Primarily Turbulence and Oceanography data via CephFS and S3 object storage." ~ Lance Joseph; Ceph User

------------------------------------

Thanks to the community for your engagement. And thank you for choosing Ceph!

Look out for a polished blog post coming up soon on ceph.io. :)

- Laura Flores

On Fri, Oct 28, 2022 at 4:35 PM Laura Flores <lflores@xxxxxxxxxx> wrote:

Hey everyone.

The survey will close in roughly half an hour. Thanks for all the amazing responses!

We plan to have a blog post up soon on ceph.io to summarize results.

- Laura Flores

On Thu, Oct 20, 2022 at 10:29 AM Laura Flores <lflores@xxxxxxxxxx> wrote:

Dear Ceph developers,

Ceph developers and doc writers are looking for responses from people in the user/dev community who have experience with a Ceph cluster. Our question: What is the use case of your Ceph cluster?

Since the first official Argonaut release in 2012, Ceph has greatly expanded its features and user base. With the next major release on the horizon, developers are now more curious than ever to know how people are using their clusters in the wild.

Our goal is to share these insightful results with the community, as well as make it easy for beginning developers (e.g. students from Google Summer of Code, Outreachy, or Grace Hopper) to understand all the ways that Ceph can be used.

In completing this survey, you'll have the option of providing your name or remaining anonymous. If your use case is chosen for inclusion on the website or documentation, we will be sure to honor your choice of being recognized or remaining anonymous.

Follow this link [3] to begin the survey. Feel free to reach out to me with any questions!

- Laura Flores

1. Ceph website: https://ceph.io/
2. Ceph documentation: https://docs.ceph.com/en/latest/

--

Laura Flores

She/Her/Hers

Software Engineer, Ceph Storage

Chicago, IL


_______________________________________________

Dev mailing list -- dev@xxxxxxx

To unsubscribe send an email to dev-leave@xxxxxxx