Hello.

Thanks to Robert's reply to 'Influencing the osd.id' I've learned two new commands today. I can now see that 'ceph osd metadata' confirms that I have two OSDs pointing to the same physical disk name:

root@ceph09:/# ceph osd metadata 12 | grep sdi

"bluestore_bdev_devices": "sdi",

"device_ids": "nvme0n1=SAMSUNG_MZPLL1T6HEHP-00003_S3HBNA0KA03264,sdi=SEAGATE_ST12000NM0027_ZJV5TX470000C9470ZWA",

"device_paths": "nvme0n1=/dev/disk/by-path/pci-0000:83:00.0-nvme-1,sdi=/dev/disk/by-path/pci-0000:41:00.0-sas-phy18-lun-0",

"devices": "nvme0n1,sdi",

"objectstore_numa_unknown_devices": "nvme0n1,sdi",

root@ceph09:/# ceph osd metadata 9 | grep sdi

"bluestore_bdev_devices": "sdi",

"device_ids": "nvme1n1=Samsung_SSD_983_DCT_M.2_1.92TB_S48DNC0N701016D,sdi=SEAGATE_ST12000NM0027_ZJV5SMTQ0000C9128FE0",

"device_paths": "nvme1n1=/dev/disk/by-path/pci-0000:01:00.0-nvme-1,sdi=/dev/disk/by-path/pci-0000:41:00.0-sas-phy6-lun-0",

"devices": "nvme1n1,sdi",

"objectstore_numa_unknown_devices": "sdi",

Even though OSD 12 still reports sdi, at least its device_ids entry carries the serial number of the failed disk. The disk with that serial number, however, currently resides at /dev/sdc.
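The comparison above can be scripted. The following is a minimal sketch, assuming the full 'ceph osd metadata' output (run without an OSD id) has been parsed from JSON into a list of dicts with "id", "hostname", "bluestore_bdev_devices" and "device_ids" keys. The helper names are hypothetical, and the sample records contain only the fields quoted above, plus a hostname taken from the shell prompt.

```python
from collections import defaultdict


def parse_device_ids(device_ids):
    """Turn 'sdi=SERIAL_A,nvme0n1=SERIAL_B' into {'sdi': 'SERIAL_A', ...}."""
    result = {}
    for entry in device_ids.split(","):
        name, _, ident = entry.partition("=")
        if name.strip():
            result[name.strip()] = ident.strip()
    return result


def find_shared_device_names(metadata):
    """Return {(hostname, device): [(osd_id, device_id), ...]} for every
    block device name claimed by more than one OSD on the same host."""
    claims = defaultdict(list)
    for osd in metadata:
        ids = parse_device_ids(osd.get("device_ids", ""))
        for dev in osd.get("bluestore_bdev_devices", "").split(","):
            dev = dev.strip()
            if dev:
                key = (osd.get("hostname", ""), dev)
                claims[key].append((osd["id"], ids.get(dev, "")))
    return {key: osds for key, osds in claims.items() if len(osds) > 1}


# Sample records built from the grep output above. The hostname is an
# assumption based on the shell prompt, not a value shown in the output.
SAMPLE = [
    {
        "id": 12,
        "hostname": "ceph09",
        "bluestore_bdev_devices": "sdi",
        "device_ids": "nvme0n1=SAMSUNG_MZPLL1T6HEHP-00003_S3HBNA0KA03264,"
                      "sdi=SEAGATE_ST12000NM0027_ZJV5TX470000C9470ZWA",
    },
    {
        "id": 9,
        "hostname": "ceph09",
        "bluestore_bdev_devices": "sdi",
        "device_ids": "nvme1n1=Samsung_SSD_983_DCT_M.2_1.92TB_S48DNC0N701016D,"
                      "sdi=SEAGATE_ST12000NM0027_ZJV5SMTQ0000C9128FE0",
    },
]

for (host, dev), osds in find_shared_device_names(SAMPLE).items():
    for osd_id, device_id in osds:
        print(f"{host} {dev}: osd.{osd_id} -> {device_id}")
```

On a live cluster the list could come from json.loads() applied to the command's output. The sketch only reports the overlap; it does not change any OSD record.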

Is there a way to force the record for the destroyed OSD to point to /dev/sdc?

Thanks.

-Dave

--

On Mon, Oct 28, 2024 at 11:47 AM Dave Hall <kdhall@xxxxxxxxxxxxxx> wrote:

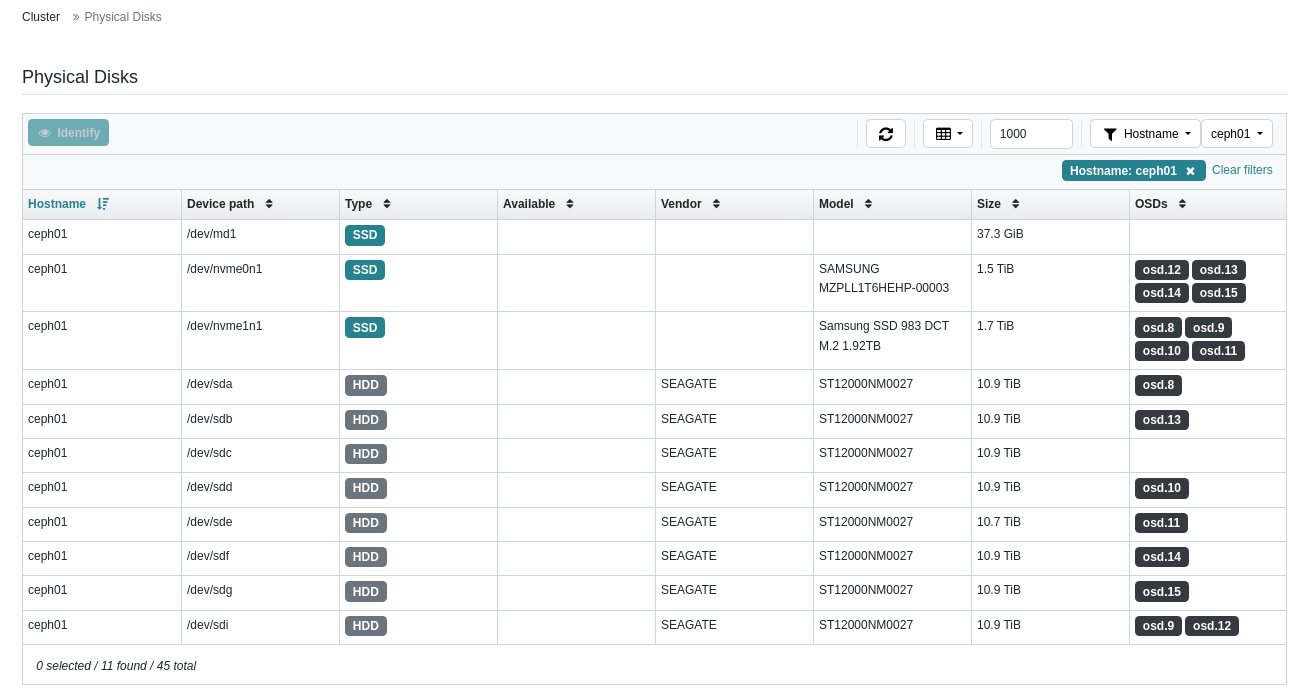

Hello.

The following is on a Reef Podman installation:

In attempting to deal over the weekend with a failed OSD disk, I have somehow managed to have two OSDs pointing to the same HDD, as shown below.

To be sure, the failure occurred on OSD.12, which was pointing to /dev/sdi.

I disabled the systemd unit for OSD.12 because it kept restarting. I then destroyed it.

When I physically removed the failed disk and rebooted the system, the disk enumeration changed. So, before the reboot, OSD.12 was using /dev/sdi. After the reboot, OSD.9 moved to /dev/sdi.

I didn't know that I had an issue until 'ceph-volume lvm prepare' failed. It was in the process of investigating this that I found the above. Right now I have reinserted the failed disk and rebooted, hoping that OSD.12 would find its old disk by some other means, but no joy.

My concern is that if I run 'ceph osd rm' I could take out OSD.9. I could take the precaution of marking OSD.9 out and let it drain, but I'd rather not. I am, perhaps, more inclined to manually clear the lingering configuration associated with OSD.12 if someone could send me the list of commands. Otherwise, I'm open to suggestions.

Thanks.

-Dave

--
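Because kernel names such as /dev/sdi can move between reboots, one way to see where a given disk currently lives is to resolve the udev symlinks under /dev/disk/by-id. The following is a minimal sketch, assuming that directory contains an entry whose name includes the disk's serial number; whether it does depends on the udev rules and the drive type. The function name is hypothetical.

```python
import os
import re


def current_names_for_serial(serial, by_id_dir="/dev/disk/by-id"):
    """Return the kernel device names (e.g. ['sdc']) that by-id symlinks
    containing `serial` currently resolve to, ignoring partition links."""
    if not os.path.isdir(by_id_dir):
        return []
    names = set()
    for entry in os.listdir(by_id_dir):
        # Skip unrelated disks and per-partition links such as '...-part1'.
        if serial not in entry or re.search(r"-part\d+$", entry):
            continue
        target = os.path.realpath(os.path.join(by_id_dir, entry))
        names.add(os.path.basename(target))
    return sorted(names)
```

Called with the serial portion of the device_ids value reported for OSD.12, this would show which /dev name the failed disk holds after the latest reboot, provided a matching by-id entry exists. An empty list means no entry matched, not that the disk is absent.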

_______________________________________________ ceph-users mailing list -- ceph-users@xxxxxxx To unsubscribe send an email to ceph-users-leave@xxxxxxx