Hello.

The following is on a Reef Podman installation:

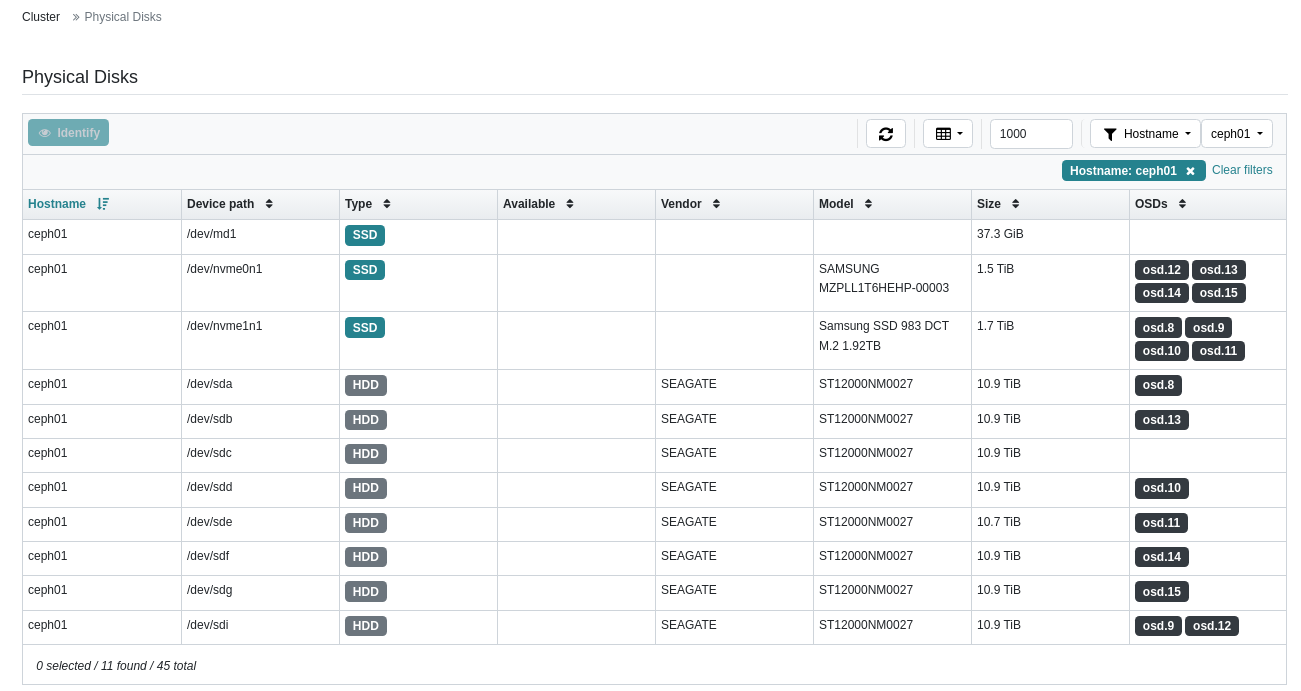

While dealing with a failed OSD disk over the weekend, I somehow ended up with two OSDs pointing to the same HDD, as shown below.

To be clear, the failure occurred on OSD.12, which was pointing to /dev/sdi.

I disabled the systemd unit for OSD.12 because it kept restarting. I then destroyed it.

When I physically removed the failed disk and rebooted the system, the disk enumeration changed. So, before the reboot, OSD.12 was using /dev/sdi. After the reboot, OSD.9 moved to /dev/sdi.

I didn't know that I had an issue until 'ceph-volume lvm prepare' failed. It was while investigating that failure that I found the above. I have since reinserted the failed disk and rebooted, hoping that OSD.12 would find its old disk by some other means, but no joy.

My concern is that if I run 'ceph osd rm' I could take out OSD.9. I could take the precaution of marking OSD.9 out and letting it drain, but I'd rather not. I am, perhaps, more inclined to manually clear the lingering configuration associated with OSD.12, if someone could send me the list of commands. Otherwise, I'm open to suggestions.

Thanks.

-Dave
