Cheers everybody.

What we've tried yesterday:

- compact all OSDs

- change disk scheduler from mq-deadline to none

- disable kernel mitigation for cpu vulnerabilities

none of these settings really brought the numbers close to the octopus.

Next thing I could try is to use the alternate tuning of this blog post: https://ceph.io/en/news/blog/2022/rocksdb-tuning-deep-dive/

Are there any other ides?

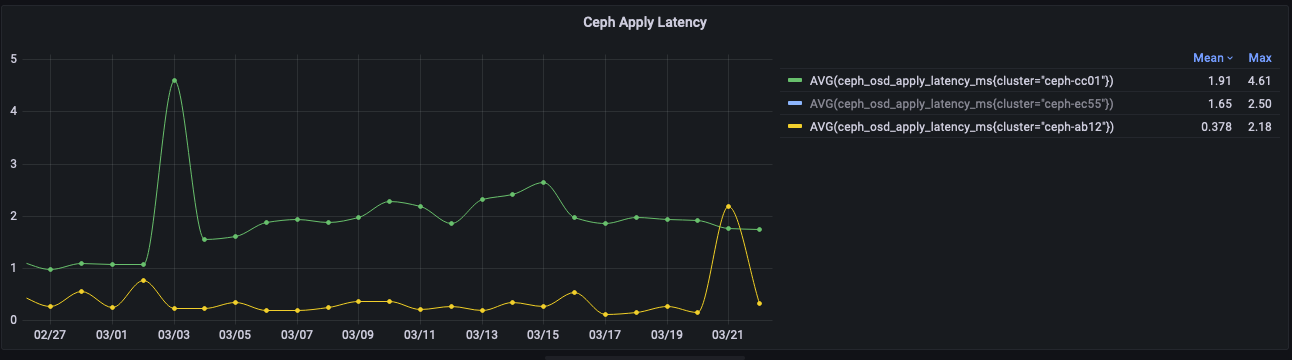

This is the graph for the avg commit latency (resolution 1d) for the last month. Green is pacific, yellow is nautilus. We've update from octopus to pacific where the first high peak is.

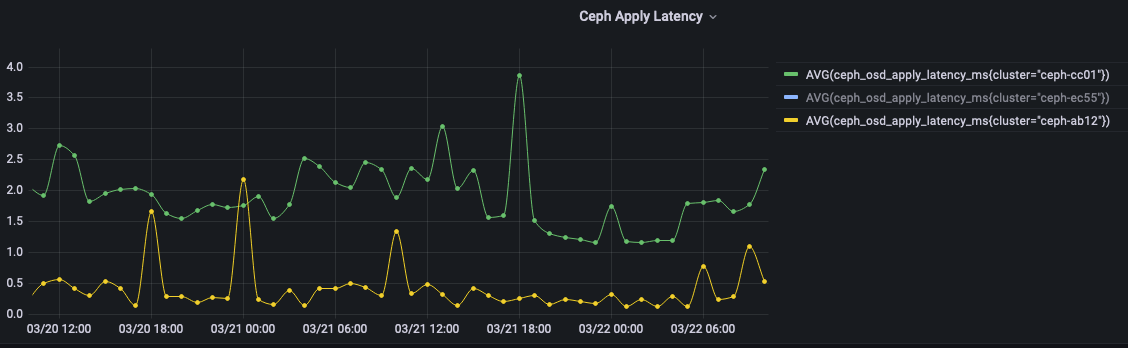

same data for the last two days with 1h resolution

Am Di., 21. März 2023 um 15:31 Uhr schrieb Boris Behrens <bb@xxxxxxxxx>:

Hi Igor,i've offline compacted all the OSDs and reenabled the bluefs_buffered_ioIt didn't change anything and the commit and apply latencies are around 5-10 times higher than on our nautlus cluster. The pacific cluster got a 5 minute mean over all OSDs 2.2ms, while the nautilus cluster is around 0.2 - 0.7 ms.I also see these kind of logs. Google didn't really help:2023-03-21T14:08:22.089+0000 7efe7b911700 3 rocksdb: [le/block_based/filter_policy.cc:579] Using legacy Bloom filter with high (20) bits/key. Dramatic filter space and/or accuracy improvement is available with format_version>=5.Am Di., 21. März 2023 um 10:46 Uhr schrieb Igor Fedotov <igor.fedotov@xxxxxxxx>:Hi Boris,

additionally you might want to manually compact RocksDB for every OSD.

Thanks,

Igor

On 3/21/2023 12:22 PM, Boris Behrens wrote:

Disabling the write cache and the bluefs_buffered_io did not change anything. What we see is that larger disks seem to be the leader in therms of slowness (we have 70% 2TB, 20% 4TB and 10% 8TB SSDs in the cluster), but removing some of the 8TB disks and replace them with 2TB (because it's by far the majority and we have a lot of them) disks did also not change anything. Are there any other ideas I could try. Customer start to complain about the slower performance and our k8s team mentions problems with ETCD because the latency is too high. Would it be an option to recreate every OSD? Cheers Boris Am Di., 28. Feb. 2023 um 22:46 Uhr schrieb Boris Behrens <bb@xxxxxxxxx>:Hi Josh, thanks a lot for the breakdown and the links. I disabled the write cache but it didn't change anything. Tomorrow I will try to disable bluefs_buffered_io. It doesn't sound that I can mitigate the problem with more SSDs. Am Di., 28. Feb. 2023 um 15:42 Uhr schrieb Josh Baergen < jbaergen@xxxxxxxxxxxxxxxx>:Hi Boris, OK, what I'm wondering is whether https://tracker.ceph.com/issues/58530 is involved. There are two aspects to that ticket: * A measurable increase in the number of bytes written to disk in Pacific as compared to Nautilus * The same, but for IOPS Per the current theory, both are due to the loss of rocksdb log recycling when using default recovery options in rocksdb 6.8; Octopus uses version 6.1.2, Pacific uses 6.8.1. 16.2.11 largely addressed the bytes-written amplification, but the IOPS amplification remains. In practice, whether this results in a write performance degradation depends on the speed of the underlying media and the workload, and thus the things I mention in the next paragraph may or may not be applicable to you. There's no known workaround or solution for this at this time. In some cases I've seen that disabling bluefs_buffered_io (which itself can cause IOPS amplification in some cases) can help; I think most folks do this by setting it in local conf and then restarting OSDs in order to gain the config change. Something else to consider is https://docs.ceph.com/en/quincy/start/hardware-recommendations/#write-caches , as sometimes disabling these write caches can improve the IOPS performance of SSDs. Josh On Tue, Feb 28, 2023 at 7:19 AM Boris Behrens <bb@xxxxxxxxx> wrote:Hi Josh, we upgraded 15.2.17 -> 16.2.11 and we only use rbd workload. Am Di., 28. Feb. 2023 um 15:00 Uhr schrieb Josh Baergen <jbaergen@xxxxxxxxxxxxxxxx>:Hi Boris, Which version did you upgrade from and to, specifically? And what workload are you running (RBD, etc.)? Josh On Tue, Feb 28, 2023 at 6:51 AM Boris Behrens <bb@xxxxxxxxx> wrote:Hi, today I did the first update from octopus to pacific, and it lookslike theavg apply latency went up from 1ms to 2ms. All 36 OSDs are 4TB SSDs and nothing else changed. Someone knows if this is an issue, or am I just missing a configvalue?Cheers Boris _______________________________________________ ceph-users mailing list -- ceph-users@xxxxxxx To unsubscribe send an email to ceph-users-leave@xxxxxxx-- Die Selbsthilfegruppe "UTF-8-Probleme" trifft sich diesmal abweichendim groüen Saal.-- Die Selbsthilfegruppe "UTF-8-Probleme" trifft sich diesmal abweichend im groüen Saal.

--Die Selbsthilfegruppe "UTF-8-Probleme" trifft sich diesmal abweichend im groüen Saal.

--

Die Selbsthilfegruppe "UTF-8-Probleme" trifft sich diesmal abweichend im groüen Saal.

_______________________________________________ ceph-users mailing list -- ceph-users@xxxxxxx To unsubscribe send an email to ceph-users-leave@xxxxxxx