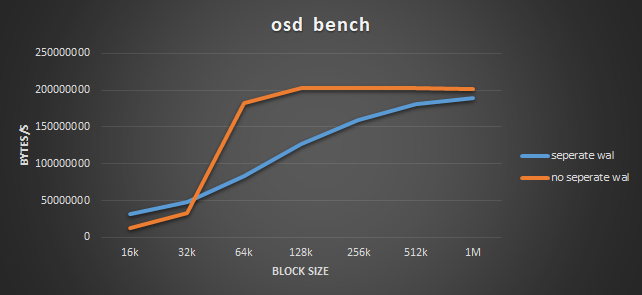

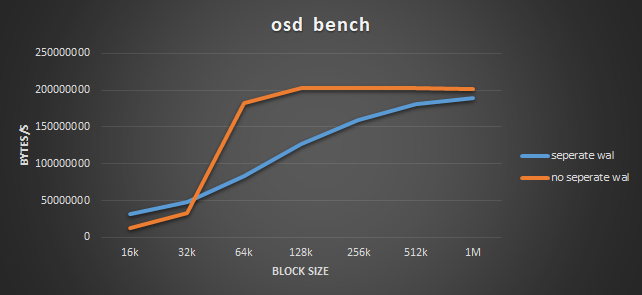

I suppose that write operations may use the WAL more when the block size is small.

rainning <tweetypie@xxxxxx> wrote on Thu, Jul 16, 2020 at 10:39 AM:

I tested osd bench with different block sizes: 1MB, 512KB, 256KB, 128KB, 64KB, 32KB, and 16KB. osd.2 is from the cluster whose OSDs have the better 4KB osd bench results, and osd.30 is from the cluster whose OSDs have the lower 4KB osd bench results. From 1MB down to 64KB, osd.30 was faster than osd.2. At 32KB, however, osd.30 dropped sharply (from about 182 MB/s at 64KB to about 34 MB/s) and fell behind osd.2.
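To make the crossover easier to see, here is a small sketch that puts the two sets of results side by side. The `bytes_per_sec` values are copied from the outputs below. The helper names `iops` and `compare` are made up for illustration, and IOPS is derived as `bytes_per_sec / blocksize` because the osd.2 output has no `iops` field.

```python
# bytes_per_sec copied from the osd bench outputs in this message,
# keyed by block size in bytes.
OSD2 = {
    1048576: 188747963,
    524288: 181071543,
    262144: 159007035,
    131072: 127179122,
    65536: 83365482,
    32768: 48351258,
    16384: 31725841,
}
OSD30 = {
    1048576: 200894474.890259,
    524288: 202476205.680661,
    262144: 202780204.655892,
    131072: 202737591.242988,
    65536: 181772360.338257,
    32768: 33796507.598640,
    16384: 12578102.861185,
}


def iops(bytes_per_sec, blocksize):
    """Derive IOPS from throughput (hypothetical helper, not a ceph API)."""
    return bytes_per_sec / blocksize


def compare(a, b):
    """Return (blocksize, a MB/s, b MB/s, b/a ratio, a IOPS, b IOPS) rows,
    largest block size first."""
    rows = []
    for bs in sorted(a, reverse=True):
        rows.append((bs, a[bs] / 1e6, b[bs] / 1e6, b[bs] / a[bs],
                     iops(a[bs], bs), iops(b[bs], bs)))
    return rows


if __name__ == "__main__":
    print(f"{'block':>8} {'osd.2 MB/s':>11} {'osd.30 MB/s':>12} "
          f"{'ratio':>6} {'osd.2 IOPS':>11} {'osd.30 IOPS':>12}")
    for bs, mb_a, mb_b, ratio, io_a, io_b in compare(OSD2, OSD30):
        print(f"{bs:>8} {mb_a:>11.1f} {mb_b:>12.1f} "
              f"{ratio:>6.2f} {io_a:>11.0f} {io_b:>12.0f}")
```

A ratio above 1 means osd.30 is faster at that block size. The derived IOPS for osd.30 can be checked against the `iops` field that its own output reports.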

root@cmn01:~# ceph tell osd.2 bench 1073741824 1048576

{

"bytes_written": 1073741824,

"blocksize": 1048576,

"bytes_per_sec": 188747963

}

root@cmn01:~# ceph tell osd.2 bench 1073741824 524288

{

"bytes_written": 1073741824,

"blocksize": 524288,

"bytes_per_sec": 181071543

}

root@cmn01:~# ceph tell osd.2 bench 786432000 262144

{

"bytes_written": 786432000,

"blocksize": 262144,

"bytes_per_sec": 159007035

}

root@cmn01:~# ceph tell osd.2 bench 393216000 131072

{

"bytes_written": 393216000,

"blocksize": 131072,

"bytes_per_sec": 127179122

}

root@cmn01:~# ceph tell osd.2 bench 196608000 65536

{

"bytes_written": 196608000,

"blocksize": 65536,

"bytes_per_sec": 83365482

}

root@cmn01:~# ceph tell osd.2 bench 98304000 32768

{

"bytes_written": 98304000,

"blocksize": 32768,

"bytes_per_sec": 48351258

}

root@cmn01:~# ceph tell osd.2 bench 49152000 16384

{

"bytes_written": 49152000,

"blocksize": 16384,

"bytes_per_sec": 31725841

}

------------------------------------------------------------------------------------------------------------------

root@stor-mgt01:~# ceph tell osd.30 bench 1073741824 1048576

{

"bytes_written": 1073741824,

"blocksize": 1048576,

"elapsed_sec": 5.344805,

"bytes_per_sec": 200894474.890259,

"iops": 191.587901

}

root@stor-mgt01:~# ceph tell osd.30 bench 1073741824 524288

{

"bytes_written": 1073741824,

"blocksize": 524288,

"elapsed_sec": 5.303052,

"bytes_per_sec": 202476205.680661,

"iops": 386.192714

}

root@stor-mgt01:~# ceph tell osd.30 bench 786432000 262144

{

"bytes_written": 786432000,

"blocksize": 262144,

"elapsed_sec": 3.878248,

"bytes_per_sec": 202780204.655892,

"iops": 773.545092

}

root@stor-mgt01:~# ceph tell osd.30 bench 393216000 131072

{

"bytes_written": 393216000,

"blocksize": 131072,

"elapsed_sec": 1.939532,

"bytes_per_sec": 202737591.242988,

"iops": 1546.765070

}

root@stor-mgt01:~# ceph tell osd.30 bench 196608000 65536

{

"bytes_written": 196608000,

"blocksize": 65536,

"elapsed_sec": 1.081617,

"bytes_per_sec": 181772360.338257,

"iops": 2773.626104

}

root@stor-mgt01:~# ceph tell osd.30 bench 98304000 32768

{

"bytes_written": 98304000,

"blocksize": 32768,

"elapsed_sec": 2.908703,

"bytes_per_sec": 33796507.598640,

"iops": 1031.387561

}

root@stor-mgt01:~# ceph tell osd.30 bench 49152000 16384

{

"bytes_written": 49152000,

"blocksize": 16384,

"elapsed_sec": 3.907744,

"bytes_per_sec": 12578102.861185,

"iops": 767.706473

}

------------------ Original Message ------------------

From: "rainning" <tweetypie@xxxxxx>;

Sent: Thursday, July 16, 2020, 9:42 AM

To: "Zhenshi Zhou" <deaderzzs@xxxxxxxxx>;

Cc: "ceph-users" <ceph-users@xxxxxxx>;

Subject: Re: Re: osd bench with or without a separate WAL device deployed

Hi Zhenshi,

I did try with a bigger block size. Interestingly, the one whose 4KB osd bench was lower performed slightly better in the 4MB osd bench.

Let me try some other, bigger block sizes, e.g. 16K, 64K, 128K, 1M etc., to see if there is any pattern.

Moreover, I did compare the two SSDs; they are INTEL SSDSC2KB480G8 and INTEL SSDSC2KB960G8 respectively. Performance-wise, there is not much difference.

Thanks,

Ning

------------------ Original Message ------------------

From: "Zhenshi Zhou" <deaderzzs@xxxxxxxxx>;

Sent: Thursday, July 16, 2020, 9:24 AM

To: "rainning" <tweetypie@xxxxxx>;

Cc: "ceph-users" <ceph-users@xxxxxxx>;

Subject: Re: osd bench with or without a separate WAL device deployed

Maybe you can try writing with a bigger block size and compare the results.

For bluestore, there are two write modes. One is COW (copy-on-write), the

other is RMW (read-modify-write). AFAIK only RMW uses the WAL, to protect the

data if a write is interrupted.
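A sketch of how such a block-size comparison could be scripted. The `ceph tell osd.N bench <total_bytes> <block_size>` form is the one used elsewhere in this thread. The function names and the `BLOCKS_PER_RUN` and `MAX_TOTAL` constants are made up for illustration; they only mirror the totals that appear in the quoted runs (3000 blocks per run, capped at 1073741824 bytes). `run_bench` assumes the command prints its JSON result on stdout, which may depend on the Ceph version.

```python
import json
import subprocess

# Assumptions taken from the runs quoted in this thread, not from Ceph docs:
# smaller block sizes were benchmarked with 3000 blocks per run, and the
# 1MB and 512KB runs used a total of 1 GiB.
BLOCKS_PER_RUN = 3000
MAX_TOTAL = 1073741824

BLOCK_SIZES = [1048576, 524288, 262144, 131072, 65536, 32768, 16384, 4096]


def bench_args(osd_id, blocksize):
    """Build the argv for one 'ceph tell osd.N bench' run."""
    total = min(BLOCKS_PER_RUN * blocksize, MAX_TOTAL)
    return ["ceph", "tell", f"osd.{osd_id}", "bench",
            str(total), str(blocksize)]


def run_bench(osd_id, blocksize):
    """Run one bench and parse its JSON output (assumed to be on stdout)."""
    proc = subprocess.run(bench_args(osd_id, blocksize),
                          check=True, capture_output=True, text=True)
    return json.loads(proc.stdout)


if __name__ == "__main__":
    # Dry run: only print the commands, so nothing is written to an OSD.
    for bs in BLOCK_SIZES:
        print(" ".join(bench_args(2, bs)))
```

Running the same list of sizes against one OSD from each cluster gives results that can be compared size by size.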

rainning <tweetypie@xxxxxx> wrote on Wed, Jul 15, 2020 at 11:04 PM:

> Hi Zhenshi, thanks very much for the reply.

>

> Yes, I know it is odd that the bluestore is deployed with only a separate

> db device and no WAL device. The cluster was deployed in k8s using rook.

> I was told it was because the rook version we used didn't support that.

>

> Moreover, the comparison was made with osd bench, so the network should not

> be a factor. As for the storage node hardware, although the two clusters are

> indeed different, their CPUs and HDDs have almost the same performance

> numbers. I haven't compared the SSDs that are used as db/WAL devices; they might

> cause a difference, but I am not sure they can cause a twofold difference.

>

> ---Original---

> *From:* "Zhenshi Zhou"<deaderzzs@xxxxxxxxx>

> *Date:* Wed, Jul 15, 2020 18:39 PM

> *To:* "rainning"<tweetypie@xxxxxx>;

> *Cc:* "ceph-users"<ceph-users@xxxxxxx>;

> *Subject:* Re: osd bench with or without a separate WAL

> device deployed

>

> I have deployed clusters either with separate db/wal or with db/wal/data

> together. I have never tried having only a separate db.

> AFAIK the wal does have an effect on writes, but I'm not sure it could

> double the bench value. Hardware and

> network environment are also important factors.

>

> rainning <tweetypie@xxxxxx> wrote on Wed, Jul 15, 2020 at 4:35 PM:

>

> > Hi all,

> >

> >

> > I am wondering if there is any performance comparison done on osd bench

> > with and without a separate WAL device deployed, given that there is always

> > a separate db device deployed on SSD in both cases.

> >

> >

> > The reason I am asking this question is that we have two clusters: OSDs

> > in one have separate db and WAL devices deployed on SSD, but OSDs in the other

> > only have a separate db device deployed. We found that the 4KB osd bench (i.e.

> > ceph tell osd.X bench 12288000 4096) for the ones with a separate WAL

> > device was twice that of the ones without a separate WAL device. Is the

> > performance difference caused by the separate WAL device?

> >

> >

> > Thanks,

> > Ning

> > _______________________________________________

> > ceph-users mailing list -- ceph-users@xxxxxxx

> > To unsubscribe send an email to ceph-users-leave@xxxxxxx

> >
