Hello folks,

I am trying to use an entire SSD partition as the journal for an OSD, e.g. /dev/sdf1 (a 70 GB partition). But when I look up the OSD config with the command below, I see that ceph-deploy has set osd_journal_size to 5 GB (5120 MB). More confusingly, the OSD log (/var/log/ceph/ceph-osd.x.log) shows the correct size, in blocks.
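To compare the partition's real size with the 5120 value reported by the admin socket, something like the following Python sketch could help. It assumes a Linux host where /sys/class/block/sdf1/size reports the partition size in 512-byte sectors, and it treats osd_journal_size as MiB (2^20 bytes); both are my assumptions, not something taken from ceph-deploy.

```python
"""Compare a journal partition's real size with osd_journal_size.

Assumptions (not from the original post): Linux sysfs is available, and
/sys/class/block/<name>/size reports the size in 512-byte sectors.
"""
from pathlib import Path

SECTOR_BYTES = 512       # sysfs "size" is counted in 512-byte units
MIB = 1024 * 1024        # assumption: osd_journal_size megabytes are MiB


def sectors_to_mib(sectors: int) -> int:
    """Convert a 512-byte sector count to whole MiB (rounded down)."""
    return sectors * SECTOR_BYTES // MIB


def partition_size_mib(name: str) -> int:
    """Read the size of a partition such as 'sdf1' from sysfs, in MiB."""
    sectors = int(Path("/sys/class/block", name, "size").read_text().strip())
    return sectors_to_mib(sectors)


if __name__ == "__main__":
    configured_mib = 5120  # value returned by "config get osd_journal_size"
    try:
        actual_mib = partition_size_mib("sdf1")
    except OSError as exc:
        print(f"could not read partition size: {exc}")
    else:
        print(f"/dev/sdf1: {actual_mib} MiB, osd_journal_size: {configured_mib} MiB")
```

On a 70 GB partition the first number should be far larger than 5120, which is exactly the mismatch I am asking about.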

So my question is: is Ceph using the entire partition for my OSD journal, or just 5 GB (the ceph-deploy default)?

I know I can set the journal size per OSD, or globally for all OSDs, in ceph.conf. I am using Jewel 10.2.7.
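For reference, a minimal sketch of what those two ceph.conf settings could look like, generated with Python's configparser. The [osd] section applies to every OSD and an [osd.N] section overrides it for one daemon; the values below (5120 for all OSDs, 0 for osd.3) are purely illustrative. ceph.conf accepts either spaces or underscores in option names, so "osd journal size" and "osd_journal_size" refer to the same option.

```python
"""Build a ceph.conf fragment with an OSD-wide and a per-OSD journal size.

Illustrative only: the values and the osd.3 override are hypothetical.
"""
import configparser
import io


def journal_size_fragment(default_mb: int, overrides: dict[int, int]) -> str:
    """Return ceph.conf text setting 'osd journal size' for all OSDs and per OSD.

    default_mb applies to every OSD via the [osd] section; each entry in
    overrides maps an OSD id to its own value in an [osd.<id>] section.
    """
    conf = configparser.ConfigParser()
    conf["osd"] = {"osd journal size": str(default_mb)}
    for osd_id, size_mb in sorted(overrides.items()):
        conf[f"osd.{osd_id}"] = {"osd journal size": str(size_mb)}
    buf = io.StringIO()
    conf.write(buf)
    return buf.getvalue()


if __name__ == "__main__":
    print(journal_size_fragment(5120, {3: 0}))
```

Running it prints an [osd] section followed by an [osd.3] section, which could then be pasted into ceph.conf.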

```
ceph --admin-daemon /var/run/ceph/ceph-osd.3.asok config get osd_journal_size
{
    "osd_journal_size": "5120"
}
```
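The same query can be scripted. Below is a rough Python sketch, assuming the ceph CLI is on the PATH and the admin socket path from the command above exists. Note that the admin socket returns the value as a JSON string ("5120"), so it has to be converted to an integer.

```python
"""Query an OSD's admin socket and parse the osd_journal_size value.

Assumes the ceph CLI is installed and the socket path below exists.
"""
import json
import shutil
import subprocess

ASOK = "/var/run/ceph/ceph-osd.3.asok"


def parse_config_get(output: str, option: str) -> int:
    """Extract an integer option from 'config get' JSON output.

    The admin socket returns values as strings, e.g. {"osd_journal_size": "5120"}.
    """
    return int(json.loads(output)[option])


def query_journal_size(asok: str = ASOK) -> int:
    """Run 'ceph --admin-daemon <asok> config get osd_journal_size'."""
    out = subprocess.run(
        ["ceph", "--admin-daemon", asok, "config", "get", "osd_journal_size"],
        check=True, capture_output=True, text=True,
    ).stdout
    return parse_config_get(out, "osd_journal_size")


if __name__ == "__main__":
    if shutil.which("ceph") is None:
        print("ceph CLI not found; skipping query")
    else:
        try:
            print(f"osd_journal_size = {query_journal_size()} MB")
        except (subprocess.CalledProcessError, OSError) as exc:
            print(f"admin socket query failed: {exc}")
```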

I tried the following, but config get for osd_journal_size then returns 0, which is what I set it to, so now I am even more confused.

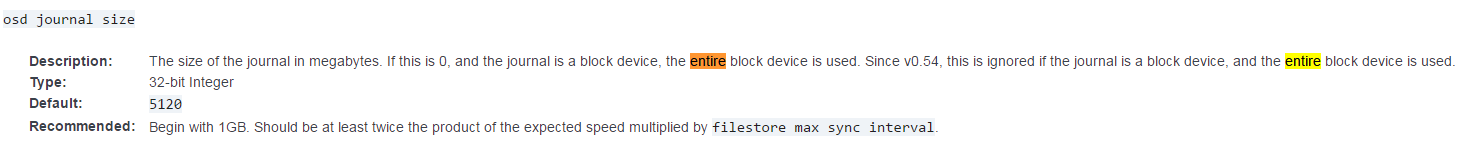

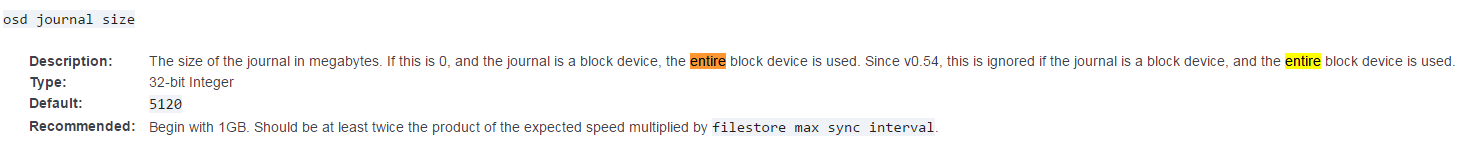

http://docs.ceph.com/docs/master/rados/configuration/osd-config-ref/

Any info is appreciated.

PS: I searched for a similar issue, but that thread got no response.

--

Deepak


ceph-users mailing list

ceph-users@xxxxxxxxxxxxxx

http://lists.ceph.com/listinfo.cgi/ceph-users-ceph.com
