|

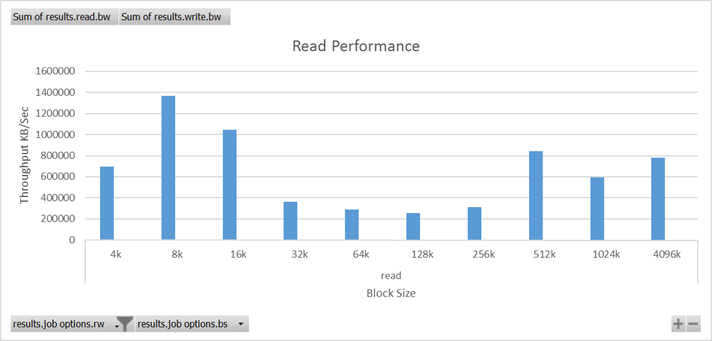

Hi, I am showing below fio results for Sequential Read on my Ceph cluster. I am trying to understand this pattern: - why there is a dip in the performance for block sizes 32k-256k? - is this an expected performance graph? - have you seen this kind of pattern before

My cluster details: Ceph: Hammer release Cluster: 6 nodes (dual Intel sockets) each with 20 OSDs and 4 SSDs (5 OSD journals on one SSD) Client network: 10Gbps Cluster network: 10Gbps FIO test:

- 2 Client servers - Sequential Read - Run time of 600 seconds - Filesize = 1TB - 10 rbd images per client - Queue depth=16 Any ideas on tuning this cluster? Where should I look first? Thanks, - epk Legal Disclaimer: The information contained in this message may be privileged and confidential. It is intended to be read only by the individual or entity to whom it is addressed or by their designee. If the reader of this message is not the intended recipient, you are on notice that any distribution of this message, in any form, is strictly prohibited. If you have received this message in error, please immediately notify the sender and delete or destroy any copy of this message! |

_______________________________________________ ceph-users mailing list ceph-users@xxxxxxxxxxxxxx http://lists.ceph.com/listinfo.cgi/ceph-users-ceph.com