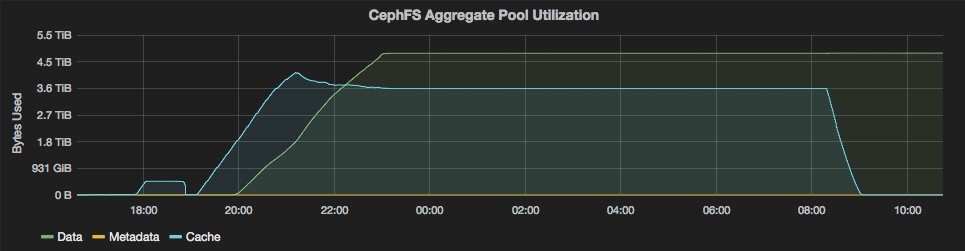

Greetings experts,

I've got a test set up with CephFS configured to use an erasure-coded pool + cache tier on 0.94.2.

I have been writing lots of data to fill the cache to observe the behavior and performance when it starts evicting objects to the erasure-coded pool. The thing I have noticed is that after deleting the files via 'rm' through my CephFS kernel client, the cache is emptied but the objects that were evicted to the EC pool stick around.

I've attached an image that demonstrates what I'm seeing. Is this intended behavior, or have I misconfigured something?

Thanks,
Lincoln Bryant

_______________________________________________
ceph-users mailing list
ceph-users@xxxxxxxxxxxxxx
http://lists.ceph.com/listinfo.cgi/ceph-users-ceph.com