- Where is Swift data written?

Ans: .rgw for containers and .rgw.buckets for objects.

- How could we achieve a better distribution over the disks?

Hi Hugo

Many, many thanks! The problem was the missing /auth in the URL for ssbench.

What I can say so far:

1) It is important to disable logging on the radosgw; we have the following two statements in ceph.conf:

rgw_enable_ops_log = false

debug_rgw = 0

2) In our case the disks are the limiting factor, not the gateway:

Device:  rrqm/s  wrqm/s   r/s     w/s  rsec/s    wsec/s  avgrq-sz  avgqu-sz   await  svctm   %util
sdb        0.00   13.00  1.00  172.67   16.00  31469.67    181.30      0.89    5.15   0.10    1.80
sdd        0.00   20.67  1.33  159.00   21.33  27435.67    171.25      0.85    5.29   0.13    2.03
sdc        0.00   27.33  0.67  212.67    8.00  31138.00    146.00      0.95    4.47   0.23    4.83
sde        0.00   11.00  0.33   98.00    5.33  15908.33    161.83      0.29    3.00   0.08    0.83
sdf        0.00   22.00  0.33  125.33    5.33  21594.67    171.88      0.50    4.10   0.11    1.33
sdg        0.00   31.33  0.33  137.67    5.33  16311.67    118.24      0.24    4.07   0.07    0.90
sdh        0.00    2.67  1.67  173.33   26.67  35229.00    201.46    118.39  650.48   5.71  100.00
sdi        0.00   18.00  0.00  232.67    0.00  46053.00    197.94    116.51  231.63   4.19   97.50
sdj        0.00   21.33  0.00  138.33    0.00  24360.67    176.10      0.71    4.99   0.22    3.10
sdk        0.00    7.67  0.00  124.67    0.00  22994.67    184.45      0.54    4.35   0.14    1.73
sdl        0.00   77.00  3.00  278.67   48.00  37646.33    133.83      1.22    4.31   0.14    3.90
sdm        0.00    2.67  0.33   70.00    2.67  12532.00    178.22      0.24    3.41   0.08    0.57
sda        0.00   57.33  0.00   21.00    0.00    626.67     29.84      0.02    0.73   0.21    0.43

3) With 36 x 4TB SAS disks we get: Average requests per second: 784.6 with -u 100
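
The iostat sample under point 2 can also be checked mechanically. The following is a minimal sketch, assuming the column layout shown in that sample; the function names and the 90 % threshold are illustrative choices, not part of any Ceph tooling. Only a few rows from the sample are embedded to keep it short.

```python
# A few rows copied from the iostat sample in this message.
IOSTAT_SAMPLE = """\
Device: rrqm/s wrqm/s r/s w/s rsec/s wsec/s avgrq-sz avgqu-sz await svctm %util
sdb 0.00 13.00 1.00 172.67 16.00 31469.67 181.30 0.89 5.15 0.10 1.80
sdh 0.00 2.67 1.67 173.33 26.67 35229.00 201.46 118.39 650.48 5.71 100.00
sdi 0.00 18.00 0.00 232.67 0.00 46053.00 197.94 116.51 231.63 4.19 97.50
sdj 0.00 21.33 0.00 138.33 0.00 24360.67 176.10 0.71 4.99 0.22 3.10
"""


def parse_iostat(text):
    """Return {device: {column: value}} from whitespace-separated iostat rows."""
    lines = [ln.split() for ln in text.splitlines() if ln.strip()]
    header, rows = lines[0], lines[1:]
    columns = header[1:]  # drop the leading "Device:" label
    return {row[0]: dict(zip(columns, map(float, row[1:]))) for row in rows}


def saturated(stats, threshold=90.0):
    """List devices whose %util is at or above the (assumed) threshold."""
    return sorted(dev for dev, s in stats.items() if s["%util"] >= threshold)


if __name__ == "__main__":
    print(saturated(parse_iostat(IOSTAT_SAMPLE)))
```

In the full sample only sdh and sdi sit near 100 % utilisation with a long queue (avgqu-sz above 100), while the other data disks stay below 5 %, which is what prompts the distribution question below.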

I wonder:

- where the Swift data is written

- how we could achieve a better distribution over the disks

ceph osd dump | grep 'rep size'

pool 0 'data' rep size 2 min_size 1 crush_ruleset 0 object_hash rjenkins pg_num 64 pgp_num 64 last_change 1 owner 0 crash_replay_interval 45

pool 1 'metadata' rep size 2 min_size 1 crush_ruleset 1 object_hash rjenkins pg_num 64 pgp_num 64 last_change 1 owner 0

pool 2 'rbd' rep size 2 min_size 1 crush_ruleset 2 object_hash rjenkins pg_num 64 pgp_num 64 last_change 1 owner 0

pool 3 '' rep size 2 min_size 1 crush_ruleset 0 object_hash rjenkins pg_num 8 pgp_num 8 last_change 129 owner 0

pool 4 '.rgw.root' rep size 2 min_size 1 crush_ruleset 0 object_hash rjenkins pg_num 1000 pgp_num 8 last_change 2249 owner 0

pool 5 '.rgw' rep size 2 min_size 1 crush_ruleset 0 object_hash rjenkins pg_num 1000 pgp_num 8 last_change 2242 owner 0

pool 6 '.rgw.gc' rep size 2 min_size 1 crush_ruleset 0 object_hash rjenkins pg_num 1000 pgp_num 8 last_change 2245 owner 0

pool 7 '.users.uid' rep size 2 min_size 1 crush_ruleset 0 object_hash rjenkins pg_num 8 pgp_num 8 last_change 136 owner 18446744073709551615

pool 8 '.users' rep size 2 min_size 1 crush_ruleset 0 object_hash rjenkins pg_num 8 pgp_num 8 last_change 138 owner 18446744073709551615

pool 9 '.rgw.buckets.index' rep size 2 min_size 1 crush_ruleset 0 object_hash rjenkins pg_num 1000 pgp_num 8 last_change 2239 owner 0

pool 10 '.rgw.buckets' rep size 2 min_size 1 crush_ruleset 0 object_hash rjenkins pg_num 1000 pgp_num 1000 last_change 2236 owner 0

pool 14 '.users.swift' rep size 2 min_size 1 crush_ruleset 0 object_hash rjenkins pg_num 8 pgp_num 8 last_change 2643 owner 18446744073709551615
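
One thing that stands out in this dump is that several pools list pg_num 1000 but pgp_num 8. As far as I understand, data placement follows pgp_num, so a pool in that state may still be spread over only a handful of placement groups. The sketch below simply flags such pools; the regular expression assumes the 2013-era `ceph osd dump` line format shown above, and the function name is hypothetical.

```python
import re

# Four lines copied from the dump above.
OSD_DUMP_SAMPLE = """\
pool 5 '.rgw' rep size 2 min_size 1 crush_ruleset 0 object_hash rjenkins pg_num 1000 pgp_num 8 last_change 2242 owner 0
pool 8 '.users' rep size 2 min_size 1 crush_ruleset 0 object_hash rjenkins pg_num 8 pgp_num 8 last_change 138 owner 18446744073709551615
pool 9 '.rgw.buckets.index' rep size 2 min_size 1 crush_ruleset 0 object_hash rjenkins pg_num 1000 pgp_num 8 last_change 2239 owner 0
pool 10 '.rgw.buckets' rep size 2 min_size 1 crush_ruleset 0 object_hash rjenkins pg_num 1000 pgp_num 1000 last_change 2236 owner 0
"""

# Assumed line layout: pool <id> '<name>' ... pg_num <n> pgp_num <m> ...
POOL_RE = re.compile(
    r"^pool \d+ '(?P<name>[^']*)' .*?\bpg_num (?P<pg>\d+) pgp_num (?P<pgp>\d+)"
)


def mismatched_pools(dump):
    """Return {pool_name: (pg_num, pgp_num)} for pools where the two differ."""
    result = {}
    for line in dump.splitlines():
        match = POOL_RE.match(line.strip())
        if match and int(match["pg"]) != int(match["pgp"]):
            result[match["name"]] = (int(match["pg"]), int(match["pgp"]))
    return result


if __name__ == "__main__":
    for name, (pg, pgp) in mismatched_pools(OSD_DUMP_SAMPLE).items():
        print(f"{name}: pg_num={pg} pgp_num={pgp}")
```

If a pool shows up here, raising its pgp_num to match pg_num (ceph osd pool set <pool> pgp_num <n>) is probably worth trying; expect data movement while the placement groups are re-placed.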

From: Kuo Hugo [mailto:tonytkdk@xxxxxxxxx]

Sent: Wednesday, 11 September 2013 12:15

To: Fuchs, Andreas (SwissTXT); ceph-users@xxxxxxxxxxxxxx

Subject: Re: [RadosGW] Performance for Concurrency Connections

For reference:

Benchmark result

Could someone help me improve the performance of the high-concurrency use case?

Any suggestions would be much appreciated!

+Hugo Kuo+

2013/9/11 Kuo Hugo <tonytkdk@xxxxxxxxx>

Export the needed variables with:

export ST_AUTH=http://p01-2/auth

export ST_USER=demo:swift

export ST_KEY=BHL1OxwdC2o737QPAOFR90b8oruqr\/aZJDvW8hAJ
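
A minimal sketch of what these variables feed into, assuming the gateway answers Swift v1.0-style auth at the /auth path (as the ST_AUTH value suggests): the client sends the user and key as X-Auth-User and X-Auth-Key headers and reads X-Storage-Url and X-Auth-Token from the response. The helper names are hypothetical and not part of ssbench or the swift client; the code is written for Python 3.

```python
import urllib.request
from urllib.parse import urlsplit, urlunsplit


def auth_url(endpoint):
    """Append /auth when the endpoint is a bare host (hypothetical helper)."""
    parts = urlsplit(endpoint)
    path = parts.path.rstrip("/") or "/auth"
    return urlunsplit((parts.scheme, parts.netloc, path, "", ""))


def auth_headers(user, key):
    """Headers used by Swift v1.0-style auth."""
    return {"X-Auth-User": user, "X-Auth-Key": key}


def get_token(endpoint, user, key):
    """Perform the auth GET and return (storage_url, token).

    Needs a reachable gateway, so it is not exercised here.
    """
    request = urllib.request.Request(
        auth_url(endpoint), headers=auth_headers(user, key)
    )
    with urllib.request.urlopen(request) as response:
        return (
            response.headers.get("X-Storage-Url"),
            response.headers.get("X-Auth-Token"),
        )


if __name__ == "__main__":
    # The bare host from the failing ssbench command quoted further down
    # versus the ST_AUTH value exported above.
    print(auth_url("http://10.100.218.131"))
    print(auth_url("http://p01-2/auth"))
```

With a bare host such as the one in the failing ssbench command quoted further down, the request goes to the gateway root, which would be consistent with the "Auth GET failed ... 200 OK" error there: the server answers, but presumably without the token headers.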

+Hugo Kuo+

2013/9/11 Fuchs, Andreas (SwissTXT) <Andreas.Fuchs@xxxxxxxxxxx>

Hi Hugo

Thanks for your reply.

I have a pretty similar setup; the only differences are:

1) 12 disks per node, so a total of 36 disks/OSDs

2) The SSDs I got (one per server) are no faster than a single disk, so putting the journals of 12 OSDs on one of those slow SSDs creates a bottleneck. For the moment we keep the journals on the OSD disks.

3) Tests on RADOS block devices look promising

But I'm stuck with ssbench and permissions. I have a rados user which I can successfully test with Cyberduck, and I created a swift subuser, but when I start ssbench with:

ssbench-master run-scenario -f 1kb-put.sc -u 1 -o 10000 -k --workers 1 -A http://10.100.218.131 -U testuser:swift -K O3AdvL9OINHX2fDGeUeSf+GVfvq3YUrzR+BRHM32

I get

INFO:Spawning local ssbench-worker (logging to /tmp/ssbench-worker-local-0.log) with ssbench-worker --zmq-host 127.0.0.1 --zmq-work-port 13579 --zmq-results-port 13580 --concurrency 1 --batch-size 1 0

INFO:Starting scenario run for "1KB-put"

Traceback (most recent call last):
  File "/usr/bin/ssbench-master", line 597, in <module>
    args.func(args)
  File "/usr/bin/ssbench-master", line 222, in run_scenario
    run_results=run_results)
  File "/usr/lib/python2.6/site-packages/ssbench/master.py", line 320, in run_scenario
    storage_urls, c_token = self._authenticate(auth_kwargs)
  File "/usr/lib/python2.6/site-packages/ssbench/master.py", line 280, in _authenticate
    storage_url, token = client.get_auth(**auth_kwargs)
  File "/usr/lib/python2.6/site-packages/ssbench/swift_client.py", line 294, in get_auth
    kwargs.get('snet'))
  File "/usr/lib/python2.6/site-packages/ssbench/swift_client.py", line 220, in get_auth_1_0
    http_reason=resp.reason)
ssbench.swift_client.ClientException: Auth GET failed: http://10.100.218.131:80 200 OK

How did you declare the user and key?

Regards

Andi

From: Kuo Hugo [mailto:tonytkdk@xxxxxxxxx]

Sent: Tuesday, 10 September 2013 17:59

To: Fuchs, Andreas (SwissTXT)

Subject: Re: [RadosGW] Performance for Concurrency Connections

Hi Andreas,

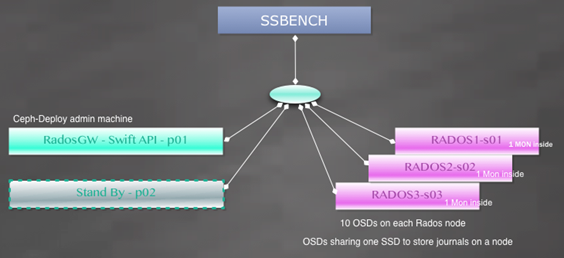

1) I deployed the cluster with the *ceph-deploy* tool from node p01.

2) Three monitor servers are distributed across the three RADOS nodes (s01, s02, s03).

3) Each node has a 120GB SSD, partitioned into 10 partitions for the OSD journals (sdf1~sdf10, GPT).

4) Each OSD uses a single HDD. There are 10 OSDs per node, so we have 30 OSDs in total.

5) Checked the cluster status with ceph -w; it looks good.

6) Installed RadosGW on p01.

7) Created .rgw & .rgw.buckets manually with size=3 min_size=2.

8) Added user demo and sub-user demo:swift.

9) Installed ssbench and the swift common tools on the SSBENCH node.

10) Ran ssbench with 1KB objects.

[1kb-put.sc scenario sample file]

{
  "name": "1KB-put",
  "sizes": [{
    "name": "1KB",
    "size_min": 1024,
    "size_max": 1024
  }],
  "initial_files": {
    "1KB": 1000
  },
  "operation_count": 500,
  "crud_profile": [1, 0, 0, 0],
  "user_count": 10
}
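
To repeat the test with other object sizes, a scenario file like the one above could be generated rather than edited by hand. This is only a sketch: the function name and the output file name are made up for illustration, and it assumes that crud_profile holds the relative weights of create, read, update and delete operations, so [1, 0, 0, 0] means PUT-only.

```python
import json


def make_put_scenario(size_bytes, label, initial_files=1000,
                      operation_count=500, user_count=10):
    """Build a PUT-only ssbench scenario dict for a single object size."""
    return {
        "name": f"{label}-put",
        "sizes": [
            {"name": label, "size_min": size_bytes, "size_max": size_bytes}
        ],
        "initial_files": {label: initial_files},
        "operation_count": operation_count,
        # Assumed order: create, read, update, delete.
        "crud_profile": [1, 0, 0, 0],
        "user_count": user_count,
    }


if __name__ == "__main__":
    # Hypothetical output file for a 4KB variant of the scenario.
    with open("4kb-put.sc", "w") as handle:
        json.dump(make_put_scenario(4096, "4KB"), handle, indent=2)
```

Calling make_put_scenario(1024, "1KB") reproduces the sample file above. As in the command below, -u and -o on the ssbench-master command line are used to set the user count and operation count for the run.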

[My ssbench command]

ssbench-master run-scenario -f 1kb-put.sc -u 100 -o 10000 -k --workers 20

The -u parameter sets the number of concurrent clients.

+Hugo Kuo+

2013/9/10 Fuchs, Andreas (SwissTXT) <Andreas.Fuchs@xxxxxxxxxxx>

Hi Hugo

I have exactly the same setup as you. Can you provide some more details on how you set up the test? I would really like to reproduce and verify it.

Also, our radosgw is publicly reachable at 193.218.100.130; maybe you have a minute or two to benchmark our radosgw :)

Regards

Andi

From: ceph-users-bounces@xxxxxxxxxxxxxx [mailto:ceph-users-bounces@xxxxxxxxxxxxxx] On Behalf Of Kuo Hugo

Sent: Tuesday, 10 September 2013 15:26

To: ceph-users@xxxxxxxxxxxxxx

Subject: [RadosGW] Performance for Concurrency Connections

Hi folks,

I'm doing some performance benchmarking of RadosGW.

My benchmark tools are ssbench & swift-bench.

I found that the best reqs/sec performance is at concurrency 100.

32 CPU threads on RadosGW

24 CPU threads on each Rados Node

The Network is all 10Gb

For 1KB object PUT :

Concurrency-50 : 538 reqs/sec

Concurrency-100 : 1159.1 reqs/sec

Concurrency-200 : 502.5 reqs/sec

Concurrency-500 : 204 reqs/sec

Concurrency-1000 : 153 reqs/sec
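
A rough way to read these numbers is Little's law: if every client always has one request in flight (an assumption about how the benchmark drives load, not something measured here), the average time per request is about concurrency divided by throughput. The sketch below applies that to the figures above; the function names are illustrative.

```python
# Concurrency -> requests per second, copied from the list above.
RESULTS = {50: 538.0, 100: 1159.1, 200: 502.5, 500: 204.0, 1000: 153.0}


def best_concurrency(results):
    """Concurrency level with the highest throughput."""
    return max(results, key=results.get)


def implied_latency(results):
    """Average seconds per request, assuming clients are always busy."""
    return {clients: clients / rate for clients, rate in results.items()}


if __name__ == "__main__":
    print("peak at concurrency", best_concurrency(RESULTS))
    for clients, seconds in sorted(implied_latency(RESULTS).items()):
        print(f"{clients:>5} clients: ~{seconds:.3f} s per request")
```

Under that assumption the implied time per request is roughly 0.09 s at 100 clients and about 6.5 s at 1000 clients, so beyond the peak the extra clients appear to be mostly waiting in a queue somewhere rather than adding throughput.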

I think the bottleneck is on RadosGW. How can I improve it for high-concurrency cases?

Much appreciated.

+Hugo Kuo+

_______________________________________________ ceph-users mailing list ceph-users@xxxxxxxxxxxxxx http://lists.ceph.com/listinfo.cgi/ceph-users-ceph.com