export ST_AUTH=http://p01-2/auth
export ST_USER=demo:swift
export ST_KEY=BHL1OxwdC2o737QPAOFR90b8oruqr\/aZJDvW8hAJ
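These three variables are the ones the swift command-line client reads for v1.0 auth. As a minimal sketch, assuming plain Swift v1.0 auth as RadosGW serves it, they become a single GET to the auth URL that carries X-Auth-User and X-Auth-Key headers. The helper name and the path check are hypothetical illustrations, not part of ssbench or swiftclient, and the key below is a placeholder.

```python
import os
from urllib.parse import urlsplit


def build_v1_auth_request(env=None):
    """Return (url, headers) for a Swift v1.0 auth GET built from ST_* variables.

    Hypothetical helper: it only prepares the request and sends nothing.
    """
    env = os.environ if env is None else env
    auth_url = env["ST_AUTH"]
    # Assumption: a v1.0 auth URL carries an explicit path such as /auth;
    # a bare host URL may reach the storage API instead of the auth handler.
    if urlsplit(auth_url).path in ("", "/"):
        raise ValueError(f"ST_AUTH has no auth path: {auth_url!r}")
    headers = {
        "X-Auth-User": env["ST_USER"],  # account:subuser, e.g. demo:swift
        "X-Auth-Key": env["ST_KEY"],    # the subuser's Swift secret key
    }
    return auth_url, headers


if __name__ == "__main__":
    # Placeholder key; substitute the real secret from your own setup.
    url, headers = build_v1_auth_request({
        "ST_AUTH": "http://p01-2/auth",
        "ST_USER": "demo:swift",
        "ST_KEY": "example-secret",
    })
    print(url, headers)
```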

Hi Hugo

Thanks for your reply.

I have a pretty similar setup; the only differences are:

1) 12 disks per node, so a total of 36 disks/OSDs.

2) The SSDs I have (one per server) are not faster than a single disk, so putting the journals of 12 OSDs on one of those slow SSDs creates a bottleneck. For the moment we have the journals on the OSD disks.

3) Tests on RADOS block devices look promising.
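Point 2 can be made concrete with a rough model. As far as I understand Ceph's FileStore journaling, every write lands in the OSD journal before the data disk, so one shared journal SSD caps the whole node. The sketch assumes device bandwidth is the only limit and uses made-up figures (100 MB/s for both HDD and SSD); the function name is hypothetical.

```python
def node_write_ceiling_mb_s(n_osds, hdd_mb_s, ssd_mb_s, journals_on_ssd):
    """Rough upper bound on a node's sustained write bandwidth in MB/s.

    Simplified model (assumption): every byte is written once to the
    journal and once to the data disk, and device bandwidth is the only limit.
    """
    if journals_on_ssd:
        # All journal writes funnel through the single shared SSD, while the
        # data writes are spread over the HDDs.
        return min(ssd_mb_s, n_osds * hdd_mb_s)
    # Journal co-located on each HDD: each disk absorbs every byte twice.
    # Seek overhead from interleaving is ignored, so this is optimistic.
    return n_osds * hdd_mb_s / 2


# Hypothetical figures: 12 HDDs and one SSD, each good for about 100 MB/s.
print(node_write_ceiling_mb_s(12, 100, 100, journals_on_ssd=True))   # 100
print(node_write_ceiling_mb_s(12, 100, 100, journals_on_ssd=False))  # 600.0
```

Under these assumptions the co-located layout has the higher ceiling, matching the decision to keep journals on the OSD disks; the comparison flips once the SSD can sustain more than about half the combined HDD bandwidth.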

But I'm stuck with ssbench and permissions. I have a rados user which I can successfully test with Cyberduck, and I created a Swift subuser, but when I start ssbench with:

ssbench-master run-scenario -f 1kb-put.sc -u 1 -o 10000 -k --workers 1 -A http://10.100.218.131 -U testuser:swift -K O3AdvL9OINHX2fDGeUeSf+GVfvq3YUrzR+BRHM32

I get

INFO:Spawning local ssbench-worker (logging to /tmp/ssbench-worker-local-0.log) with ssbench-worker --zmq-host 127.0.0.1 --zmq-work-port 13579 --zmq-results-port 13580 --concurrency 1 --batch-size 1 0

INFO:Starting scenario run for "1KB-put"

Traceback (most recent call last):
  File "/usr/bin/ssbench-master", line 597, in <module>
    args.func(args)
  File "/usr/bin/ssbench-master", line 222, in run_scenario
    run_results=run_results)
  File "/usr/lib/python2.6/site-packages/ssbench/master.py", line 320, in run_scenario
    storage_urls, c_token = self._authenticate(auth_kwargs)
  File "/usr/lib/python2.6/site-packages/ssbench/master.py", line 280, in _authenticate
    storage_url, token = client.get_auth(**auth_kwargs)
  File "/usr/lib/python2.6/site-packages/ssbench/swift_client.py", line 294, in get_auth
    kwargs.get('snet'))
  File "/usr/lib/python2.6/site-packages/ssbench/swift_client.py", line 220, in get_auth_1_0
    http_reason=resp.reason)
ssbench.swift_client.ClientException: Auth GET failed: http://10.100.218.131:80 200 OK
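The "200 OK" in this exception is confusing at first. As far as I know, python-swiftclient's v1.0 auth (which ssbench's swift_client module appears to be derived from) does not accept a 2xx on its own: if the response has a body but no X-Storage-Url header, it still reports "Auth GET failed". The sketch below mimics that kind of check; the names are hypothetical and ssbench's exact logic may differ. Worth comparing: ST_AUTH at the top of this message points at http://p01-2/auth, while the -A URL in the failing command has no path, and the error shows none after :80.

```python
class AuthError(Exception):
    """Raised when a v1.0 auth response cannot be used."""


def check_v1_auth_response(status, headers, body):
    """Return (storage_url, token) from a v1.0 auth response or raise AuthError.

    Simplified sketch; not the actual ssbench or swiftclient code.
    """
    # HTTP header names are case-insensitive, so normalise them first.
    h = {name.lower(): value for name, value in headers.items()}
    storage_url = h.get("x-storage-url")
    token = h.get("x-auth-token") or h.get("x-storage-token")
    if not 200 <= status < 300:
        raise AuthError(f"Auth GET failed: {status}")
    if body and not storage_url:
        # A 2xx with a body but no X-Storage-Url usually means the request
        # hit an ordinary page rather than the auth endpoint.
        raise AuthError(f"Auth GET failed: {status} (no X-Storage-Url header)")
    return storage_url, token
```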

How did you declare the user and key?

Regards

Andi

From: Kuo Hugo [mailto:tonytkdk@xxxxxxxxx]

Sent: Tuesday, 10 September 2013 17:59

To: Fuchs, Andreas (SwissTXT)

Subject: Re: [RadosGW] Performance for Concurrency Connections

Hi Andreas,

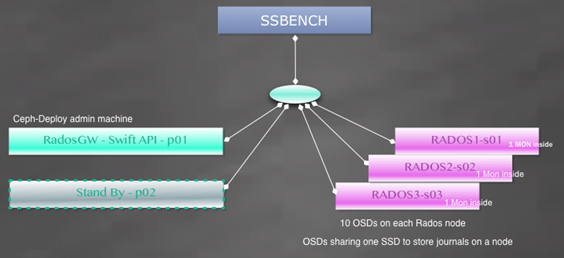

1) I deployed the cluster with the *ceph-deploy* tool from node p01.

2) Three monitor servers, distributed across the three RADOS nodes (s01, s02, s03).

3) Each node has a 120 GB SSD split into 10 GPT partitions (sdf1 to sdf10) for the OSD journals.

4) Each OSD uses a single HDD. There are 10 OSDs per node, so we have 30 OSDs in total.

5) Checked the cluster status with ceph -w; it looks good.

6) Installed RadosGW on p01.

7) Created the .rgw and .rgw.buckets pools manually with size=3 and min_size=2.

8) Added user demo and subuser demo:swift.

9) Installed ssbench and the swift command-line tool on the SSBENCH node.

10) Ran ssbench with 1 KB objects.
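The numbers in steps 3, 4 and 7 can be cross-checked with a little arithmetic. A minimal sketch; the helper name is hypothetical and the journal size ignores GPT and alignment overhead.

```python
def cluster_layout(nodes, osds_per_node, ssd_gb, journal_parts, size, min_size):
    """Return (total OSDs, GB per journal partition, replicas that may be down)."""
    total_osds = nodes * osds_per_node
    # Even split of the SSD; real partitions are slightly smaller because of
    # the partition table and alignment.
    journal_gb = ssd_gb / journal_parts
    # A placement group keeps serving I/O while at least min_size replicas
    # are up, so size - min_size copies can be missing.
    replicas_down_ok = size - min_size
    return total_osds, journal_gb, replicas_down_ok


print(cluster_layout(3, 10, 120, 10, size=3, min_size=2))  # (30, 12.0, 1)
```

For Andi's setup (3 nodes with 12 OSDs each) the same arithmetic gives 36 OSDs.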

[Sample scenario file 1kb-put.sc]

{
    "name": "1KB-put",
    "sizes": [{
        "name": "1KB",
        "size_min": 1024,
        "size_max": 1024
    }],
    "initial_files": {
        "1KB": 1000
    },
    "operation_count": 500,
    "crud_profile": [1, 0, 0, 0],
    "user_count": 10
}
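As I understand ssbench's scenario format, crud_profile gives relative weights for create, read, update and delete, so [1, 0, 0, 0] makes every benchmark operation a create (PUT), and size_min equal to size_max pins objects at exactly 1024 bytes. A small sketch that parses the scenario and prints the resulting mix; the helper name is hypothetical, and the file is inlined to keep it self-contained (json.load on an open 1kb-put.sc would work the same way).

```python
import json

# The scenario above, inlined so the sketch is self-contained.
SCENARIO_TEXT = """
{
    "name": "1KB-put",
    "sizes": [{"name": "1KB", "size_min": 1024, "size_max": 1024}],
    "initial_files": {"1KB": 1000},
    "operation_count": 500,
    "crud_profile": [1, 0, 0, 0],
    "user_count": 10
}
"""


def op_mix(scenario):
    """Convert crud_profile weights into fractions (assumed C, R, U, D order)."""
    names = ("create", "read", "update", "delete")
    weights = scenario["crud_profile"]
    total = sum(weights)
    return {name: weight / total for name, weight in zip(names, weights)}


scenario = json.loads(SCENARIO_TEXT)
print(op_mix(scenario))
# {'create': 1.0, 'read': 0.0, 'update': 0.0, 'delete': 0.0}
for size in scenario["sizes"]:
    fixed = size["size_min"] == size["size_max"]
    print(size["name"], "fixed size" if fixed else "variable size")
```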

[My ssbench command]

ssbench-master run-scenario -f 1kb-put.sc -u 100 -o 10000 -k --workers 20

The -u parameter sets the number of concurrent clients.
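The results in the original post, further down this thread, sweep concurrency from 50 to 1000. A sketch that generates the matching commands: the flags and values are copied from the command above and only -u changes; the helper name is hypothetical, and shlex.join needs Python 3.8 or later. It only prints the commands.

```python
import shlex


def ssbench_argv(scenario_file, concurrency, op_count=10000, workers=20):
    """Build the ssbench-master command line used in this thread for one run."""
    return [
        "ssbench-master", "run-scenario",
        "-f", scenario_file,
        "-u", str(concurrency),  # number of concurrent clients
        "-o", str(op_count),
        "-k",
        "--workers", str(workers),
    ]


for concurrency in (50, 100, 200, 500, 1000):
    print(shlex.join(ssbench_argv("1kb-put.sc", concurrency)))
```

Passing each list to subprocess.run(argv, check=True) instead of printing it would execute the runs one after another.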

+Hugo Kuo+

On 2013/9/10, Fuchs, Andreas (SwissTXT) <Andreas.Fuchs@xxxxxxxxxxx> wrote:

Hi Hugo

I have exactly the same setup as you. Can you provide some more details on how you set up the test? I would really like to reproduce and verify it.

Also, our radosgw is publicly reachable at 193.218.100.130; maybe you have a minute or two to benchmark it :)

Regards

Andi

From: ceph-users-bounces@xxxxxxxxxxxxxx [mailto:ceph-users-bounces@xxxxxxxxxxxxxx] On Behalf Of Kuo Hugo

Sent: Tuesday, 10 September 2013 15:26

To: ceph-users@xxxxxxxxxxxxxx

Subject: [RadosGW] Performance for Concurrency Connections

Hi folks,

I'm doing some performance benchmarking of RadosGW.

My benchmark tools are ssbench and swift-bench.

I found that the best reqs/sec performance is at a concurrency of 100.

32 CPU threads on the RadosGW host
24 CPU threads on each RADOS node
The network is all 10 Gb.

For 1 KB object PUTs:

Concurrency 50: 538 reqs/sec
Concurrency 100: 1159.1 reqs/sec
Concurrency 200: 502.5 reqs/sec
Concurrency 500: 204 reqs/sec
Concurrency 1000: 153 reqs/sec
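One way to read these numbers: if each of the N clients waits for a response before sending its next request (a closed loop with no think time, which is an assumption about how ssbench drives load), Little's law gives the mean latency as N divided by throughput. A quick sketch over the figures above; the function name is hypothetical.

```python
# Throughput figures from the results above (concurrency -> reqs/sec).
RESULTS = {50: 538, 100: 1159.1, 200: 502.5, 500: 204, 1000: 153}


def implied_latency_ms(concurrency, reqs_per_sec):
    """Mean latency implied by Little's law, L = X * R, solved for R.

    Assumes a closed loop: each client sends its next request only after
    the previous one returns, with no think time in between.
    """
    return 1000.0 * concurrency / reqs_per_sec


for concurrency, throughput in RESULTS.items():
    latency = implied_latency_ms(concurrency, throughput)
    print(f"concurrency {concurrency:>4}: {latency:7.1f} ms")
```

This gives roughly 86 ms per request at concurrency 100 but about 2.5 s at 500 and 6.5 s at 1000. Latency grows much faster than concurrency, which is consistent with requests queueing in one component rather than being served in parallel, though on its own it does not prove that component is RadosGW.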

I think the bottleneck is in RadosGW. How can I improve it for high-concurrency cases?

Thanks in advance,

+Hugo Kuo+

_______________________________________________
ceph-users mailing list
ceph-users@xxxxxxxxxxxxxx
http://lists.ceph.com/listinfo.cgi/ceph-users-ceph.com