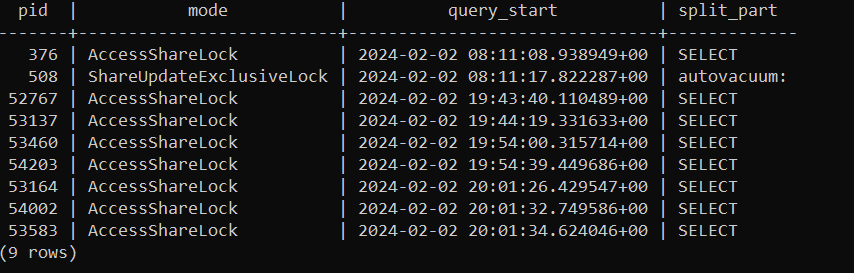

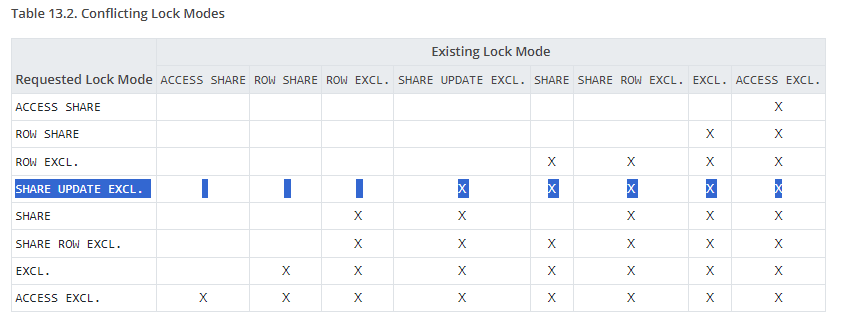

Thanks Greg, I really appreciated you message.I executed the query you shared, and it is showing exactly the same type of lock you talked, it help me a lot. ThanksIt is a usual behavior in some busy databases, I am trying to avoid cancel sessions. I would like also double check my understanding about locks on this documentation ( https://www.postgresql.org/docs/14/explicit-locking.html)Based on my understanding on table 13.2 SHARE UPDATE EXCLUSIVE (ShareUpdateExclusiveLock) should not be blocked by ACCESS SHARE (AccessShareLock). Am I wrong about it? If I am not wrong why it still locking it? Note: Information below come from the link above.Table-Level Lock Modes - SHARE UPDATE EXCLUSIVE (ShareUpdateExclusiveLock) - Conflicts with the SHARE UPDATE EXCLUSIVE, SHARE, SHARE ROW EXCLUSIVE, EXCLUSIVE, and ACCESS EXCLUSIVE lock modes. This mode protects a table against concurrent schema changes and VACUUM runs. - Acquired by VACUUM (without FULL), ANALYZE, CREATE INDEX CONCURRENTLY, CREATE STATISTICS, COMMENT ON, REINDEX CONCURRENTLY, and certain ALTER INDEX and ALTER TABLE variants (for full details see the documentation of these commands). - ACCESS SHARE (AccessShareLock) - Conflicts with the ACCESS EXCLUSIVE lock mode only. - The SELECT command acquires a lock of this mode on referenced tables. In general, any query that only reads a table and does not modify it will acquire this lock mode. Note: This information below come from another database, the first lock was done.select pid, mode, query_start, SPLIT_PART(trim(query),' ', 1) from pg_locks join pg_stat_activity using (pid) where relation::regclass::text = ' mytable' order by 3; pid | mode | query_start | split_part-------+--------------------------+-------------------------------+------------- 376 | AccessShareLock | 2024-02-02 08:11:08.938949+00 | SELECT 508 | ShareUpdateExclusiveLock | 2024-02-02 08:11:17.822287+00 | vacuum 52767 | AccessShareLock | 2024-02-02 19:43:40.110489+00 | SELECT 53137 | AccessShareLock | 2024-02-02 19:44:19.331633+00 | SELECT 53460 | AccessShareLock | 2024-02-02 19:54:00.315714+00 | SELECT 54203 | AccessShareLock | 2024-02-02 19:54:39.449686+00 | SELECT 53164 | AccessShareLock | 2024-02-02 20:01:26.429547+00 | SELECT 54002 | AccessShareLock | 2024-02-02 20:01:32.749586+00 | SELECT 53583 | AccessShareLock | 2024-02-02 20:01:34.624046+00 | SELECT On Friday, February 2, 2024 at 01:37:19 p.m. EST, Greg Sabino Mullane <htamfids@xxxxxxxxx> wrote: On Wed, Jan 31, 2024 at 2:05 PM abrahim abrahao <a_abrahao@xxxxxxxxxxxx> wrote: There is a three-day vacuum freeze on a partition table with wait_event = BufferPin, no transactions (active or idle) older than it that are not blocked, but at least one select query is executing at all times related of this partition table. ... Is there a wait to figure out which session vacuum freeze to wait for? The vacuum needs a chance to get in and make changes to the table, but it's not being blocked at the traditional lock level that shows up in pg_blocking_pids. You can see what is going on with this: select pid, mode, query_start, query from pg_locks join pg_stat_activity using (pid) where relation::regclass::text = 'mytable' order by 3; That may show your vacuum process with a ShareUpdateExclusiveLock and some other processes with other locks, probably AccessShareLock. Those other pids need to all finish or be killed - and not have any overlap between them. In other words, that vacuum process needs to have exclusive access to the table for a split second, no matter if the other process locked the table before or after the vacuum started. One crude solution would be to cancel any other existing backends interested in that table: select pg_cancel_backend(pid), now()-query_start, query from pg_locks join pg_stat_activity using (pid) where relation::regclass::text = 'mytable' and lower(query) !~ 'vacuum'; Not a good long-term solution, but depending on how often the table is updated, you might have other options. Perhaps disable autovacuum for this table and do a manual vacuum (e.g. in a cron script) that kills the other backends as per above, or runs during a time with not-constant reads on the table. Or have something that is able to pause the application. Or if this is a partitioned table that might get dropped in the future or at least not queried heavily, do not worry about vacuuming it now. Cheers,Greg