Greetings,

I was trying to use postgresql database as a backend with Ejabberd XMPP server for load test (Using TSUNG).

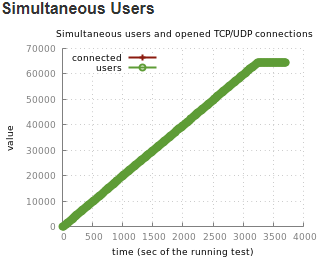

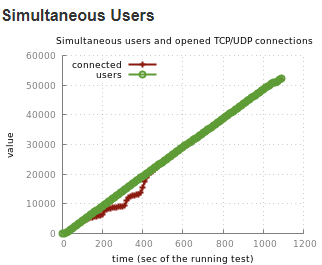

Noticed, while using Mnesia the “simultaneous users and open TCP/UDP connections” graph in Tsung report is showing consistency, but while using Postgres, we see drop in connections during 100 to 500 seconds of runtime, and then recovering and staying consistent.

I have been trying to figure out what the issue could be without any success. I am kind of a noob in this technology, and hoping for some help from the good people from the community to understand the problem and how to fix this. Below are some details..

· Postgres server utilization is low ( Avg load 1, Highest Cpu utilization 26%, lowest freemem 9000)

Tsung graph:

Graph 1: Postgres 12 Backen

Graph 1: Postgres 12 BackenGraph 2: Mnesia backend

· Ejabberd Server: Ubuntu 16.04, 16 GB ram, 4 core CPU.

· Postgres on remote server: same config

· Errors encountered during the same time: error_connect_etimedout (same outcome for other 2 tests)

· Tsung Load: 512 Bytes message size, user arrival rate 50/s, 80k registered users.

· Postgres server utilization is low ( Avg load 1, Highest Cpu utilization 26%, lowest freemem 9000)

· Same tsung.xm and userlist used for the tests in Mnesia and Postgres.

Postgres Configuration used:

shared_buffers = 4GB

effective_cache_size = 12GB

maintenance_work_mem = 1GB

checkpoint_completion_target = 0.9

wal_buffers = 16MB

default_statistics_target = 100

random_page_cost = 4

effective_io_concurrency = 2

work_mem = 256MB

min_wal_size = 1GB

max_wal_size = 2GB

max_worker_processes = 4

max_parallel_workers_per_gather = 2

max_parallel_workers = 4

max_parallel_maintenance_workers = 2max_connections=50000

Kindly help understanding this behavior. Some advice on how to fix this will be a big help .

Thanks,

Dipanjan

Hi Dipanjan

Please do not post to all the postgresql mailing list lets keep this on one list at a time, Keep this on general list

Am i reading this correctly 10,000 to 50,000 open connections.

Postgresql really is not meant to serve that many open connections.

Due to design of Postgresql each client connection can use up to the work_mem of 256MB plus additional for parallel processes. Memory will be exhausted long before 50,0000 connections is reached

I'm not surprised Postgresql and the server is showing issues long before 10K connections is reached. The OS is probably throwing everything to the swap file and see connections dropped or time out.

Should be using a connection pooler to service this kind of load so the Postgresql does not exhaust resources just from the open connections.

On Tue, Feb 25, 2020 at 11:29 AM Dipanjan Ganguly <dipagnjan@xxxxxxxxx> wrote: