On Wed, Apr 13, 2016 at 2:43 PM, drum.lucas@xxxxxxxxx <drum.lucas@xxxxxxxxx> wrote:

Hi all,

At the moment I'm having 100% I/O during the day. My server has SATA HDs, and it can't be changed now.

So, to solve the problem (or at least try) I was thinking about double the RAM, and by doing that, increasing the cache.

The server has 128GB today:

shared_buffers = 51605MB (I'll change it to 32GB)

effective_cache_size = 96760MB

Question:

I know that might not be the best option, but by increasing the RAM and the CACHE would help, right?

We're gonna need better stats. iostat, iotop, vmstat etc will all break down your io between reads and writes, random vs sequential etc.

If you're at 100% IO Util, and iostat says your writing is taking up 20 or 30% of the time, then no, adding cache probably won't help.
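As a rough way to get that read/write split without eyeballing iostat, here is a minimal sketch that diffs two snapshots of /proc/diskstats on Linux. The function names are made up for illustration, and the field positions assume the standard kernel layout (device name in column 3, milliseconds spent reading in column 7, milliseconds spent writing in column 11). Those counters are summed over all requests, so the result is a share of request time, not of wall-clock time.

```python
def parse_diskstats(text):
    """Map device name -> (ms spent reading, ms spent writing)."""
    stats = {}
    for line in text.splitlines():
        fields = line.split()
        if len(fields) < 14:
            continue  # skip blank or malformed lines
        # Assumed layout: [2]=device, [6]=ms reading, [10]=ms writing
        stats[fields[2]] = (int(fields[6]), int(fields[10]))
    return stats


def write_time_share(before, after, device):
    """Fraction of I/O request time spent on writes between two snapshots."""
    read_0, write_0 = parse_diskstats(before)[device]
    read_1, write_1 = parse_diskstats(after)[device]
    read_ms = read_1 - read_0
    write_ms = write_1 - write_0
    total = read_ms + write_ms
    return write_ms / total if total else 0.0


def sample(device, seconds=10, path="/proc/diskstats"):
    """Take two snapshots `seconds` apart and return the write share."""
    import time
    with open(path) as f:
        before = f.read()
    time.sleep(seconds)
    with open(path) as f:
        after = f.read()
    return write_time_share(before, after, device)
```

Calling something like `sample("sdb")` during the busy part of the day gives one number to watch. If it stays high, the disks are busy writing and more cache is unlikely to change much.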

Start looking into adding SSDs. They are literally 20 to 1000 times faster at a lot of io stuff than spinning drives. And they're relatively cheap for what they do.

Note that a software RAID-5 array of SSDs can stomp a hardware controller running RAID-10 with spinning disks easily, and RAID-5 is pretty much as slow as RAID gets.

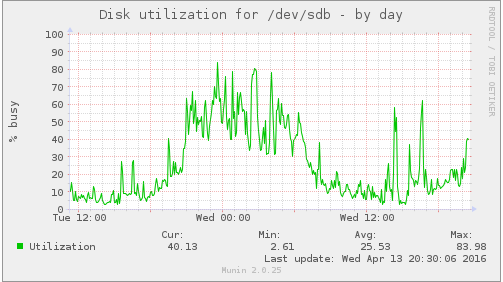

Here's a few minutes of "iostat -xd 10 /dev/sdb" on one of my big servers at work. These machines have a RAID-5 of 10x750GB SSDs under LSI MegaRAIDs with caching turned off (much faster that way). The array created thus is 6.5TB and it's 83% full. Note that archiving and pg_xlog are on separate volumes as well.

Device: rrqm/s wrqm/s r/s w/s rkB/s wkB/s avgrq-sz avgqu-sz await r_await w_await svctm %util

sdb 0.00 236.30 1769.10 5907.30 20366.80 69360.80 23.38 36.38 4.74 0.34 6.06 0.09 71.00


So we're seeing 1769 reads/s, 5907 writes/s and we're reading ~20MB/s and writing ~70MB/s. In the past this kind of performance from spinning disks required massive caching and cabinets full of hard drives. When first testing these boxes we got literally a fraction of this performance with 20 spinning disks in RAID-10, and they had 512GB of RAM. Management at first wanted to throw more memory at it, since these machines go to 1TB RAM. But we tested with 1TB RAM, and the difference was literally a few percentage points going from 512GB to 1TB.

If your iostat output looks anything like mine, with lots of wkB/s and w/s then adding memory isn't going to do much.
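To put a number on "lots of wkB/s and w/s", here is a small sketch that parses one device row of `iostat -x` output and works out what share of the operations and of the throughput is writes. The function names are hypothetical. The header and row are the ones from the sample above, and column names vary between sysstat versions, so treat the header as an assumption to check against your own output.

```python
HEADER = ("Device: rrqm/s wrqm/s r/s w/s rkB/s wkB/s avgrq-sz avgqu-sz "
          "await r_await w_await svctm %util")
SAMPLE = ("sdb 0.00 236.30 1769.10 5907.30 20366.80 69360.80 23.38 36.38 "
          "4.74 0.34 6.06 0.09 71.00")


def parse_iostat_row(header, row):
    """Return (device, {column name: value}) for one iostat -x row."""
    names = header.split()[1:]   # drop the leading "Device:" label
    parts = row.split()
    values = [float(v) for v in parts[1:]]
    return parts[0], dict(zip(names, values))


def write_shares(metrics):
    """Return (write share of IOPS, write share of throughput)."""
    iops = metrics["r/s"] + metrics["w/s"]
    kb = metrics["rkB/s"] + metrics["wkB/s"]
    iops_share = metrics["w/s"] / iops if iops else 0.0
    kb_share = metrics["wkB/s"] / kb if kb else 0.0
    return iops_share, kb_share


device, metrics = parse_iostat_row(HEADER, SAMPLE)
iops_share, kb_share = write_shares(metrics)
print(f"{device}: {iops_share:.0%} of IOPS and {kb_share:.0%} of kB/s are writes")
```

On the sample row both shares come out at roughly 77%, which is the kind of write-heavy profile where extra RAM for read caching has little to work with.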