You need to find why the autovacuum is not completing. Use the following to find the tables that have not been updated - its more than likely to be the xmin horizon issue due to long running uncommitted transactions.

WITH constants AS (SELECT current_setting('autovacuum_vacuum_scale_factor')::float8 AS autovacuum_vacuum_scale_factor, current_setting('autovacuum_vacuum_threshold')::float8 AS autovacuum_vacuum_threshold, 1000000 AS autovacuum_vacuum_threshold_trigger ) SELECT t.schemaname AS "SchemaName", t.relname AS "TableName", TO_CHAR(t.n_tup_ins, 'fm999G999G999G990') AS "Inserts", TO_CHAR(t.n_tup_upd, 'fm999G999G999G990') AS "Updates", TO_CHAR(t.n_tup_del, 'fm999G999G999G990') AS "Deletes", TO_CHAR(c.reltuples, 'fm999G999G999G990') AS "AnalyzedTuples", CASE WHEN c.reltuples < t.n_live_tup THEN '<' WHEN c.reltuples > t.n_live_tup THEN '>' ELSE '' END AS "Stale", TO_CHAR(t.n_live_tup,'fm999G999G999G990') AS "LiveTuples", TO_CHAR(t.n_dead_tup,'fm999G999G999G990') AS "DeadTuples", TO_CHAR(LEAST(99.999,n_dead_tup::float4/GREATEST(1,n_live_tup)::float4*100),'fm990D00%') AS "DeadRatio", --Limit to 1000% TO_CHAR(c.reltuples * COALESCE(SPLIT_PART(c.reloptions[1],'=',2)::float8,autovacuum_vacuum_scale_factor) + autovacuum_vacuum_threshold,'fm999G999G990') AS "AutoVacuumTrigger", CASE WHEN t.n_dead_tup > c.reltuples * COALESCE(SPLIT_PART(c.reloptions[1],'=',2)::float8,autovacuum_vacuum_scale_factor) + autovacuum_vacuum_threshold THEN '*' ELSE '' END AS "ShouldVacuum", TO_CHAR(COALESCE(SPLIT_PART(c.reloptions[1],'=',2)::float8,autovacuum_vacuum_scale_factor)*100,'fm999G990D00%') AS "CurrentScaleFactor", CASE WHEN c.reltuples * COALESCE(SPLIT_PART(c.reloptions[1],'=',2)::float8,autovacuum_vacuum_scale_factor) + autovacuum_vacuum_threshold > autovacuum_vacuum_threshold_trigger THEN TO_CHAR(autovacuum_vacuum_threshold_trigger,'fm999G999G990') --threshold instead of scale factor ELSE TO_CHAR(COALESCE(SPLIT_PART(c.reloptions[1],'=',2)::float8,autovacuum_vacuum_scale_factor)*100,'fm999G990D00%') END AS "SuggestedScaleFactor", t.last_analyze, t.last_autoanalyze, t.last_vacuum, t.last_autovacuum, t.analyze_count AS analyzecount, t.autoanalyze_count AS autoanalyzecount, t.vacuum_count AS vacuumcount, t.autovacuum_count AS autovacuumcount, pg_size_pretty(pg_total_relation_size(c.oid)) AS totalSize, pg_size_pretty(pg_indexes_size(c.oid)) AS indexSize, pg_size_pretty(COALESCE(pg_total_relation_size(c.reltoastrelid),0)) AS ToastSize FROM pg_stat_user_tables AS t JOIN pg_class AS c ON c.relname=t.relname JOIN pg_catalog.pg_namespace AS n ON n.oid = c.relnamespace AND n.nspname=t.schemaname CROSS JOIN constants WHERE (t.n_live_tup > 0 AND t.n_dead_tup > GREATEST(100000,c.reltuples * autovacuum_vacuum_scale_factor + autovacuum_vacuum_threshold) --more than 10k dead tuples or dead tuples exceed threshold ) -- OR c.reltuples * autovacuum_vacuum_scale_factor + autovacuum_vacuum_threshold > autovacuum_vacuum_threshold_trigger -- the autovacuum threshold is over threshold - might need reducing ORDER BY 1,2;

If you manually vacuum verbose analyze for one of the tables this reports on, it should then tell you the minimum xmin value (which is the horizon).

If you then look in pg_stat_activity it should tell you the transactions that are preventing the horizon from moving forward select * from pg_stat_activity where backend_xmin::text::bigint <= <minimum_xmin value here> order by xact_start eg select * from pg_stat_activity where backend_xmin::text::bigint <= 422050991 order by xact_start

regards Captain.Glumbo 😊

From: Avihai Shoham <avihai.shoham@xxxxxxxxx>

Hi All,

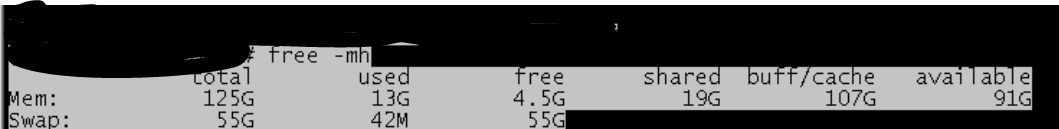

We use postgres 9.6.9 we set the following autovacuum setting , but still the DB grow overtime

autovacuum = on

we have

any idea ? DB size grew from 11G to 25G in 2wks. I read that we may need to increase the max_fsm_pages setting. not sure if it is needed if we have autovacuum or how to do it if needed?

Thank you all Avihai |