Hello,

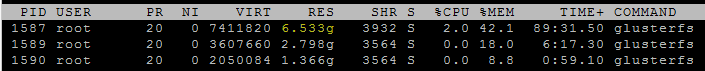

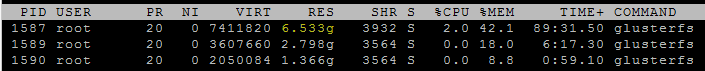

I also experience high memory usage on my gluster clients. Sample :

Can I help in testing/debugging ?

2016-01-12 7:24 GMT+01:00 Soumya Koduri <skoduri@xxxxxxxxxx>:

On 01/11/2016 05:11 PM, Oleksandr Natalenko wrote:

Brief test shows that Ganesha stopped leaking and crashing, so it seems to be good for me.

Thanks for checking.

Nevertheless, back to my original question: what about FUSE client? It is still leaking despite all the fixes applied. Should it be considered another issue?

For the fuse client, I tried vfs drop_caches as suggested by Vijay in an earlier mail. Though all the inodes get purged, I still don't see much of a drop in the memory footprint. I need to investigate what else is consuming so much memory here.
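For anyone who wants to repeat this check, here is a minimal sketch of comparing a client process's resident memory before and after a drop_caches request. It assumes Linux with /proc mounted and root privileges for the write. The function names are hypothetical, and the PID of the FUSE client process has to be supplied by the caller.

```python
import re


def parse_vm_rss_kb(status_text):
    """Extract the VmRSS value (in kB) from /proc/<pid>/status text."""
    match = re.search(r"^VmRSS:\s+(\d+)\s+kB", status_text, re.MULTILINE)
    return int(match.group(1)) if match else None


def read_rss_kb(pid):
    """Read the resident set size of a running process on Linux."""
    with open(f"/proc/{pid}/status") as status_file:
        return parse_vm_rss_kb(status_file.read())


def drop_dentries_and_inodes():
    """Ask the kernel to free reclaimable slab objects (needs root)."""
    # Writing "2" requests dentries and inodes only; "3" would also
    # drop the page cache.
    with open("/proc/sys/vm/drop_caches", "w") as ctl:
        ctl.write("2\n")


def rss_change_after_drop(pid):
    """Return (before_kb, after_kb) around a drop_caches request."""
    before = read_rss_kb(pid)
    drop_dentries_and_inodes()
    after = read_rss_kb(pid)
    return before, after
```

Calling `rss_change_after_drop(pid)` as root with the client's PID returns the two readings. It may be worth waiting briefly before taking the second reading, since the client might not release memory immediately.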

Thanks,

Soumya


On 11.01.2016 12:26, Soumya Koduri wrote:

I have made changes to fix the lookup leak in a different way (as discussed with Pranith) and uploaded them in the latest patch set #4 - http://review.gluster.org/#/c/13096/

Please check if it resolves the mem leak and hopefully doesn't result in any assertion :)

Thanks,

Soumya

On 01/08/2016 05:04 PM, Soumya Koduri wrote:

I could reproduce it while testing deep directories within the mount point. I root-caused the issue and had a discussion with Pranith to understand the purpose and recommended way of taking nlookup on inodes.

I shall make changes to my existing fix and post the patch soon.

Thanks for your patience!

-Soumya

On 01/07/2016 07:34 PM, Oleksandr Natalenko wrote:

OK, I've patched GlusterFS v3.7.6 with 43570a01 and 5cffb56b (the most recent revisions) and NFS-Ganesha v2.3.0 with 8685abfc (most recent revision too).

On traversing GlusterFS volume with many files in one folder via NFS mount I get an assertion:

===

ganesha.nfsd: inode.c:716: __inode_forget: Assertion `inode->nlookup >= nlookup' failed.

===
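To illustrate what this assertion guards against, here is a toy model of a lookup counter. It is only a sketch based on the condition in the assertion message, not the GlusterFS source. `ToyInode` and its methods are hypothetical names.

```python
class ToyInode:
    """Toy stand-in for an inode that counts outstanding lookups."""

    def __init__(self):
        self.nlookup = 0

    def lookup(self):
        # Each successful lookup takes one reference on the counter.
        self.nlookup += 1

    def forget(self, nlookup):
        # Mirrors the asserted condition: a forget must never release
        # more lookups than were recorded.
        if self.nlookup < nlookup:
            raise AssertionError("inode->nlookup >= nlookup failed")
        self.nlookup -= nlookup


inode = ToyInode()
inode.lookup()
inode.lookup()
inode.forget(2)       # balanced: counter returns to zero

try:
    inode.forget(1)   # one forget too many trips the check
    tripped = False
except AssertionError:
    tripped = True
```

In this model, a lookup that is never matched by a forget leaves the counter above zero (a leak), while a forget with no matching lookup trips the check, which is the kind of failure reported above.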

I used GDB on NFS-Ganesha process to get appropriate stacktraces:

1. short stacktrace of failed thread:

https://gist.github.com/7f63bb99c530d26ded18

2. full stacktrace of failed thread:

https://gist.github.com/d9bc7bc8f6a0bbff9e86

3. short stacktrace of all threads:

https://gist.github.com/f31da7725306854c719f

4. full stacktrace of all threads:

https://gist.github.com/65cbc562b01211ea5612

GlusterFS volume configuration:

https://gist.github.com/30f0129d16e25d4a5a52

ganesha.conf:

https://gist.github.com/9b5e59b8d6d8cb84c85d

How I mount NFS share:

===

mount -t nfs4 127.0.0.1:/mail_boxes /mnt/tmp -o defaults,_netdev,minorversion=2,noac,noacl,lookupcache=none,timeo=100

===

On Thursday, 7 January 2016, 12:06:42 EET Soumya Koduri wrote:

Entries_HWMark = 500;

Gluster-users mailing list

Gluster-users@xxxxxxxxxxx

http://www.gluster.org/mailman/listinfo/gluster-users
