Hi Atin,

Please find the details below:

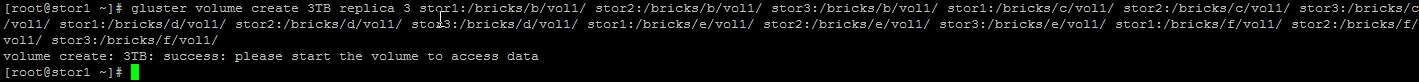

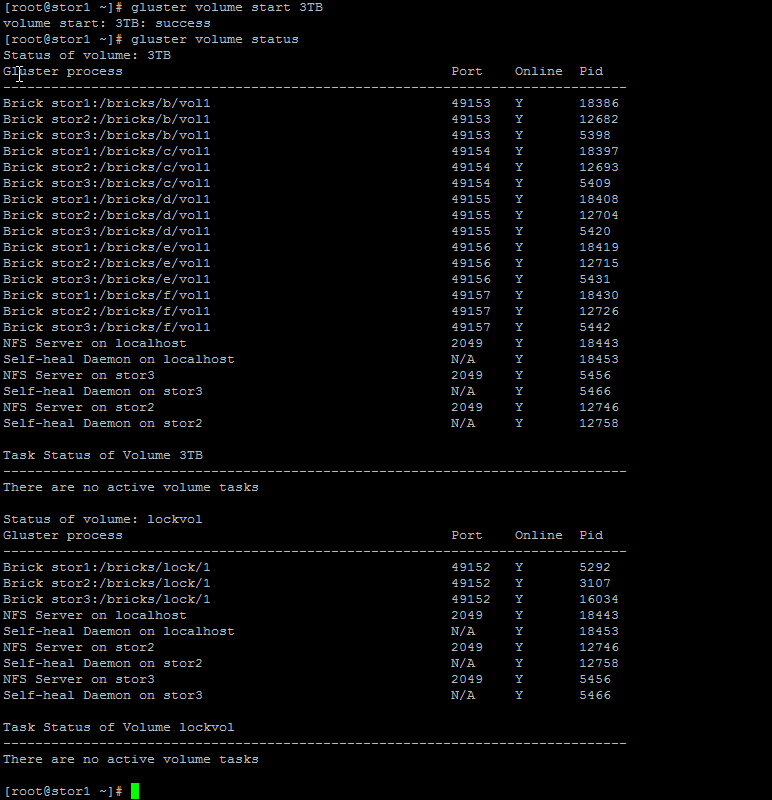

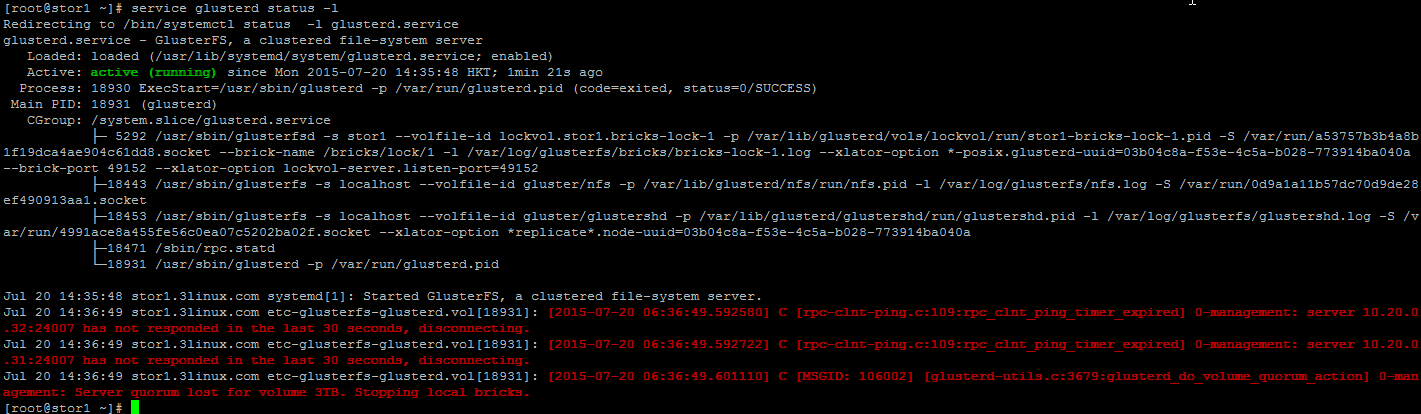

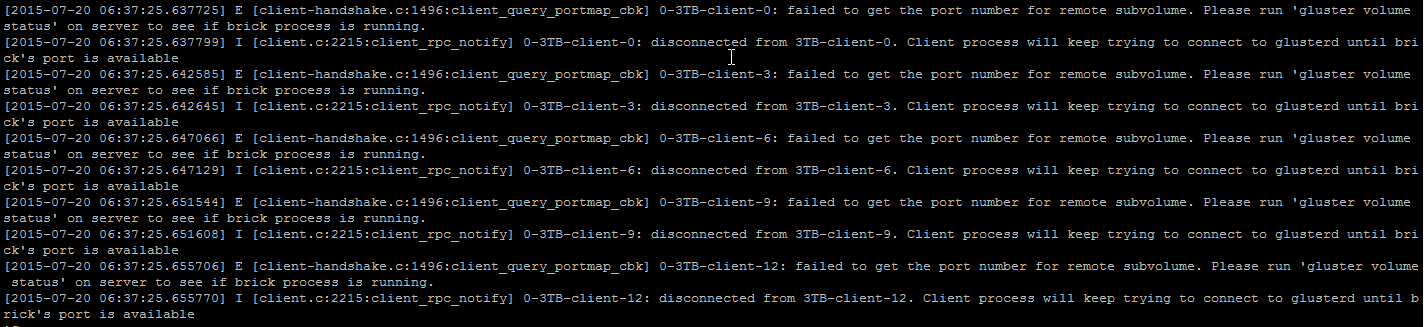

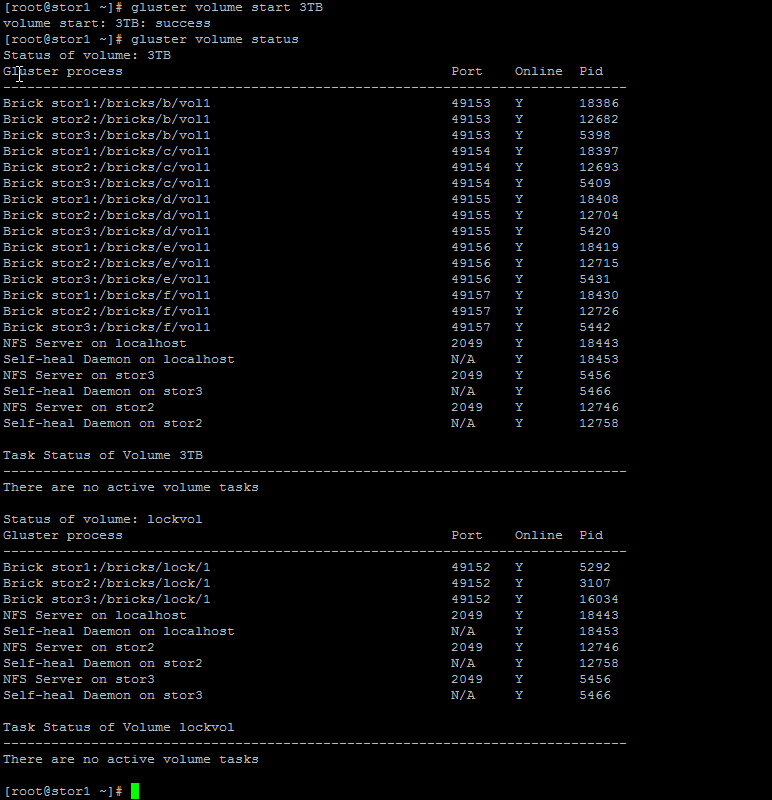

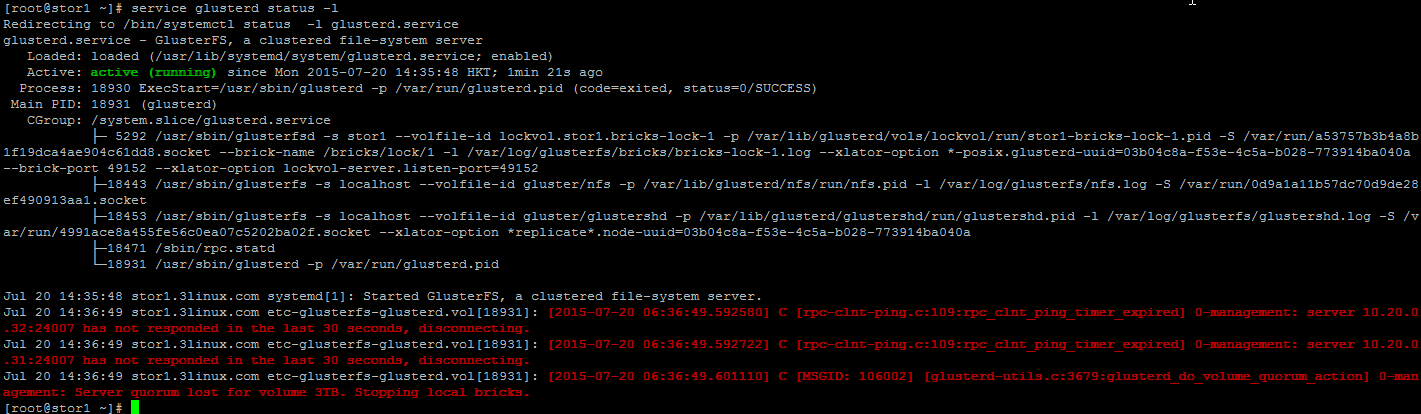

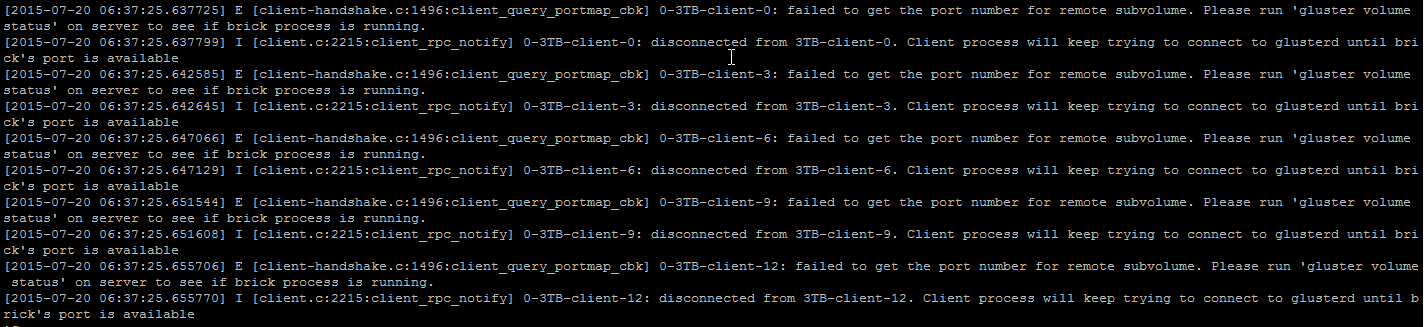

Now, when I set the volume to optimize for virt storage under oVirt and restart the glusterd service on any node, quorum starts failing.

Thanks,

Punit
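For readers following the quorum discussion, here is a minimal sketch of the arithmetic behind the two options set on this volume (`cluster.server-quorum-type: server` and `cluster.quorum-type: auto`). It is not Gluster code. The function names are hypothetical, and the server-side check assumes the default quorum ratio (more than half of the nodes in the trusted pool), since no custom ratio is mentioned in this thread.

```python
from typing import List


def server_quorum_met(active_nodes: int, total_nodes: int) -> bool:
    """Server-side quorum, ASSUMING the default ratio (strictly more than 50%).

    active_nodes: peers whose glusterd is currently reachable (including self).
    total_nodes:  all peers in the trusted storage pool.
    """
    return active_nodes * 2 > total_nodes


def client_quorum_auto_met(bricks_up: List[bool]) -> bool:
    """Client-side quorum for one replica set with cluster.quorum-type: auto.

    bricks_up lists the bricks of the replica set in volume order.
    More than half must be up; with an even replica count, exactly half
    is enough only if the first brick of the set is among those up.
    """
    total = len(bricks_up)
    up = sum(bricks_up)
    if up * 2 > total:
        return True
    return up * 2 == total and bricks_up[0]


if __name__ == "__main__":
    # Three-node pool as in this thread: one glusterd restarting leaves 2 of 3.
    print(server_quorum_met(active_nodes=2, total_nodes=3))
    # One replica set of three bricks with a single brick offline.
    print(client_quorum_auto_met([True, True, False]))
```

Under these assumptions, restarting glusterd on a single node of a three-node pool should leave quorum intact, so a quorum failure at that point suggests the other peers are also seen as disconnected. The glusterd log and `gluster peer status` are the places to confirm that.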

On Mon, Jul 20, 2015 at 10:44 AM, Punit Dambiwal <hypunit@xxxxxxxxx> wrote:

Hi Atin,

Apologies for the delayed response.

1. When you added the brick was the command successful?

>> Yes, it was successful.

2. If volume status is failing, what output is it printing to the console, and what does the glusterd log show?

>> I will reproduce the issue again and update you.

On Mon, Jul 13, 2015 at 11:46 AM, Atin Mukherjee <amukherj@xxxxxxxxxx> wrote:

On 07/13/2015 05:19 AM, Punit Dambiwal wrote:

> Hi Sathees,

>

> With 3 bricks I can get the gluster volume status, but after adding more

> bricks I cannot get the gluster volume status.

The information is still insufficient to analyze the problem.

Further questions:

1. When you added the brick was the command successful?

2. If volume status is failing, what output is it printing to the console,

and what does the glusterd log show?

~Atin
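As a side check on the brick layout quoted further down this thread, the sketch below groups a brick list into replica sets the way a distributed-replicate volume does (consecutive bricks, in the order listed, form one set) and flags any set that places two copies on the same host. The helper names are hypothetical, and the brick list is rebuilt from the `gluster volume info` output in the original report.

```python
from typing import List


def replica_sets(bricks: List[str], replica: int) -> List[List[str]]:
    """Split bricks into consecutive groups of `replica` bricks each."""
    if replica <= 0 or len(bricks) % replica != 0:
        raise ValueError("brick count must be a multiple of the replica count")
    return [bricks[i:i + replica] for i in range(0, len(bricks), replica)]


def sets_sharing_a_host(bricks: List[str], replica: int) -> List[int]:
    """Return 1-based indexes of replica sets with two bricks on one host."""
    flagged = []
    for index, group in enumerate(replica_sets(bricks, replica), start=1):
        hosts = [brick.split(":", 1)[0] for brick in group]
        if len(set(hosts)) < len(hosts):
            flagged.append(index)
    return flagged


# Brick order as reported in the thread: b, c, d, e, f on stor1, stor2, stor3.
bricks = [f"stor{n}:/bricks/{disk}/vol1" for disk in "bcdef" for n in (1, 2, 3)]

if __name__ == "__main__":
    print(len(replica_sets(bricks, 3)))    # number of replica sets
    print(sets_sharing_a_host(bricks, 3))  # empty list means every set spans distinct hosts
```

For the reported layout each of the five sets spans stor1, stor2 and stor3, so the brick ordering itself does not explain the quorum failure.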

> On Sun, Jul 12, 2015 at 11:09 AM, SATHEESARAN <sasundar@xxxxxxxxxx> wrote:

>

>> On 07/11/2015 02:46 PM, Atin Mukherjee wrote:

>>

>>>

>>> On 07/10/2015 03:03 PM, Punit Dambiwal wrote:

>>>

>>>> Hi,

>>>>

>>>> I have deployed a replica 3 storage volume, but I am facing an issue with

>>>> quorum.

>>>>

>>>> Let me elaborate:

>>>>

>>>> 1. I have 3 node machines and every machine has 5 HDDs (bricks). No

>>>> RAID, just JBOD.

>>>> 2. Gluster works fine when I add just 3 HDDs, as below:

>>>>

>>>> B HDD from server 1

>>>> B HDD from server 2

>>>> B HDD from server 3

>>>>

>>>> But when I add more bricks as below:

>>>>

>>>> -----------------------

>>>> [root@stor1 ~]# gluster volume info

>>>>

>>>> Volume Name: 3TB

>>>> Type: Distributed-Replicate

>>>> Volume ID: 5be9165c-3402-4083-b3db-b782da2fb8d8

>>>> Status: Stopped

>>>> Number of Bricks: 5 x 3 = 15

>>>> Transport-type: tcp

>>>> Bricks:

>>>> Brick1: stor1:/bricks/b/vol1

>>>> Brick2: stor2:/bricks/b/vol1

>>>> Brick3: stor3:/bricks/b/vol1

>>>> Brick4: stor1:/bricks/c/vol1

>>>> Brick5: stor2:/bricks/c/vol1

>>>> Brick6: stor3:/bricks/c/vol1

>>>> Brick7: stor1:/bricks/d/vol1

>>>> Brick8: stor2:/bricks/d/vol1

>>>> Brick9: stor3:/bricks/d/vol1

>>>> Brick10: stor1:/bricks/e/vol1

>>>> Brick11: stor2:/bricks/e/vol1

>>>> Brick12: stor3:/bricks/e/vol1

>>>> Brick13: stor1:/bricks/f/vol1

>>>> Brick14: stor2:/bricks/f/vol1

>>>> Brick15: stor3:/bricks/f/vol1

>>>> Options Reconfigured:

>>>> nfs.disable: off

>>>> user.cifs: enable

>>>> auth.allow: *

>>>> performance.quick-read: off

>>>> performance.read-ahead: off

>>>> performance.io-cache: off

>>>> performance.stat-prefetch: off

>>>> cluster.eager-lock: enable

>>>> network.remote-dio: enable

>>>> cluster.quorum-type: auto

>>>> cluster.server-quorum-type: server

>>>> storage.owner-uid: 36

>>>> storage.owner-gid: 36

>>>> --------------------------------

>>>>

>>>> The bricks were added successfully without any error, but after 1 minute quorum failed

>>>> and gluster stopped working.

>>>>

>>> Punit,

>>

>> And what do you mean by quorum failed?

>> What is the effect that you are seeing?

>> Could you provide the output of 'gluster volume status' as well?

>>

>> -- Sathees

>>

>>

>> What do log files say?

>>>

>>>> Thanks,

>>>> Punit

>>>>

>>>>

>>>>

>>>> _______________________________________________

>>>> Gluster-users mailing list

>>>> http://www.gluster.org/mailman/listinfo/gluster-users

>>>>

>>>>

>>

>

~Atin
