Hi folks –

I’m in serious need of help. First, I’m completely new to Gluster and am getting my feet wet because of an emergency power shutdown of our data center, which did not allow for an orderly shutdown of the cluster. The head node OS is RHEL 5.5.

Below is a procedure provided by our former system admin, which I’ve attempted to follow:

Gluster Full Reset

These are the steps to reset Gluster. This should be the last resort for fixing Gluster. If there are issues with a single node, please read the document on how to Remove/Restore a single node from Gluster instead.

-

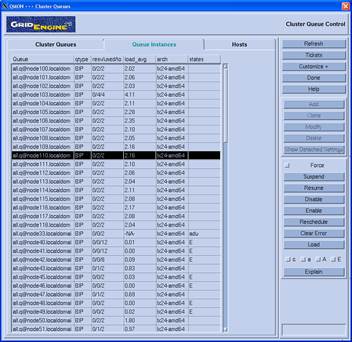

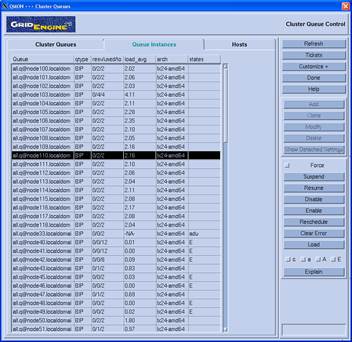

qmon - disable nodes

- Via ssh (use PuTTY or Cygwin), connect to qmon and disable all nodes so that no jobs are scheduled. (This requires root access to the server.)

- Log in to the server and type the command qmon. Click the Queue Control icon, then the Queue Instances tab. Select all active nodes and click the Disable button on the right.

- Unmount scratch: using the act_exec command, unmount /scratch on all nodes in the cluster:

  act_exec -g nodes "umount -l /scratch"

- Stop the gluster daemon on all nodes: act_exec -g nodes "service glusterd stop"

- Remove the /etc/glusterd directory on all nodes in the cluster: act_exec -g nodes "rm -rf /etc/glusterd"

- ssh to node40: from the head node, type ssh node40.

- Start the gluster daemon on this node: service glusterd start

- Stop the volume with the following command: gluster volume stop scratch-vol

- From node40, delete the volume with the command: gluster volume delete scratch-vol

- From the head node, clean up /scratchstore with the command: act_exec -g nodes "rm -rf /scratchstore/*"

- From node40, check the peer status with the command gluster peer status. No peers should be listed; if any are, remove each one with: gluster peer detach (node name). Once no nodes are listed, do the following:

- Start gluster on all nodes (from the head node): act_exec -g nodes "service glusterd start"

- Run gluster peer probe on the nodes to add them to the trusted pool. (See /root/glusterfullreset/range.sh on the head node for the script.)

- From node40, recreate the volume using the following command:

- From node40, start the volume with the command:

  gluster volume start scratch-vol

- From node40, use the following command to recreate the pest and sptr directories under /scratch:

  mkdir /scratch/pest /scratch/sptr

- From node40, give full permissions to the directories:

  chmod -R 777 /scratch/pest /scratch/sptr

- Remount scratch on all nodes.

- From the head node, connect back to qmon and enable all nodes.
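The unmount, stop and cleanup steps above all run from the head node through act_exec, so they can be collected into one script. This is a minimal sketch, assuming act_exec -g nodes behaves as it is used in the procedure; the function names and the DRY_RUN switch are hypothetical additions, not part of the original document, and the directory removal is written as rm -rf /etc/glusterd, a slightly safer form of cd /etc ; rm -rf glusterd. By default it only prints the commands, so nothing changes until DRY_RUN=0 is set.

```bash
#!/usr/bin/env bash
# Sketch only: the unmount / stop / cleanup steps of "Gluster Full Reset",
# run from the head node. on_all_nodes and DRY_RUN are hypothetical helpers;
# act_exec -g nodes is used as in the procedure.

DRY_RUN=${DRY_RUN:-1}   # 1 = print commands only, 0 = really run them

on_all_nodes() {
  # $1 is the command string handed to act_exec for every node in group "nodes"
  if [ "$DRY_RUN" = "1" ]; then
    printf 'act_exec -g nodes "%s"\n' "$1"
  else
    act_exec -g nodes "$1"
  fi
}

gluster_teardown() {
  on_all_nodes "umount -l /scratch"      # lazy unmount of the scratch volume
  on_all_nodes "service glusterd stop"   # stop the gluster daemon
  on_all_nodes "rm -rf /etc/glusterd"    # wipe the glusterd configuration
}
```

Sourcing the file and running gluster_teardown prints the three act_exec lines for review; DRY_RUN=0 gluster_teardown would actually execute them.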
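The peer probe step points to /root/glusterfullreset/range.sh on the head node, which isn't reproduced here. The sketch below is a hypothetical stand-in, not that script: it assumes the nodes are named node<N> (as node40 is) with no zero padding, and that it runs on node40 after glusterd has been started everywhere. node_names, probe_peers, PREFIX, SELF and DRY_RUN are all illustrative names.

```bash
#!/usr/bin/env bash
# Hypothetical stand-in for /root/glusterfullreset/range.sh (NOT the real script).
# ASSUMPTION: hosts are named <PREFIX><N>, e.g. node1 ... node40, no zero padding.

DRY_RUN=${DRY_RUN:-1}     # 1 = print the probe commands only
PREFIX=${PREFIX:-node}    # hostname prefix; adjust to match /etc/hosts

node_names() {
  # Print one hostname per number from $1 to $2 inclusive
  local i
  for i in $(seq "$1" "$2"); do
    printf '%s%s\n' "$PREFIX" "$i"
  done
}

probe_peers() {
  # Probe every named node except the one we are running on
  local self=${SELF:-$(hostname -s)} n
  for n in "$@"; do
    [ "$n" = "$self" ] && continue   # the local node does not need probing
    if [ "$DRY_RUN" = "1" ]; then
      echo "gluster peer probe $n"
    else
      gluster peer probe "$n" || echo "probe failed: $n" >&2
    fi
  done
}
```

On node40, SELF=node40 probe_peers $(node_names 1 40) would print the commands (the 1 to 40 range is a guess at the node count); re-running with DRY_RUN=0 performs the probes, after which gluster peer status shows the result.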

Here are the problems I’m now having:

- When I execute step 5, the gluster daemon appears to start but stops after several seconds.

- If I run act_exec -g nodes "service glusterd start", the daemon keeps running on only a few nodes.

- When I ssh to some nodes, I get these errors:

  -bash: /act/Modules/3.2.6/init/bash: No such file or directory
  -bash: module: command not found

  On other nodes, ssh gives a normal login.
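To see which nodes are affected by each problem, a quick sweep can report, per node, whether glusterd is still running and whether the Modules init file named in the login error exists. This is a minimal sketch, assuming passwordless root ssh from the head node and pgrep on the nodes; check_node, check_nodes, REMOTE_CHECK and SSH_CMD are hypothetical names, and the node list has to be supplied by hand.

```bash
#!/usr/bin/env bash
# Sketch: report glusterd state and Modules init file presence per node.
# ASSUMPTIONS: passwordless root ssh to each node; pgrep installed on nodes.

SSH_CMD=${SSH_CMD:-ssh}   # overridable so the logic can be tested without ssh

# Runs on the remote node; prints e.g. "glusterd=running modules=missing"
REMOTE_CHECK='pgrep -x glusterd >/dev/null && g=running || g=stopped
[ -f /act/Modules/3.2.6/init/bash ] && m=ok || m=missing
echo "glusterd=$g modules=$m"'

check_node() {
  # Print "<node> <status>", or "<node> unreachable" if ssh fails
  local n=$1 out
  out=$($SSH_CMD -o BatchMode=yes -o ConnectTimeout=5 "$n" "$REMOTE_CHECK" 2>/dev/null) \
    || out="unreachable"
  echo "$n $out"
}

check_nodes() {
  local n
  for n in "$@"; do
    check_node "$n"
  done
}
```

For example, check_nodes node1 node2 node40 (hypothetical names) prints one line per node; comparing the modules=missing lines with the glusterd=stopped lines shows whether the two problems hit the same machines.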

Note that when power was restored and the cluster came back up, I found that NTP was not configured correctly (it was using the system date), so I corrected that.
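Since NTP was only fixed after the nodes came back, it may be worth confirming that every node now agrees with the head node on the time. A minimal sketch, assuming passwordless ssh from the head node and GNU date (date +%s) on every host; report_skew, check_clocks, TOLERANCE and SSH_CMD are hypothetical names, and the 2-second tolerance is an arbitrary choice. The measured offset includes the ssh round-trip time, so offsets of a second or so are not meaningful.

```bash
#!/usr/bin/env bash
# Sketch: compare each node's clock with the head node's clock.
# ASSUMPTIONS: passwordless ssh; GNU date supporting +%s on all hosts.

TOLERANCE=${TOLERANCE:-2}   # seconds; arbitrary threshold
SSH_CMD=${SSH_CMD:-ssh}     # overridable for testing

report_skew() {
  # $1 = node, $2 = head epoch seconds, $3 = node epoch seconds
  local n=$1 off=$(( $3 - $2 ))
  local abs=${off#-}          # absolute value: strip a leading minus sign
  if [ "$abs" -le "$TOLERANCE" ]; then
    echo "$n offset=${off}s OK"
  else
    echo "$n offset=${off}s SKEWED"
  fi
}

check_clocks() {
  local n head remote
  for n in "$@"; do
    head=$(date +%s)
    remote=$($SSH_CMD -o BatchMode=yes -o ConnectTimeout=5 "$n" 'date +%s' 2>/dev/null) \
      || { echo "$n unreachable"; continue; }
    report_skew "$n" "$head" "$remote"
  done
}
```

Running check_clocks with the list of node names prints one OK, SKEWED or unreachable line per node.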

Also, we have no documentation for shutting down the cluster. Is there a best practice we can use for future reference?

The cluster has been down since last weekend, so any insights are appreciated.

Best regards,

Stan McKenzie

Navarro-Intera, LLC

Under contract to the U. S. Department of Energy, NNSA, Nevada Field Office

(702) 295-1645 (Office)