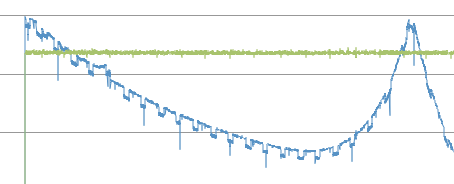

Colleagues,

I ran 2-hour 4K random read (4KRR) tests to understand how performance changes over time on a very fast 1.6 TB NVMe SSD, and I noticed a side effect of the "norandommap" parameter when performing a full-span test on the block device.

The chart above shows an example result with the random map enabled (i.e., without the "norandommap" option) over a 120-minute window (IOPS in blue). As soon as I enabled the "norandommap" option, the curve changed into a straight line, as expected.

Some technical details: I'm running CentOS 7 with a 3.18 kernel, and the SSD is, of course, in a preconditioned state. I'm using FIO 2.2.2 (unfortunately, I saw higher CPU utilization with 2.2.4, which I'll report separately).

Config file:

    [global]
    name=4k random read
    filename=/dev/nvme0n1
    ioengine=libaio
    direct=1
    bs=4k
    rw=randread
    iodepth=16
    numjobs=8
    buffered=0
    size=100%
    randrepeat=0
    norandommap
    refill_buffers
    group_reporting
    [job1]

--
Andrey Kudryavtsev, SSD Solution Architect
Intel Corp.
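The post contrasts fio's default random map with "norandommap". Per fio's documentation, fio normally covers every block of the file during random I/O, while "norandommap" makes it draw a new random offset without looking at past I/O history, so some blocks may be read more than once and others not at all. The Python sketch below is a simplified toy model of that coverage difference only. The function names and the forward-scan collision handling are illustrative assumptions, not fio's actual axmap implementation, and the model says nothing about why the IOPS curve changes shape.

```python
import math
import random


def expected_coverage_norandommap(n_blocks: int, n_ios: int) -> float:
    """Expected fraction of distinct blocks hit after n_ios uniform picks WITH replacement.

    Toy model of norandommap: offsets are drawn independently, so repeats are allowed.
    Computes 1 - (1 - 1/N)^n via log1p for numerical stability at large N.
    """
    return 1.0 - math.exp(n_ios * math.log1p(-1.0 / n_blocks))


def random_map_pass(n_blocks: int, rng: random.Random) -> list[int]:
    """One pass WITHOUT replacement using a simple bitmap (hypothetical random-map model).

    On a collision, scan forward (wrapping) to the next free block, so every
    block is visited exactly once per pass. Not fio's axmap.
    """
    used = bytearray(n_blocks)
    order = []
    for _ in range(n_blocks):
        b = rng.randrange(n_blocks)
        while used[b]:  # block already read in this pass: probe the next one
            b = (b + 1) % n_blocks
        used[b] = 1
        order.append(b)
    return order


def simulated_coverage_norandommap(n_blocks: int, n_ios: int, rng: random.Random) -> float:
    """Empirical fraction of distinct blocks hit by n_ios independent uniform picks."""
    hit = {rng.randrange(n_blocks) for _ in range(n_ios)}
    return len(hit) / n_blocks


if __name__ == "__main__":
    rng = random.Random(0)
    n = 10_000  # toy "device" of 10,000 blocks

    order = random_map_pass(n, rng)
    print("random map, one pass:", len(set(order)) / n)  # every block exactly once

    print("norandommap, n I/Os (simulated):", simulated_coverage_norandommap(n, n, rng))
    print("norandommap, n I/Os (expected):", round(expected_coverage_norandommap(n, n), 4))

    # Scale of the device in the post, assuming decimal 1.6 TB and 4 KiB per I/O.
    print("4 KiB blocks in 1.6 TB:", 1_600_000_000_000 // 4096)
```

In this model, issuing as many independent random reads as there are blocks touches only about 1 - 1/e (roughly 63%) of the span, whereas the bitmap-based pass touches all of it once.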