
I am currently trying to run Ceph over RDMA, using either RoCE v1 or v2, but I am running into problems.

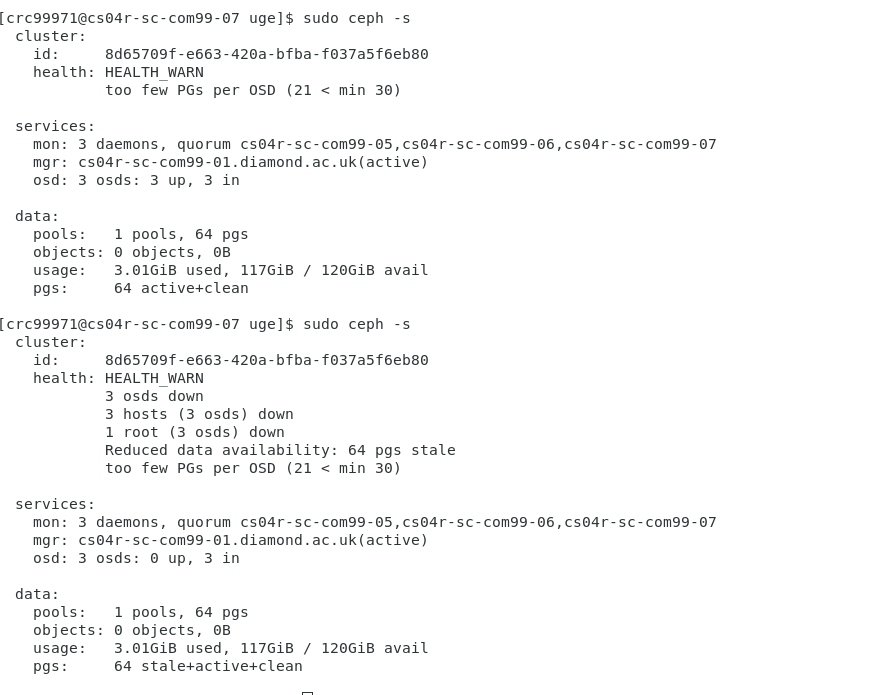

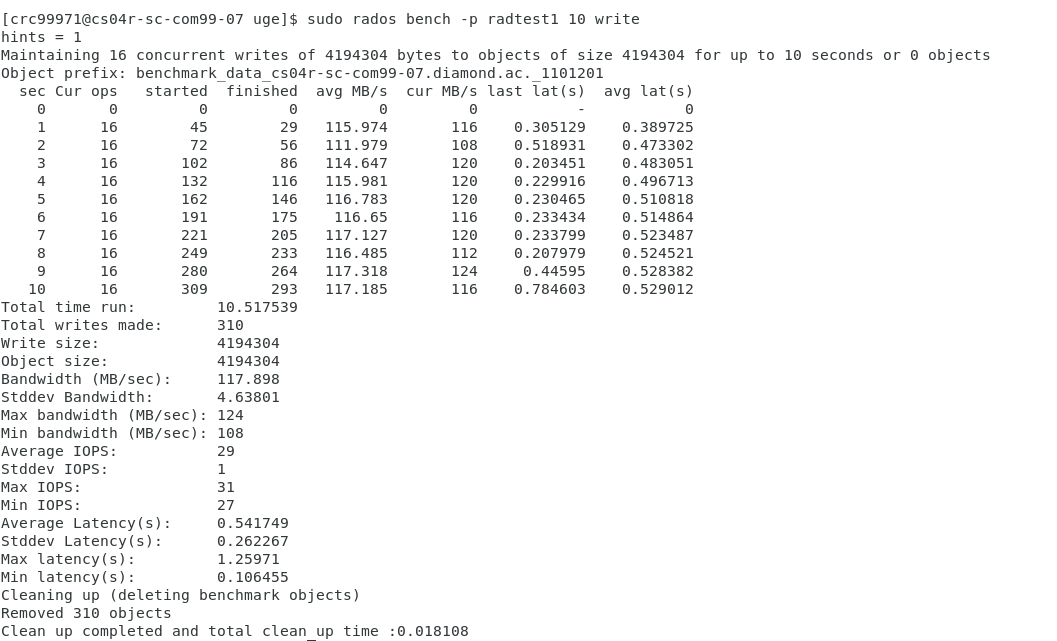

When running Ceph over RDMA, OSDs randomly become unreachable even when the cluster is left idle. The cluster also does not seem to be communicating over RDMA: it uses Ethernet even though the config says it should use RDMA. This is suggested by the benchmarks of the two setups, which give the same results.

After reloading the cluster, it went from healthy to down in 5m 9s.

The problem also occurs while running a benchmark against the cluster: OSDs simply fall over. As noted above, traffic also does not appear to be going over RDMA but over plain Ethernet instead.

Next test:

The config used for RDMA is as follows:

    [global]
    fsid = aaaaaaaa-aaaa-aaaa-aaaa-aaaaaaaaaaaa
    mon_initial_members = node1, node2, node3
    mon_host = xxx.xxx.xxx.xxx, xxx.xxx.xxx.xxx, xxx.xxx.xxx.xxx
    auth_cluster_required = cephx
    auth_service_required = cephx
    auth_client_required = cephx
    public_network = xxx.xxx.xxx.xxx/24
    cluster_network = yyy.yyy.yyy.yyy/16
    ms_cluster_type = async+rdma
    ms_public_type = async+posix
    ms_async_rdma_device_name = mlx4_0

    [osd.0]
    ms_async_rdma_local_gid = xxxx

    [osd.1]
    ms_async_rdma_local_gid = xxxx

    [osd.2]
    ms_async_rdma_local_gid = xxxx

Checking which messenger type osd.0 is using:

    sudo ceph --admin-daemon /var/run/ceph/ceph-osd.0.asok config show | grep ms_cluster

Output:

    "ms_cluster_type": "async+rdma",

    sudo ceph daemon osd.0 perf dump AsyncMessenger::RDMAWorker-1

Output:

    {
        "AsyncMessenger::RDMAWorker-1": {
            "tx_no_mem": 0,
            "tx_parital_mem": 0,
            "tx_failed_post": 0,
            "rx_no_registered_mem": 0,
            "tx_chunks": 9,
            "tx_bytes": 2529,
            "rx_chunks": 0,
            "rx_bytes": 0,
            "pending_sent_conns": 0
        }
    }
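
A rough way to read this counter dump per OSD is to compare `tx_bytes` and `rx_bytes`. The shell function below is a minimal sketch, not a Ceph tool: the name `rdma_traffic_status` and its three result strings are hypothetical, it only inspects the `RDMAWorker-1` counters shown above (other RDMA workers may also carry traffic), and it assumes the counter names and format seen in this post. An empty object (`{}`) means no RDMA worker exists. Non-zero `tx_bytes` with zero `rx_bytes`, as above, suggests this worker has sent data over RDMA but received nothing.

```shell
#!/bin/sh
# Hypothetical helper: classify the output of
#   ceph daemon osd.N perf dump AsyncMessenger::RDMAWorker-1
# read from stdin. Assumes the counter names shown in this post.
rdma_traffic_status() {
  json=$(cat)
  # Extract the numeric values of tx_bytes and rx_bytes (empty if absent).
  tx=$(printf '%s\n' "$json" | sed -n 's/.*"tx_bytes": *\([0-9][0-9]*\).*/\1/p')
  rx=$(printf '%s\n' "$json" | sed -n 's/.*"rx_bytes": *\([0-9][0-9]*\).*/\1/p')

  if [ -z "$tx" ] && [ -z "$rx" ]; then
    # Empty dump ({}): no RDMA worker, e.g. ms_cluster_type = async+posix.
    echo "no-rdma-worker"
  elif [ "${rx:-0}" -eq 0 ]; then
    # This worker has received nothing over RDMA.
    echo "tx-only"
  else
    # This worker has both sent and received data over RDMA.
    echo "bidirectional"
  fi
}
```

For example, piping `sudo ceph daemon osd.0 perf dump AsyncMessenger::RDMAWorker-1` into `rdma_traffic_status` would print `tx-only` for the output above, and `no-rdma-worker` for the `{}` output seen on the Ethernet setup.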

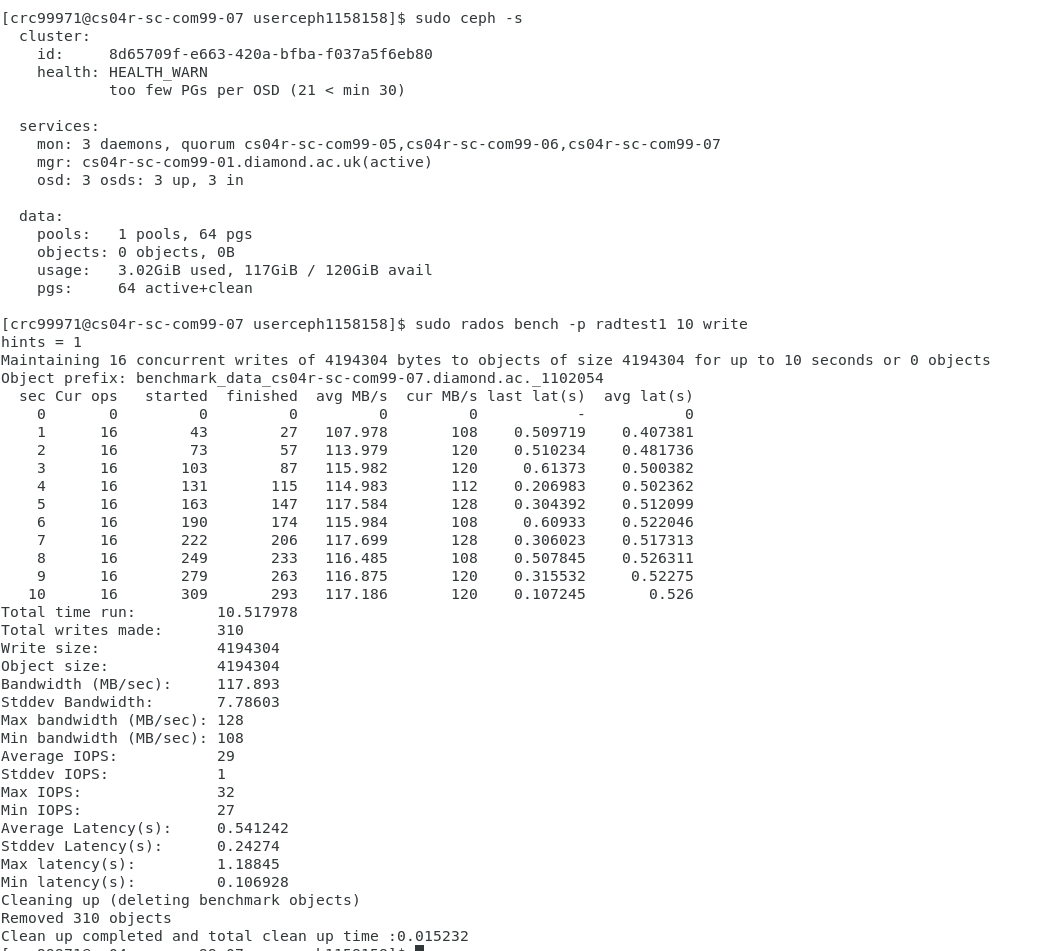

When running over Ethernet, the system is completely stable, with the following benchmark results:

The config used for Ethernet is:

    [global]
    fsid = aaaaaaaa-aaaa-aaaa-aaaa-aaaaaaaaaaaa
    mon_initial_members = node1, node2, node3
    mon_host = xxx.xxx.xxx.xxx, xxx.xxx.xxx.xxx, xxx.xxx.xxx.xxx
    auth_cluster_required = cephx
    auth_service_required = cephx
    auth_client_required = cephx
    public_network = xxx.xxx.xxx.xxx/24
    cluster_network = yyy.yyy.yyy.yyy/16
    ms_cluster_type = async+posix
    ms_public_type = async+posix
    ms_async_rdma_device_name = mlx4_0

    [osd.0]
    ms_async_rdma_local_gid = xxxx

    [osd.1]
    ms_async_rdma_local_gid = xxxx

    [osd.2]
    ms_async_rdma_local_gid = xxxx

Tests to check that the system is using async+posix:

    sudo ceph --admin-daemon /var/run/ceph/ceph-osd.0.asok config show | grep ms_cluster

Output:

    "ms_cluster_type": "async+posix"

    sudo ceph daemon osd.0 perf dump AsyncMessenger::RDMAWorker-1

Output:

    {}

This is clearly an issue with RDMA rather than with the OSDs themselves, since the system is completely stable over Ethernet but not over RDMA.

Any guidance or ideas on how to approach this problem to make Ceph work with RDMA would be greatly appreciated.

Regards

Gabryel Mason-Williams, Placement Student

Address: Diamond Light Source Ltd., Diamond House, Harwell Science & Innovation Campus, Didcot, Oxfordshire OX11 0DE

Email: gabryel.mason-williams@xxxxxxxxxxxxx


_______________________________________________ Dev mailing list -- dev@xxxxxxx To unsubscribe send an email to dev-leave@xxxxxxx