On Thu, 16 Jan 2025 at 00:08, Bruno Gomes Pessanha <bruno.pessanha@xxxxxxxxx> wrote:

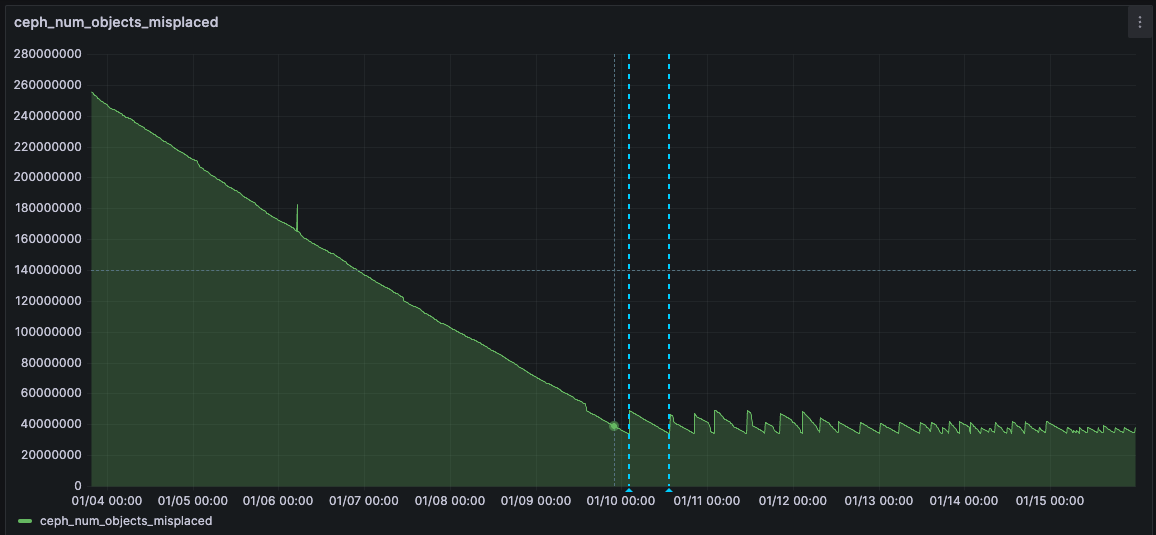

Hi everyone. Yes. All the tips definitely helped! Now I have more free space in the pools, the number of misplaced PGs has decreased a lot, and the standard deviation of OSD usage is lower. The storage looks way healthier now. Thanks a bunch!

I'm only confused by the number of misplaced PGs, which never goes below 5%. Every time it hits 5% it goes up and down, as shown in this quite interesting graph.

Any idea why that might be? I had the impression that it might be related to the autobalancer kicking in and misplacing PGs again. Or am I missing something?

Yes, this seems to be the balancer keeping 5% of your PGs moving to the "correct" places. If you ran an upmap remapper (or had PGs hindered by backfill_toofull), then the balancer might have a long list of PGs it wants to move, but it "only" moves at most 5% of them at a time.
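To illustrate why that produces an up-and-down graph around 5%, here is a toy model in Python. It is not Ceph code: the function `simulate`, its parameters and all the numbers are made up for illustration, and it assumes the 5% cap corresponds to the mgr option `target_max_misplaced_ratio` (default 0.05, as far as I know). The balancer wakes up periodically and tops the misplaced PGs back up to the cap from its backlog, while backfill drains them in between.

```python
def simulate(total_pgs=1000, backlog=200, max_ratio=0.05,
             recovered_per_tick=10, balancer_every=3, ticks=24):
    """Toy model (hypothetical, not Ceph code): misplaced ratio per tick."""
    cap = round(total_pgs * max_ratio)  # most PGs allowed misplaced at once
    misplaced = 0
    history = []
    for tick in range(ticks):
        # The balancer only runs every few ticks, and only adds new moves
        # while the misplaced count is below the cap.
        if tick % balancer_every == 0 and misplaced < cap:
            batch = min(cap - misplaced, backlog)
            misplaced += batch
            backlog -= batch
        history.append(misplaced / total_pgs)
        # Backfill completes some of the PGs that are currently moving.
        misplaced -= min(misplaced, recovered_per_tick)
    return history


if __name__ == "__main__":
    for tick, ratio in enumerate(simulate()):
        print(f"tick {tick:2d}: {ratio:.1%} misplaced")
```

In this sketch the ratio falls while backfill makes progress, jumps back to 5% whenever the balancer runs, and only heads to zero once the backlog is empty. That matches the sawtooth in the graph, at least qualitatively.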

May the most significant bit of your life be positive.

_______________________________________________ ceph-users mailing list -- ceph-users@xxxxxxx To unsubscribe send an email to ceph-users-leave@xxxxxxx