Hi Anthony,

Thanks for the suggested strategy! I'll look into what I can do to fix it, and how.

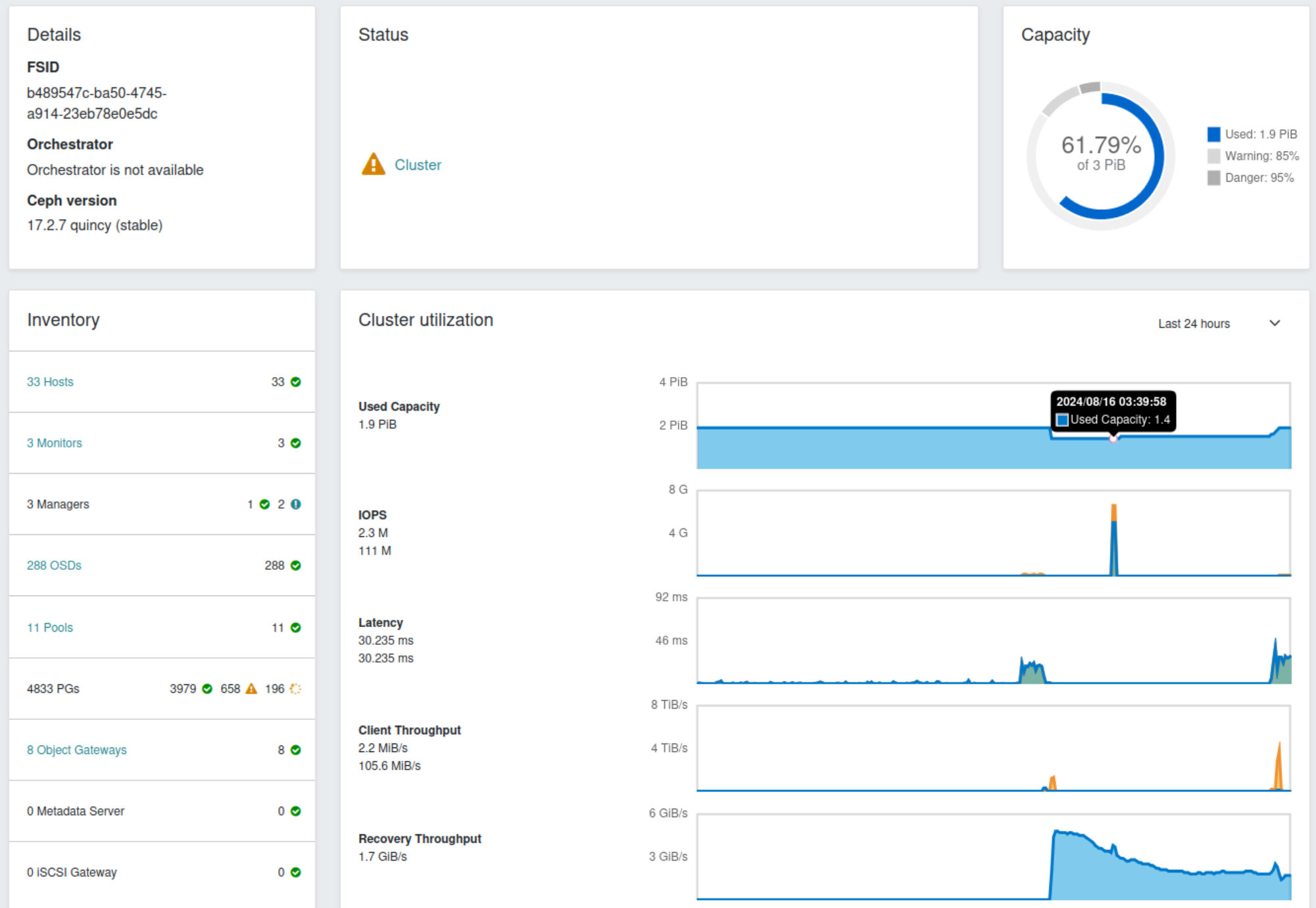

To answer your question about PGs per OSD: we have 288 OSDs (in 24 OSD servers) and about ten pools (besides the .mgr pool), with PG counts that vary per pool depending on the amount stored in each. The PG autoscaler is on; the two main pools have 2048 PGs each, one has 512, and the rest have 32 PGs each (very little data).

We mainly use EC 5+4, and 3-copy replication for RBD.
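The PGs-per-OSD figure asked about can be estimated from the numbers above, counting PG shards (EC chunks or replicas) rather than PGs, since each shard lands on its own OSD. A rough sketch, assuming (not stated in the thread) that both 2048-PG pools are EC 5+4 (9 shards per PG), the 512-PG pool is 3-copy RBD, and the remaining seven pools are 3-copy with 32 PGs each; the pool names are hypothetical, and the real layout can be read from `ceph osd pool ls detail`:

```python
from dataclasses import dataclass


@dataclass
class Pool:
    name: str    # hypothetical label, for readability only
    pg_num: int
    width: int   # shards per PG: k+m for EC, size for replicated


def pg_shards_per_osd(pools: list[Pool], num_osds: int) -> float:
    """Average number of PG shards (replicas or EC chunks) per OSD."""
    total_shards = sum(p.pg_num * p.width for p in pools)
    return total_shards / num_osds


# Assumed layout: which pool uses which scheme is a guess, not from the thread.
pools = [
    Pool("ec-a", 2048, 5 + 4),
    Pool("ec-b", 2048, 5 + 4),
    Pool("rbd", 512, 3),
] + [Pool(f"small-{i}", 32, 3) for i in range(7)]

per_osd = pg_shards_per_osd(pools, num_osds=288)
print(f"{per_osd:.1f} PG shards per OSD")  # prints: 135.7 PG shards per OSD
```

Under these assumptions that is roughly 136 PG shards per OSD, above the autoscaler's default `mon_target_pg_per_osd` of 100, so PG granularity is probably not what holds the upmap balancer back.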

Cheers

/Simon

On Sun, 18 Aug 2024 at 16:53, Anthony D'Atri <aad@xxxxxxxxxxxxxx> wrote:

You may want to look into https://github.com/digitalocean/pgremapper to get the situation under control first.

--
Alex Gorbachev
ISS

Not a bad idea.

We had a really weird Ceph outage today, and I wonder how it came about. The problem seems to have started around midnight. I still need to check whether it had already reached the extent I found it at this morning or whether it grew more gradually, but when I found it, several OSD servers had most or all of their OSD processes down, to the point where our EC 8+3 buckets didn't work anymore.

Look at your metrics and systems. Were the OSDs OOMkilled?

I see some of our OSDs are coming close to (but not quite) 80-85% full.

There are many times when I've seen an overfull error lead to cascading and catastrophic failures. I suspect this may have been one of them.

One can (temporarily) raise the backfillfull / full ratios to help get out of a bad situation, but leaving them raised can lead to an even worse situation later.

Which brings me to another question: why is our balancer doing so badly at balancing the OSDs?

There are certain situations where the bundled balancer is confounded, including CRUSH trees with multiple roots. Subtly, that may include a cluster where some CRUSH rules specify a deviceclass and some don't, as with the .mgr pool if deployed by Rook. That situation confounds the PG autoscaler for sure. If this is the case, consider modifying CRUSH rules so that all specify a deviceclass, and/or simplifying your CRUSH tree if you have explicit multiple roots.

It's configured with upmap mode, and it should work great with the number of PGs per OSD we have...

Which is?

...but it is letting some OSDs reach 80% full while others are not yet 50% full (we're just over 61% full in total).

The current health status is:

HEALTH_WARN Low space hindering backfill (add storage if this doesn't resolve itself): 1 pg backfill_toofull

[WRN] PG_BACKFILL_FULL: Low space hindering backfill (add storage if this doesn't resolve itself): 1 pg backfill_toofull

pg 30.3fc is active+remapped+backfill_wait+backfill_toofull, acting [66,105,124,113,89,132,206,242,179]
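The point above about CRUSH rules where some specify a deviceclass and some don't can be checked mechanically. A minimal sketch, assuming the JSON shape of `ceph osd crush rule dump -f json` (a list of rules, each with `rule_name` and a `steps` list whose `take` step carries `item_name`, with device-class shadow roots named like `default~hdd`); the sample rules are made up for illustration:

```python
import json


def rules_without_device_class(rule_dump_json: str) -> list[str]:
    """Return names of CRUSH rules whose 'take' step targets a plain root.

    Assumes device-class shadow roots appear as '<root>~<class>' in item_name.
    """
    missing = []
    for rule in json.loads(rule_dump_json):
        takes = [s for s in rule.get("steps", []) if s.get("op") == "take"]
        if any("~" not in s.get("item_name", "") for s in takes):
            missing.append(rule["rule_name"])
    return missing


# Hypothetical sample, trimmed to the fields the check reads.
sample = json.dumps([
    {"rule_name": "replicated_rule",
     "steps": [{"op": "take", "item": -1, "item_name": "default"},
               {"op": "chooseleaf_firstn", "num": 0, "type": "host"},
               {"op": "emit"}]},
    {"rule_name": "ec54_hdd",
     "steps": [{"op": "take", "item": -2, "item_name": "default~hdd"},
               {"op": "chooseleaf_indep", "num": 0, "type": "host"},
               {"op": "emit"}]},
])

print(rules_without_device_class(sample))  # prints: ['replicated_rule']
```

A result that lists some rules but not all is the mixed situation described above. One way to resolve it is a replacement rule created with `ceph osd crush rule create-replicated <name> <root> <failure-domain> <class>` and assigned via `ceph osd pool set <pool> crush_rule <name>`; switching a pool's rule can trigger data movement.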

I've started reweighting again, because the balancer is not doing its job in our cluster for some reason...

Reweighting… are you doing “ceph osd crush reweight”, or “ceph osd reweight / reweight-by-utilization”? The latter, in conjunction with pg-upmap, confuses the balancer. If that's the situation you have, I might:

* Use pgremapper or Dan's https://gitlab.cern.ch/ceph/ceph-scripts/blob/master/tools/upmap/upmap-remapped.py to freeze the PG mappings temporarily.
* Temporarily jack up the backfillfull/full ratios for some working room, say to 95 / 98 %.
* One at a time, reset the override reweights to 1.0. No data should move.
* Remove the manual upmaps one at a time, in order of PGs on the most-full OSDs. You should see a brief spurt of backfill.
* Rinse, lather, repeat.
* This should progressively get you to a state where you no longer have any old-style override reweights, i.e. all OSDs have 1.00000 for that value.
* Proceed removing the manual upmaps one or a few at a time.
* The balancer should work now.
* Set the ratios back to the default values.

Below is our dashboard overview; you can see the start and recovery in the 24h graph...

Cheers
/Simon
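The cleanup procedure suggested above can be turned into a reviewable plan before anything is run. A minimal sketch, assuming per-OSD data shaped like the `nodes` entries of `ceph osd df -f json` (`id`, `reweight`, `utilization`) and upmap entries shaped like `pg_upmap_items` in `ceph osd dump -f json` (`pgid` plus `mappings` of `from`/`to` OSD ids); it only builds `ceph` command strings and runs nothing. Ordering upmaps by the fullest OSD they currently place data on is one reading of "in order of PGs on the most-full OSDs", and the freeze step with pgremapper or upmap-remapped.py is left out:

```python
def cleanup_plan(osds, upmap_items, raised=(0.95, 0.98), defaults=(0.90, 0.95)):
    """Return (not run) ceph commands for the reweight/upmap cleanup.

    raised/defaults are (backfillfull, full) ratios. Assumes the PG mappings
    were already frozen (pgremapper / upmap-remapped.py) before this plan runs.
    """
    util = {o["id"]: o["utilization"] for o in osds}
    cmds = []
    # Raise full before backfillfull so full stays above backfillfull at every step.
    cmds.append(f"ceph osd set-full-ratio {raised[1]}")
    cmds.append(f"ceph osd set-backfillfull-ratio {raised[0]}")
    # Reset old-style override reweights to 1.0, one OSD per command.
    for o in sorted(osds, key=lambda o: o["id"]):
        if o["reweight"] < 1.0:
            cmds.append(f"ceph osd reweight {o['id']} 1.0")

    # Interpretation: first remove the upmaps that put data on the fullest OSDs.
    def fullest_target(item):
        return max((util.get(m["to"], 0.0) for m in item["mappings"]), default=0.0)

    for item in sorted(upmap_items, key=fullest_target, reverse=True):
        cmds.append(f"ceph osd rm-pg-upmap-items {item['pgid']}")
    # Restore defaults: lower backfillfull first, then full.
    cmds.append(f"ceph osd set-backfillfull-ratio {defaults[0]}")
    cmds.append(f"ceph osd set-full-ratio {defaults[1]}")
    return cmds


# Hypothetical sample data; real input would come from the JSON outputs named above.
osds = [
    {"id": 0, "reweight": 0.85, "utilization": 83.1},
    {"id": 1, "reweight": 1.0, "utilization": 49.0},
    {"id": 2, "reweight": 0.9, "utilization": 78.5},
]
upmaps = [
    {"pgid": "1.a", "mappings": [{"from": 1, "to": 2}]},
    {"pgid": "1.b", "mappings": [{"from": 1, "to": 0}]},
]
for cmd in cleanup_plan(osds, upmaps):
    print(cmd)
```

Each command is meant to be run by hand, one at a time, letting backfill settle before the next; that is the "rinse, lather, repeat" loop in the list, and the sketch covers only a single pass.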

--
I'm using my gmail.com address because the gmail.com DMARC policy is "none". When I send mail to a mailing list that has not yet been configured to send mail "on behalf of" the sender, but instead does a kind of "forward", DKIM/DMARC checks fail and the sender domain's DMARC policy is applied: some mail servers (Microsoft?) will reject such mail, while others will allow it. See https://wiki.list.org/DEV/DMARC for more details.

ceph-users mailing list -- ceph-users@xxxxxxx

To unsubscribe send an email to ceph-users-leave@xxxxxxx
