Hi Ceph users!

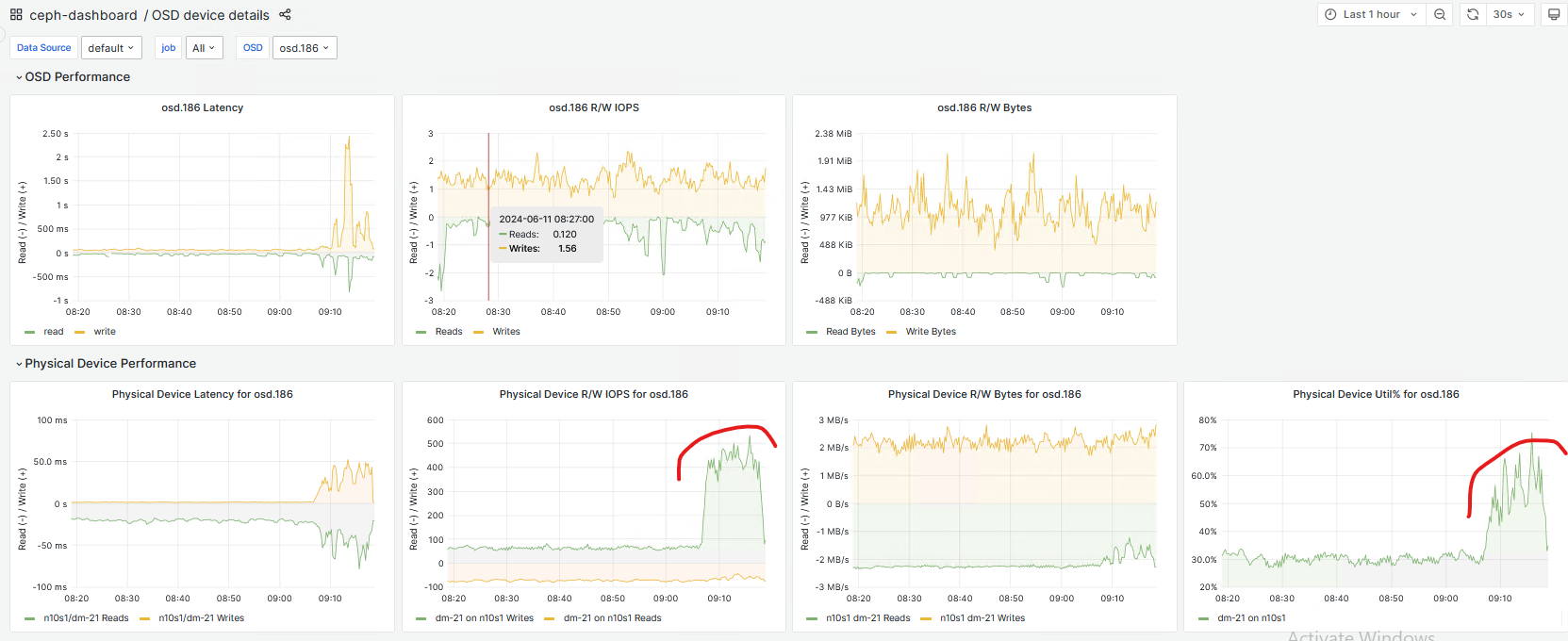

I am encountering a problem with IOPS and disk utilization on an OSD. Sometimes the disk's IOPS spike and its utilization becomes too high, which affects my cluster and causes slow ops to appear in the logs:

```
6/6/24 9:51:46 AM[WRN]Health check update: 0 slow ops, oldest one blocked for 36 sec, osd.268 has slow ops (SLOW_OPS)
6/6/24 9:51:37 AM[WRN]Health check update: 0 slow ops, oldest one blocked for 31 sec, osd.268 has slow ops (SLOW_OPS)
```
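To track how often and for how long an OSD is blocked, the health-check lines quoted above can be pulled out of a log export. This is only a minimal sketch: the regex is fitted to those two lines alone, it assumes a US month/day/year date with a 12-hour clock, and `parse_slow_ops` and `SlowOpsEvent` are hypothetical helper names, not part of any Ceph tooling.

```python
import re
from datetime import datetime
from typing import NamedTuple, Optional

# Assumed format, copied from the two quoted health-check lines; other Ceph
# versions or log sinks may word or timestamp these messages differently.
SLOW_OPS_RE = re.compile(
    r"^(?P<ts>\d{1,2}/\d{1,2}/\d{2} \d{1,2}:\d{2}:\d{2} [AP]M)\s*\[WRN\]"
    r".*?(?P<count>\d+) slow ops, oldest one blocked for (?P<blocked>\d+) sec, "
    r"osd\.(?P<osd>\d+) has slow ops"
)


class SlowOpsEvent(NamedTuple):
    """One parsed SLOW_OPS health-check update (hypothetical type)."""
    when: datetime
    slow_ops: int
    blocked_sec: int
    osd: int


def parse_slow_ops(line: str) -> Optional[SlowOpsEvent]:
    """Return the parsed event, or None if the line is not a SLOW_OPS update."""
    m = SLOW_OPS_RE.search(line)
    if m is None:
        return None
    return SlowOpsEvent(
        # Assumes month/day/two-digit-year and a 12-hour clock with AM/PM.
        when=datetime.strptime(m["ts"], "%m/%d/%y %I:%M:%S %p"),
        slow_ops=int(m["count"]),
        blocked_sec=int(m["blocked"]),
        osd=int(m["osd"]),
    )


if __name__ == "__main__":
    lines = [
        "6/6/24 9:51:46 AM[WRN]Health check update: 0 slow ops, oldest one "
        "blocked for 36 sec, osd.268 has slow ops (SLOW_OPS)",
        "6/6/24 9:51:37 AM[WRN]Health check update: 0 slow ops, oldest one "
        "blocked for 31 sec, osd.268 has slow ops (SLOW_OPS)",
    ]
    events = [e for e in map(parse_slow_ops, lines) if e is not None]
    worst = max(events, key=lambda e: e.blocked_sec)
    print(worst.osd, worst.blocked_sec)  # prints: 268 36
```

Grouping the events by `osd` over a longer window would show whether the spikes always land on osd.268 or move around the cluster.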

This is the config I applied to reduce it, but it does not resolve my problem:

```
global advanced osd_mclock_profile custom
global advanced osd_mclock_scheduler_background_best_effort_lim 0.100000
global advanced osd_mclock_scheduler_background_best_effort_res 0.100000
global advanced osd_mclock_scheduler_background_best_effort_wgt 1
global advanced osd_mclock_scheduler_background_recovery_lim 0.100000
global advanced osd_mclock_scheduler_background_recovery_res 0.100000
global advanced osd_mclock_scheduler_background_recovery_wgt 1
global advanced osd_mclock_scheduler_client_lim 0.400000
global advanced osd_mclock_scheduler_client_res 0.400000
global advanced osd_mclock_scheduler_client_wgt 4
```
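When pasting or comparing config dumps it helps to normalize them first. The sketch below assumes rows in the four-column `WHO LEVEL OPTION VALUE` form shown above (rows carrying a MASK or RO column are not handled). It collapses exact repeats, rejects conflicting values for the same option, and prints the corresponding `ceph config set <who> <option> <value>` commands so the settings can be audited or re-applied. `parse_config_dump` and `to_ceph_commands` are hypothetical helpers, and re-applying the same values will not by itself change scheduler behavior.

```python
from typing import Dict, List, Tuple

# Key is (who, option), e.g. ("global", "osd_mclock_profile").
Settings = Dict[Tuple[str, str], str]


def parse_config_dump(lines: List[str]) -> Settings:
    """Parse 'WHO LEVEL OPTION VALUE' rows (assumed format).

    Identical repeated rows are collapsed; a repeated option with a
    different value raises ValueError.
    """
    settings: Settings = {}
    for raw in lines:
        fields = raw.split()
        if not fields:
            continue  # skip blank separator lines
        if len(fields) != 4:
            raise ValueError(f"unexpected row: {raw!r}")
        who, _level, option, value = fields
        key = (who, option)
        if key in settings and settings[key] != value:
            raise ValueError(f"conflicting values for {option} on {who}")
        settings[key] = value
    return settings


def to_ceph_commands(settings: Settings) -> List[str]:
    """Render each setting as a 'ceph config set' command, in dump order."""
    return [
        f"ceph config set {who} {option} {value}"
        for (who, option), value in settings.items()
    ]


if __name__ == "__main__":
    dump = """
global advanced osd_mclock_profile custom
global advanced osd_mclock_scheduler_client_res 0.400000
global advanced osd_mclock_scheduler_client_res 0.400000
"""
    for cmd in to_ceph_commands(parse_config_dump(dump.splitlines())):
        print(cmd)
```

Run on the sample above, it prints one `ceph config set` line for `osd_mclock_profile` and one for `osd_mclock_scheduler_client_res`, since the repeated row is identical.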

I hope someone can help me.

Thanks so much!

_______________________________________________ ceph-users mailing list -- ceph-users@xxxxxxx To unsubscribe send an email to ceph-users-leave@xxxxxxx