Is your cluster status OK?

I have run into rapid growth of the MDS metadata pool before, when the MDS log (journal) was not being trimmed in time.

Once the cluster status returned to HEALTH_OK, the metadata pool shrank back to its original size.
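
If the journal is the cause, it should show up in the object names. Here is a minimal Python sketch that counts journal objects per MDS rank from a listing of the metadata pool. It assumes that rank N's journal is stored in objects named `<0x200+N in hex>.<8-hex-digit sequence number>` (e.g. `200.00000000` for rank 0). That convention comes from Ceph's reserved MDS inode ranges and should be checked against your release before you trust the numbers.

```python
import re
from collections import Counter

# Assumed naming convention: MDS journal objects are "<inode hex>.<8 hex digits>",
# where the journal inode for MDS rank N is 0x200 + N (rank 0 -> "200.00000000", ...).
OBJ_NAME = re.compile(r"^([0-9a-f]+)\.([0-9a-f]{8})$")
LOG_OFFSET = 0x200  # assumed journal inode for rank 0
MAX_MDS = 0x100     # assumed width of each reserved per-rank inode range


def journal_objects_by_rank(names):
    """Count journal objects per MDS rank from metadata-pool object names.

    `names` is any iterable of strings, e.g. the lines printed by
    `rados -p <metadata pool> ls`.
    """
    counts = Counter()
    for name in names:
        m = OBJ_NAME.match(name.strip())
        if m is None:
            # Named objects such as "mds0_inotable" or "mds0_openfiles.0".
            continue
        ino = int(m.group(1), 16)
        if LOG_OFFSET <= ino < LOG_OFFSET + MAX_MDS:
            counts[ino - LOG_OFFSET] += 1
    return dict(counts)
```

Pass it the lines printed by `rados -p <metadata pool> ls` (the pool name is a placeholder). A per-rank count far above its usual level, which falls back once the cluster is healthy again, would point at untrimmed journal segments rather than new filesystem metadata.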

On Saturday, 11 May 2024 at 17:15, Paul Browne <pfb29@xxxxxxxxx> wrote:

Hello Ceph users,

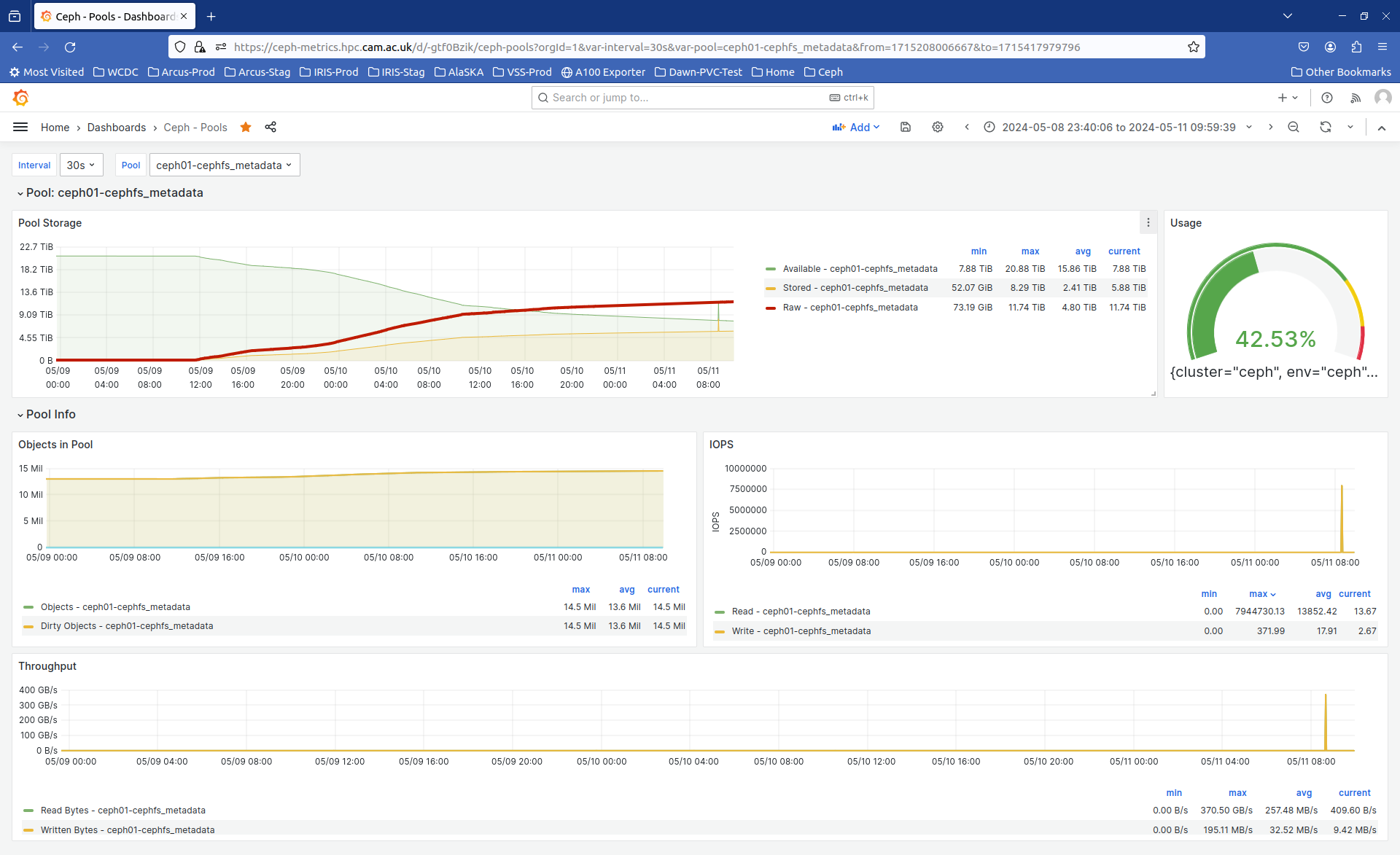

We've recently seen a very large jump in the stored capacity of our CephFS metadata pool: it grew to roughly 150 times its previous raw stored capacity in only about 48 hours. The number of stored objects rose by about 1.5 million over the same period (the attached PNG shows the increase).

What I'd really like to determine, but haven't yet figured out how, is how to map these newly stored objects (over this limited time window) to inodes/dentries in the filesystem, and from there to the individual namespaces in use in the filesystem.
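
For new objects specifically, one option is to save a listing of the metadata pool at the start and end of a window and group the new objects by inode. Below is a minimal Python sketch of that approach. It only works going forward, since it needs a listing saved at the start of the window. It assumes that directory-fragment objects are named `<directory inode hex>.<8-hex-digit fragment id>` and that inode numbers below `1 << 40` (other than the root, `0x1`) are MDS-internal. Both are assumptions to verify on your release. It also cannot see growth inside existing objects, because CephFS keeps dentries in the omap of directory objects.

```python
import re
from collections import Counter

# Assumed naming convention: directory-fragment objects are
# "<directory inode hex>.<fragment id as 8 hex digits>".
OBJ_NAME = re.compile(r"^([0-9a-f]+)\.([0-9a-f]{8})$")
ROOT_INO = 0x1              # root directory of the filesystem
FIRST_CLIENT_INO = 1 << 40  # assumed start of inode numbers handed out by rank 0;
                            # lower numbers are MDS-internal (journals, strays, ...)


def new_dirfrag_objects_by_inode(before, after):
    """Return [(inode, new_object_count), ...] for objects in `after` but not `before`.

    Both arguments are iterables of object names, e.g. two saved listings of
    the metadata pool. Most new objects are the first fragment of a newly
    created directory, so each inode returned is usually a new directory.
    The list is sorted by count, largest first.
    """
    old = {n.strip() for n in before}
    counts = Counter()
    for name in (n.strip() for n in after):
        if name in old:
            continue
        m = OBJ_NAME.match(name)
        if m is None:
            continue  # named objects such as "mds0_openfiles.0"
        ino = int(m.group(1), 16)
        if ino == ROOT_INO or ino >= FIRST_CLIENT_INO:
            counts[ino] += 1
    return counts.most_common()
```

The inode numbers it returns still have to be resolved to paths. Assuming a client mount that reports CephFS inode numbers unchanged, `find <mount point> -inum <decimal inode>` is one way to do that and so attribute the growth to a project directory.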

This should then let me trace the increased usage back to specific projects that use the filesystem for research data storage, and give them a mild warning about possibly exhausting the available metadata pool capacity.

Does anyone know whether CephFS has any capability to do something like this, specifically in Nautilus (run here as Red Hat Ceph Storage 4)?

We've scheduled upgrades to later RHCS releases, but I'd like the cluster and CephFS state to be in a better place first if possible.

Thanks,
Paul Browne

*******************
Paul Browne
Research Computing Platforms
University Information Services
Roger Needham Building
JJ Thompson Avenue
University of Cambridge
Cambridge
United Kingdom
E-Mail: pfb29@xxxxxxxxx
Tel: 0044-1223-746548
*******************

ceph-users mailing list -- ceph-users@xxxxxxx

To unsubscribe send an email to ceph-users-leave@xxxxxxx