|

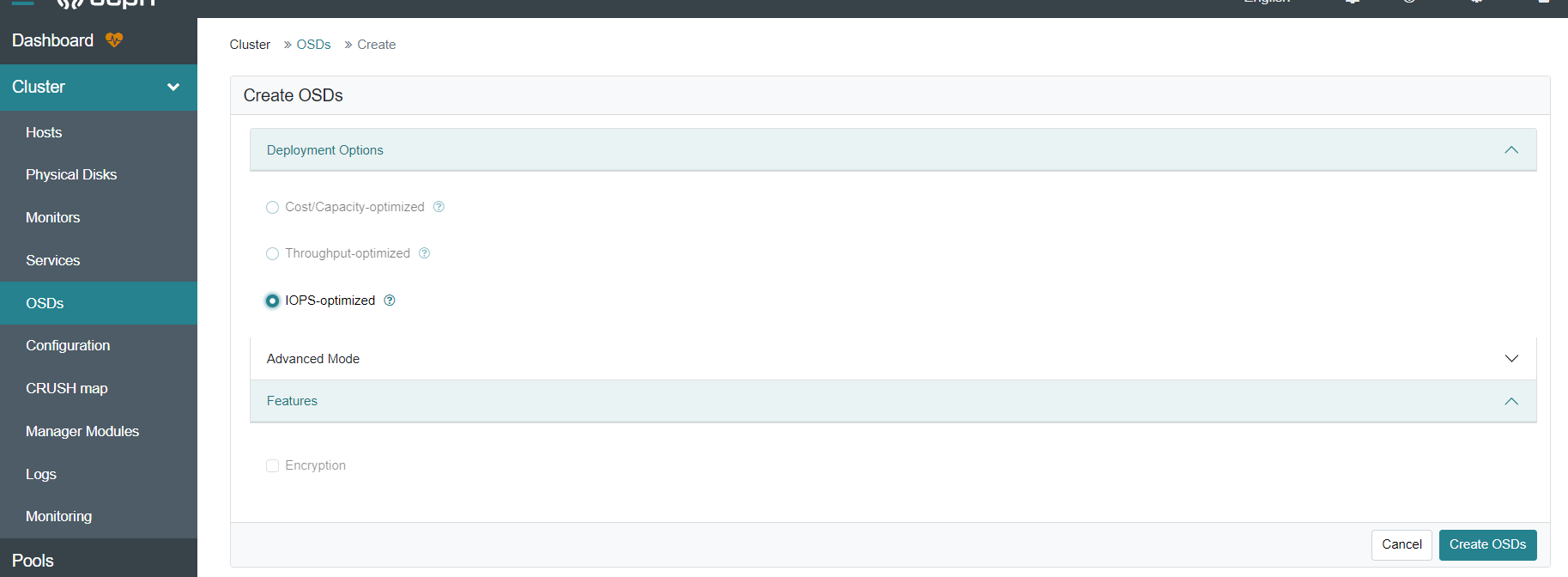

This was a simple step to delete the service:

/# ceph orch rm osd.iops_optimized

The WARN goes away.

Just FYI: ceph orch help does not list the rm option.

Thank you,
Anantha

From: Adiga, Anantha <anantha.adiga@xxxxxxxxx>

Hi,

I am not finding any reference on how to clear this warning AND stop the service. See below.

After creating an OSD with the iops_optimized option, this WARN message appears. Ceph 17.2.6

6/29/23 4:10:45 PM [WRN] Health check failed: Failed to apply 1 service(s): osd.iops_optimized (CEPHADM_APPLY_SPEC_FAIL)

6/29/23 4:10:45 PM [ERR] Failed to apply osd.iops_optimized spec DriveGroupSpec.from_json(yaml.safe_load('''service_type: osd
service_id: iops_optimized
service_name: osd.iops_optimized
placement:
  host_pattern: '*'
spec:
  data_devices:
    rotational: 0
  filter_logic: AND
  objectstore: bluestore
''')): cephadm exited with an error code: 1, stderr:Inferring config /var/lib/ceph/d0a3b6e0-d2c3-11ed-be05-a7a3a1d7a87e/mon.fl31ca104ja0203/config
Non-zero exit code 1 from /usr/bin/docker run --rm --ipc=host --stop-signal=SIGTERM --net=host --entrypoint /usr/sbin/ceph-volume --privileged --group-add=disk --init -e CONTAINER_IMAGE=quay.io/ceph/ceph@sha256:af79fedafc42237b7612fe2d18a9c64ca62a0b38ab362e614ad671efa4a0547e -e NODE_NAME=fl31ca104ja0203 -e

#:/var/log/ceph# grep optimi cephadm.log
cephadm ['--env', 'CEPH_VOLUME_OSDSPEC_AFFINITY=iops_optimized', '--image', 'quay.io/ceph/ceph@sha256:af79fedafc42237b7612fe2d18a9c64ca62a0b38ab362e614ad671efa4a0547e', 'ceph-volume', '--fsid', 'd0a3b6e0-d2c3-11ed-be05-a7a3a1d7a87e',

'--config-json', '-', '--', 'lvm', 'batch', '--no-auto', '/dev/nvme10n1', '/dev/nvme11n1', '/dev/nvme12n1', '/dev/nvme13n1', '/dev/nvme1n1', '/dev/nvme2n1', '/dev/nvme3n1', '/dev/nvme4n1', '/dev/nvme5n1', '/dev/nvme6n1', '/dev/nvme7n1', '/dev/nvme8n1', '/dev/nvme9n1',

'--yes', '--no-systemd']
2023-06-29 23:06:28,340 7fc2668a7740 INFO Non-zero exit code 1 from /usr/bin/docker run --rm --ipc=host --stop-signal=SIGTERM --net=host --entrypoint /usr/sbin/ceph-volume --privileged --group-add=disk --init

-e

CONTAINER_IMAGE=quay.io/ceph/ceph@sha256:af79fedafc42237b7612fe2d18a9c64ca62a0b38ab362e614ad671efa4a0547e -e NODE_NAME=fl31ca104ja0202 -e CEPH_USE_RANDOM_NONCE=1 -e CEPH_VOLUME_OSDSPEC_AFFINITY=iops_optimized -e CEPH_VOLUME_SKIP_RESTORECON=yes -e CEPH_VOLUME_DEBUG=1

-v /var/run/ceph/d0a3b6e0-d2c3-11ed-be05-a7a3a1d7a87e:/var/run/ceph:z -v /var/log/ceph/d0a3b6e0-d2c3-11ed-be05-a7a3a1d7a87e:/var/log/ceph:z -v /var/lib/ceph/d0a3b6e0-d2c3-11ed-be05-a7a3a1d7a87e/crash:/var/lib/ceph/crash:z -v /dev:/dev -v /run/udev:/run/udev

-v /sys:/sys -v /run/lvm:/run/lvm -v /run/lock/lvm:/run/lock/lvm -v /:/rootfs -v /tmp/ceph-tmp1v09i0jx:/etc/ceph/ceph.conf:z -v /tmp/ceph-tmphy3pnh46:/var/lib/ceph/bootstrap-osd/ceph.keyring:z

quay.io/ceph/ceph@sha256:af79fedafc42237b7612fe2d18a9c64ca62a0b38ab362e614ad671efa4a0547e lvm batch --no-auto /dev/nvme10n1 /dev/nvme11n1 /dev/nvme12n1 /dev/nvme13n1 /dev/nvme1n1 /dev/nvme2n1 /dev/nvme3n1 /dev/nvme4n1 /dev/nvme5n1 /dev/nvme6n1 /dev/nvme7n1

/dev/nvme8n1 /dev/nvme9n1 --yes --no-systemd

The warning message clears on its own for a few seconds and the ceph health status goes green, but then it comes back.

I am not finding any reference on how to clear this message, and I am not able to stop the service.

/# ceph orch ls
node-exporter       ?:9100  4/4  8m ago  10w  *
osd                         33   8m ago  -    <unmanaged>
osd.iops_optimized          0    -       2d   *
/# ceph orch stop --service_name osd.iops_optimized
Error EINVAL: No daemons exist under service name "osd.iops_optimized". View currently running services using "ceph orch ls"
/#

Thank you,
Anantha |
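For anyone hitting the same CEPHADM_APPLY_SPEC_FAIL warning, the resolution reported in this thread (ceph orch rm on the failing spec) could be wrapped roughly as follows. This is only a sketch: the helper names run and remove_failed_spec and the DRY_RUN variable are made up for illustration, and it assumes, as in this thread, that the service has no daemons you want to keep. With DRY_RUN=1 (the default here) it only prints the commands, so nothing is changed on the cluster.

```shell
#!/usr/bin/env bash
# Sketch only: helper names and DRY_RUN are hypothetical, not part of Ceph.

# Print the command when DRY_RUN=1 (default), otherwise execute it.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# Inspect, then remove, a service spec that cephadm keeps failing to apply.
remove_failed_spec() {
    local service_name="$1"
    if [ -z "$service_name" ]; then
        echo "usage: remove_failed_spec <service_name>" >&2
        return 2
    fi
    # Show the full text of the health warning.
    run ceph health detail
    # Export the stored spec so it can be reviewed (or saved) before removal.
    run ceph orch ls --service_name "$service_name" --export
    # Remove the spec; this is the step that cleared the warning in this thread.
    run ceph orch rm "$service_name"
}
```

Calling remove_failed_spec osd.iops_optimized prints the three commands for review; running it again with DRY_RUN=0 would execute them. ceph orch stop is not used because, as the error above shows, it needs existing daemons under the service name.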

_______________________________________________
ceph-users mailing list -- ceph-users@xxxxxxx
To unsubscribe send an email to ceph-users-leave@xxxxxxx