Thanks Ulrich, using `ceph config set` instead of specifying in the config file did end up working for me.

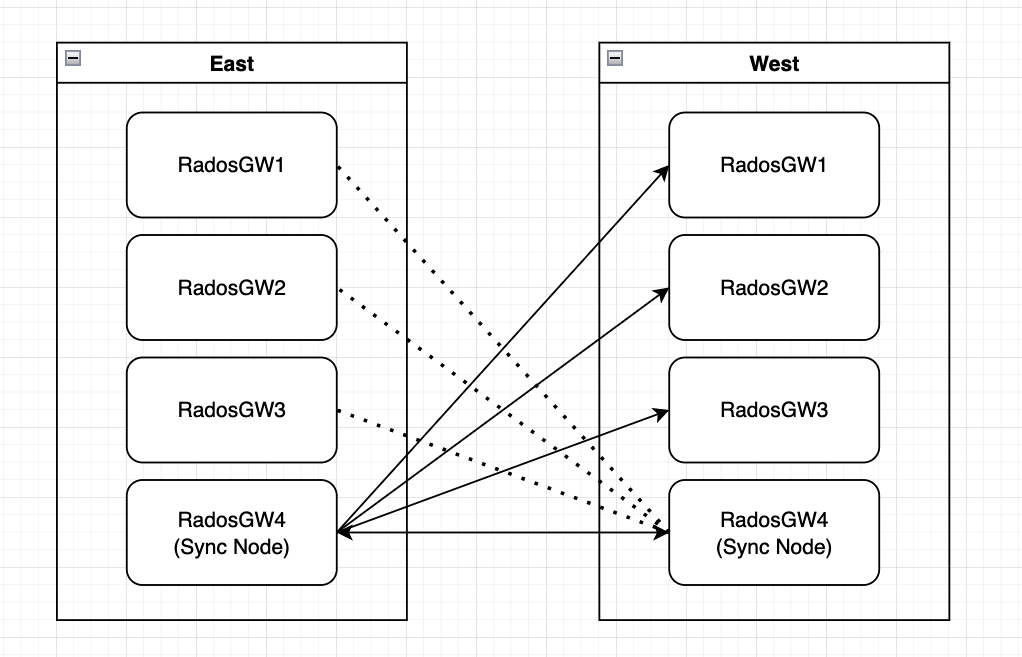

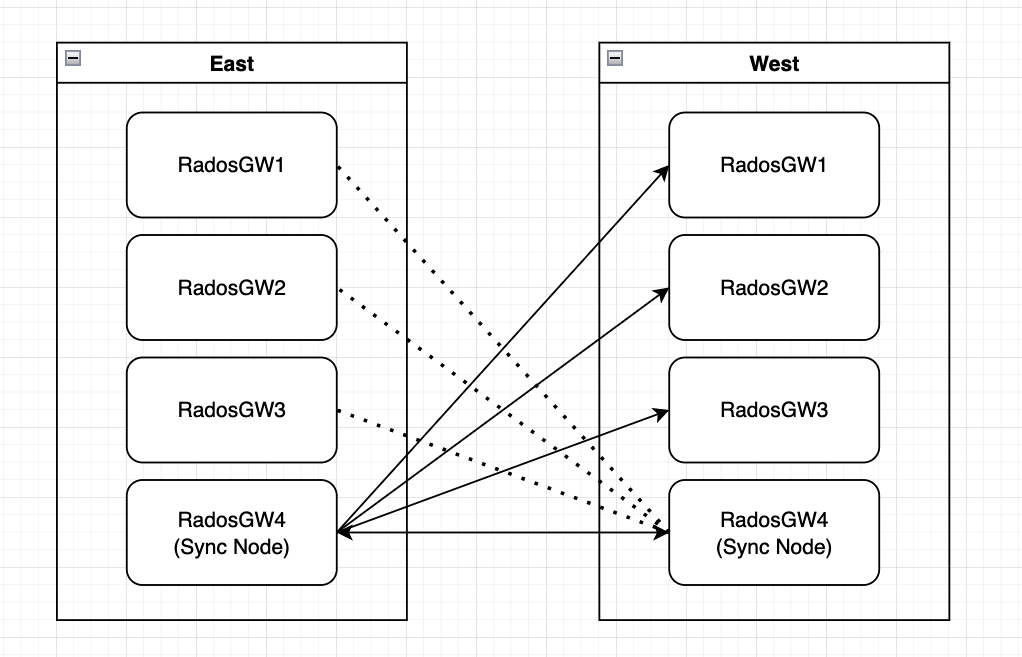

What I expected to happen with this series of changes:

RadosGW4 would only sync with RadosGW4 of the opposing site. Looking at the logs, I see that RadosGW4 talks to WestGW1, 2, 3, and 4 instead of just 4 talking to 4 exclusively. I see this same pattern of activity from both sides of the zonegroup.

Is this expected?

My expectation was that RadosGW4 would talk exclusively to RadosGW4, to remove sync traffic from the client-facing radosgw.
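For anyone wanting to check the same thing on their own gateways, here is a rough sketch of how the source IPs of sync requests could be tallied from a beast access log. It assumes the log layout quoted further down in this thread (the client IP is the second field after `beast:` and the user name the fourth); the function name `count_sync_clients` is made up for illustration.

```shell
#!/bin/sh
# Tally beast access-log requests made by one user, grouped by client IP.
# Assumed line layout (taken from the log excerpt in this thread):
#   <timestamp> <thread> 1 beast: <req-id>: <client-ip> - <user> [...]
count_sync_clients() {
    # $1: user name to match; log lines are read from stdin
    awk -v user="$1" '
        {
            for (i = 1; i < NF; i++) {
                # after "beast:" come the request id, the client IP, "-", the user
                if ($i == "beast:" && $(i + 4) == user) {
                    seen[$(i + 2)]++
                    break
                }
            }
        }
        END { for (ip in seen) print seen[ip], ip }
    ' | sort -k2,2
}
```

Something like `count_sync_clients synchronization-user < /var/log/ceph/west-rgw-west01.rgw0.log` would then print one "count IP" line per client. If only the dedicated gateway were pulling, a single IP would be expected in that list.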

I have included a diagram below. I am unsure if pictures come through the mailing list.

From: Ulrich Klein <Ulrich.Klein@xxxxxxxxxxxxxx>

Date: Friday, May 19, 2023 at 5:57 AM

To: Tarrago, Eli (RIS-BCT) <Eli.Tarrago@xxxxxxxxxxxxxxxxxx>

Cc: ceph-users <ceph-users@xxxxxxx>

Subject: Re: Dedicated radosgw gateways


Not sure this will help:

I did some experiments on small test clusters with separate RGWs for "customers" and for syncing. Both are Cephadm/containerized clusters.

Cluster A primary with zone A with 4 nodes, one RGW on each, node 1 and 2 behind one HAProxy/ingress URL for syncing via http://clusterA, node 3 and 4 behind one HAProxy/ingress for customers via https://customersA

Cluster B secondary with zone B with 4 nodes, one RGW on each, node 1 and 2 behind one HAProxy/ingress URL for syncing via http://clusterB, node 3 and 4 behind one HAProxy/ingress for customers via https://customersB

Endpoint for cluster A is http://clusterA

Endpoint for cluster B is http://clusterB

I set in cluster B:

ceph config set client.rgw.clb.node3.nxumka rgw_run_sync_thread false

ceph config set client.rgw.clb.node4.xeeqqq rgw_run_sync_thread false

ceph config set client.rgw.clb.node3.nxumka rgw_enable_lc_threads false

ceph config set client.rgw.clb.node4.xeeqqq rgw_enable_lc_threads false

ceph config set client.rgw.clb.node3.nxumka rgw_enable_gc_threads false

ceph config set client.rgw.clb.node4.xeeqqq rgw_enable_gc_threads false

ceph orch restart rgw.customer # That's the "service" for customer RGW on nodes 3 and 4.
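The same settings could be applied with a small loop instead of typing each line. This is only a sketch: the `run` and `disable_background_threads` helpers and the `DRY_RUN` switch are made up for illustration, and by default the script only prints the `ceph config set` commands rather than executing them.

```shell
#!/bin/sh
# Print (or run) the `ceph config set` calls that switch off the sync, lc and
# gc threads on a list of customer-facing RGW daemons.
# DRY_RUN=1 (the default in this sketch) only prints the commands.
DRY_RUN="${DRY_RUN:-1}"

run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

disable_background_threads() {
    # arguments: daemon names without the "client." prefix,
    # e.g. rgw.clb.node3.nxumka
    for daemon in "$@"; do
        for opt in rgw_run_sync_thread rgw_enable_lc_threads rgw_enable_gc_threads; do
            run ceph config set "client.$daemon" "$opt" false
        done
    done
}
```

Calling `disable_background_threads rgw.clb.node3.nxumka rgw.clb.node4.xeeqqq` prints the six commands above; with `DRY_RUN=0` it would run them. The restart of the RGW service still has to follow.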

Now I uploaded a 32G file to cluster A (via https://customersA)

Once it's uploaded cluster B pulls it over. As the clusters are otherwise completely idle I can check the network load (via SNMP) on the nodes to see which one is doing the transfers.

Without the restart cluster B seems to use a random RGW for pulling the sync data, for me actually always node 3 or 4.

But after the restart it uses only nodes 1 and 2 for pulling (although I only ran the test 4 times).

So, looks like one has to restart the RGWs to pick up the configuration.

Ciao, Uli

> On 18. May 2023, at 21:14, Tarrago, Eli (RIS-BCT) <Eli.Tarrago@xxxxxxxxxxxxxxxxxx> wrote:

>

> Adding a bit more context to this thread.

>

> I added an additional radosgw to each cluster. Radosgw 1-3 are customer facing. Radosgw #4 is dedicated to syncing.

>

> Radosgw 1-3 now have these additional lines:

> rgw_enable_lc_threads = False

> rgw_enable_gc_threads = False

>

> Radosgw4 has the additional line:

> rgw_sync_obj_etag_verify = True

>

> The logs on all of the radosgws appear to be identical; here is an example log that is representative of any of the servers. Notice the client IP addresses are 1-4, where I expected the traffic to come from only 4.

>

> Is this to be expected?

>

> My understanding of this thread is that this traffic would be relegated to radosgw 04.

>

>

> Example Ceph Conf for a single node, this is RadosGw 01

>

> [client.rgw.west01.rgw0]

> host = west01

> keyring = /var/lib/ceph/radosgw/west-rgw.west01.rgw0/keyring

> log file = /var/log/ceph/west-rgw-west01.rgw0.log

> rgw frontends = beast port=8080 num_threads=500

> rgw_dns_name = west01.example.com

> rgw_max_chunk_size = 67108864

> rgw_obj_stripe_size = 67108864

> rgw_put_obj_min_window_size = 67108864

> rgw_zone = rgw-west

> rgw_enable_lc_threads = False

> rgw_enable_gc_threads = False

>

> ------------

>

> Example Logs:

>

> 2023-05-18T19:06:48.295+0000 7fb295f83700 1 beast: 0x7fb3e82b26f0: 10.10.10.1 - synchronization-user [18/May/2023:19:06:48.107 +0000] "GET /admin/log/?type=data&id=69&marker=1_xxxx&extra-info=true&rgwx-zonegroup=xxxxx HTTP/1.1" 200 44 - - - latency=0.188007131s

> 2023-05-18T19:06:48.371+0000 7fb1dd612700 1 ====== starting new request req=0x7fb3e80ae6f0 =====

> 2023-05-18T19:06:48.567+0000 7fb1dd612700 1 ====== req done req=0x7fb3e80ae6f0 op status=0 http_status=200 latency=0.196007445s ======

> 2023-05-18T19:06:48.567+0000 7fb1dd612700 1 beast: 0x7fb3e80ae6f0: 10.10.10.3 - synchronization-user [18/May/2023:19:06:48.371 +0000] "GET /admin/log/?type=data&id=107&marker=1_xxxx&extra-info=true&rgwx-zonegroup=xxxx HTTP/1.1" 200 44 - - - latency=0.196007445s

> 2023-05-18T19:06:49.023+0000 7fb290f79700 1 ====== starting new request req=0x7fb3e81b06f0 =====

> 2023-05-18T19:06:49.023+0000 7fb28bf6f700 1 ====== req done req=0x7fb3e81b06f0 op status=0 http_status=200 latency=0.000000000s ======

> 2023-05-18T19:06:49.023+0000 7fb28bf6f700 1 beast: 0x7fb3e81b06f0: 10.10.10.2 - synchronization-user [18/May/2023:19:06:49.023 +0000] "GET /admin/log?bucket-instance=ceph-bucketxxx%3A81&format=json&marker=00000020447.3609723.6&type=bucket-index&rgwx-zonegroup=xxx HTTP/1.1" 200 2 - - - latency=0.000000000s

> 2023-05-18T19:06:49.147+0000 7fb27af4d700 1 ====== starting new request req=0x7fb3e81b06f0 =====

> 2023-05-18T19:06:49.151+0000 7fb27af4d700 1 ====== req done req=0x7fb3e81b06f0 op status=0 http_status=200 latency=0.004000151s ======

> 2023-05-18T19:06:49.475+0000 7fb280f59700 1 beast: 0x7fb3e82316f0: 10.10.10.4 - synchronization-user [18/May/2023:19:06:49.279 +0000] "GET /admin/log/?type=data&id=58&marker=1_xxxx.1&extra-info=true&rgwx-zonegroup=xxxx HTTP/1.1" 200 312 - - - latency=0.196007445s

> 2023-05-18T19:06:49.987+0000 7fb27c750700 1 ====== starting new request req=0x7fb3e81b06f0 =====

>

>

>

>

> radosgw-admin zonegroup get

> {

> "id": "x",

> "name": "eastWestceph",

> "api_name": "EastWestCeph",

> "is_master": "true",

> "endpoints": [

> "https://nam11.safelinks.protection.outlook.com/?url="">",

> "https://nam11.safelinks.protection.outlook.com/?url="">",

> "https://nam11.safelinks.protection.outlook.com/?url="">",

> "https://nam11.safelinks.protection.outlook.com/?url="">",

<< ---- sync node

> "https://nam11.safelinks.protection.outlook.com/?url="">",

> "https://nam11.safelinks.protection.outlook.com/?url="">",

> "https://nam11.safelinks.protection.outlook.com/?url="">",

> "https://nam11.safelinks.protection.outlook.com/?url="">"

<< ---- sync node

> ],

> .......

> ],

> "hostnames_s3website": [],

> "master_zone": "x",

> "zones": [

> {

> "id": "x",

> "name": "rgw-west",

> "endpoints": [

> "https://nam11.safelinks.protection.outlook.com/?url="">",

> "https://nam11.safelinks.protection.outlook.com/?url="">",

> "https://nam11.safelinks.protection.outlook.com/?url="">",

> "https://nam11.safelinks.protection.outlook.com/?url="">"

<< -- sync node

> ],

> "log_meta": "false",

> "log_data": "true",

> "bucket_index_max_shards": 0,

> "read_only": "false",

> "tier_type": "",

> "sync_from_all": "true",

> "sync_from": [],

> "redirect_zone": ""

> },

> {

> "id": "x",

> "name": "rgw-east",

> "endpoints": [

> "https://nam11.safelinks.protection.outlook.com/?url="">",

> "https://nam11.safelinks.protection.outlook.com/?url="">",

> "https://nam11.safelinks.protection.outlook.com/?url="">",

> "https://nam11.safelinks.protection.outlook.com/?url="">"

<< -- sync node

> ....

> From: Ulrich Klein <Ulrich.Klein@xxxxxxxxxxxxxx>

> Date: Tuesday, May 16, 2023 at 10:22 AM

> To: Konstantin Shalygin <k0ste@xxxxxxxx>

> Cc: Michal Strnad <michal.strnad@xxxxxxxxx>, ceph-users <ceph-users@xxxxxxx>

> Subject: Re: Dedicated radosgw gateways


>

>

>

> Hi,

>

> Might be a dumb question …

> I'm wondering how I can set those config variables in some but not all RGW processes?

>

> I'm on a cephadm 17.2.6. On 3 nodes I have RGWs. The ones on 8080 are behind haproxy for users. The ones on 8081 I'd like for sync only.

>

> # ceph orch ps | grep rgw

> rgw.max.maxvm4.lmjaef maxvm4 *:8080 running (51m) 4s ago 2h 262M - 17.2.6 d007367d0f3c 315f47a4f164

> rgw.max.maxvm4.lwzxpf maxvm4 *:8081 running (51m) 4s ago 2h 199M - 17.2.6 d007367d0f3c 7ae82e5f6ef2

> rgw.max.maxvm5.syxpnb maxvm5 *:8081 running (51m) 4s ago 2h 137M - 17.2.6 d007367d0f3c c0635c09ba8f

> rgw.max.maxvm5.wtpyfk maxvm5 *:8080 running (51m) 4s ago 2h 267M - 17.2.6 d007367d0f3c b4ad91718094

> rgw.max.maxvm6.ostneb maxvm6 *:8081 running (51m) 4s ago 2h 150M - 17.2.6 d007367d0f3c 83b2af8f787a

> rgw.max.maxvm6.qfulra maxvm6 *:8080 running (51m) 4s ago 2h 262M - 17.2.6 d007367d0f3c 81d01bf9e21d

>

> # ceph config show rgw.max.maxvm4.lwzxpf

> Error ENOENT: no config state for daemon rgw.max.maxvm4.lwzxpf

>

> # ceph config set rgw.max.maxvm4.lwzxpf rgw_enable_lc_threads false

> Error EINVAL: unrecognized config target 'rgw.max.maxvm4.lwzxpf'

> (Not surprised)

>

> # ceph tell rgw.max.maxvm4.lmjaef get rgw_enable_lc_threads

> error handling command target: local variable 'poolid' referenced before assignment

>

> # ceph tell rgw.max.maxvm4.lmjaef set rgw_enable_lc_threads false

> error handling command target: local variable 'poolid' referenced before assignment

>

> Is there any way to set the config for specific RGWs in a containerized env?

>

> (ceph.conf doesn't work. Doesn't do anything and gets overwritten with a minimal version at "unpredictable" intervals)
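A note on the target names: the `ceph config set` commands that worked elsewhere in this thread address each daemon as `client.` plus the name shown by `ceph orch ps`. Under that assumption, and assuming the column layout pasted above (NAME, HOST, PORTS, ...), the targets for one port could be derived roughly like this. The function name `rgw_targets_for_port` is hypothetical.

```shell
#!/bin/sh
# Turn `ceph orch ps` rows into `ceph config` targets for one RGW port.
# Assumes column 1 is the daemon name and column 3 the port in "*:PORT" form.
rgw_targets_for_port() {
    # $1: port number, e.g. 8080; `ceph orch ps` output is read from stdin
    awk -v port="$1" '($1 ~ /^rgw\./) && ($3 == ("*:" port)) { print "client." $1 }'
}
```

For example, `ceph orch ps | rgw_targets_for_port 8080` would list the user-facing daemons in this setup, one target per line, ready to be passed to `ceph config set <target> rgw_enable_lc_threads false`.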

>

> Thanks for any ideas.

>

> Ciao, Uli

>

>> On 15. May 2023, at 14:15, Konstantin Shalygin <k0ste@xxxxxxxx> wrote:

>>

>> Hi,

>>

>>> On 15 May 2023, at 14:58, Michal Strnad <michal.strnad@xxxxxxxxx> wrote:

>>>

>>> at Cephalocon 2023, it was mentioned several times that for service tasks such as data deletion via garbage collection or data replication in S3 via zoning, it is good to do them on dedicated radosgw gateways and not mix them with gateways used by users. How can this be achieved? How can we isolate these tasks? Will using dedicated keyrings instead of admin keys be sufficient? How do you operate this in your environment?

>>

>> Just:

>>

>> # don't put client traffic to "dedicated radosgw gateways"

>> # disable lc/gc on "gateways used by users" via `rgw_enable_lc_threads = false` & `rgw_enable_gc_threads = false`

>>

>>

>> k

>> _______________________________________________

>> ceph-users mailing list -- ceph-users@xxxxxxx

>> To unsubscribe send an email to ceph-users-leave@xxxxxxx


>

> ________________________________

> The information contained in this e-mail message is intended only for the personal and confidential use of the recipient(s) named above. This message may be an attorney-client communication and/or work product and as such is privileged and confidential. If the reader of this message is not the intended recipient or an agent responsible for delivering it to the intended recipient, you are hereby notified that you have received this document in error and that any review, dissemination, distribution, or copying of this message is strictly prohibited. If you have received this communication in error, please notify us immediately by e-mail, and delete the original message.
