On Wed, Apr 19, 2023 at 11:02 AM Ilya Dryomov <idryomov@xxxxxxxxx> wrote:

On Wed, Apr 19, 2023 at 10:29 AM Reto Gysi <rlgysi@xxxxxxxxx> wrote:

>

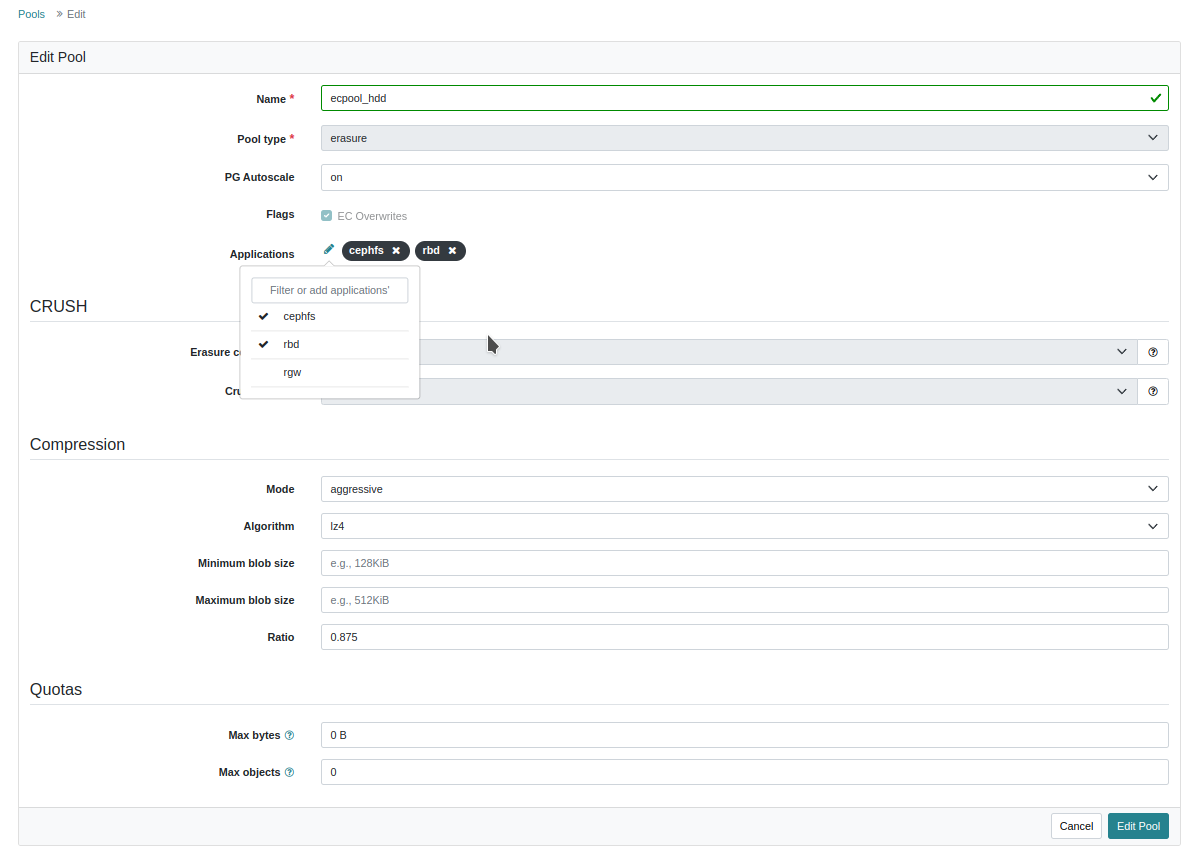

> Yes, I also used the same ecpool_hdd for cephfs file systems. I created the new pool ecpool_test for a test, also with application profile 'cephfs', but no cephfs filesystem is attached to it.

This is not and has never been supported.

Do you mean 1) using the same erasure-coded pool for both rbd and cephfs, or 2) multiple cephfs filesystems using the same erasure-coded pool via ceph.dir.layout.pool="ecpool_hdd"?

1)

2)

rgysi cephfs filesystem

    rgysi - 5 clients
    =====
    RANK  STATE   MDS                  ACTIVITY    DNS   INOS  DIRS   CAPS
    0     active  rgysi.debian.uhgqen  Reqs: 0 /s  409k  408k  40.8k  16.5k
    POOL               TYPE      USED   AVAIL
    cephfs.rgysi.meta  metadata  1454M  2114G
    cephfs.rgysi.data  data      4898G  17.6T
    ecpool_hdd         data      29.3T  29.6T

    root@zephir:~# getfattr -n ceph.dir.layout /home/rgysi/am/ecpool/
    getfattr: Removing leading '/' from absolute path names
    # file: home/rgysi/am/ecpool/
    ceph.dir.layout="stripe_unit=4194304 stripe_count=1 object_size=4194304 pool=ecpool_hdd"
    root@zephir:~#

backups cephfs filesystem

    backups - 2 clients
    =======
    RANK  STATE   MDS                    ACTIVITY    DNS   INOS  DIRS   CAPS
    0     active  backups.debian.runngh  Reqs: 0 /s  253k  253k  21.3k  899
    POOL                 TYPE      USED   AVAIL
    cephfs.backups.meta  metadata  1364M  2114G
    cephfs.backups.data  data      16.7T  16.4T
    ecpool_hdd           data      29.3T  29.6T

    root@zephir:~# getfattr -n ceph.dir.layout /mnt/backups/windows/windows-drives/
    getfattr: Removing leading '/' from absolute path names
    # file: mnt/backups/windows/windows-drives/
    ceph.dir.layout="stripe_unit=4194304 stripe_count=1 object_size=4194304 pool=ecpool_hdd"
    root@zephir:~#

So I guess I should use a separate EC data pool for rbd and for each of the cephfs filesystems in the future, correct?

Thanks & Cheers

Reto

_______________________________________________ ceph-users mailing list -- ceph-users@xxxxxxx To unsubscribe send an email to ceph-users-leave@xxxxxxx