Hello Everyone,

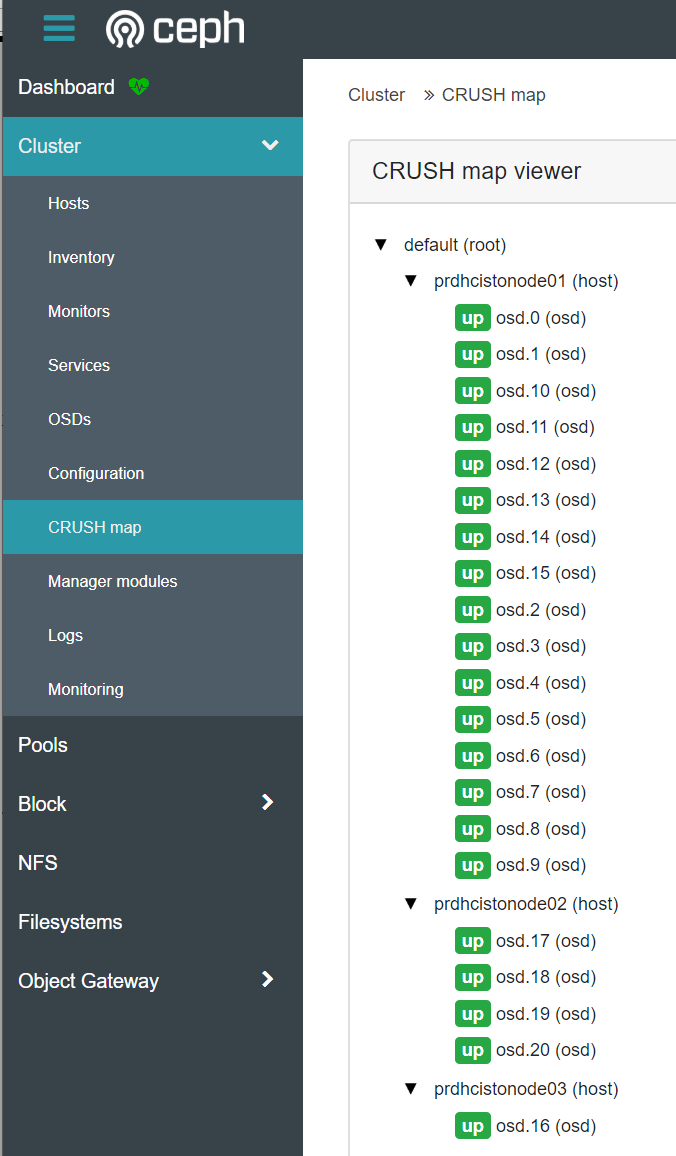

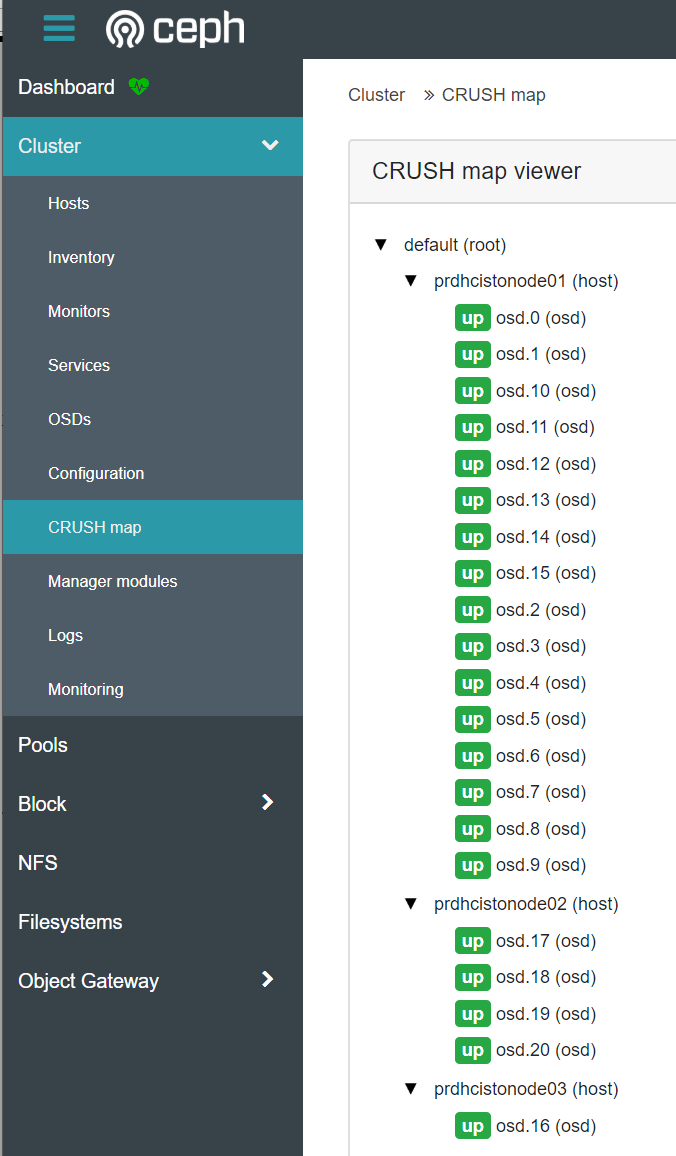

We're working on a new cluster and seeing some oddities: the CRUSH map viewer is not showing all hosts or OSDs (see the screenshots above). The cluster is all NVMe with 4 hosts, each having 8 NVMe drives. We're using 2 OSDs per NVMe drive, with encryption, and a max size of 3 and min size of 2.

All OSDs do appear in the output of ceph osd tree:

    root@prdhcistonode01:~# ceph osd tree
    ID  CLASS  WEIGHT     TYPE NAME                 STATUS  REWEIGHT  PRI-AFF
    -1         372.60156  root default
    -3          93.15039      host prdhcistonode01
     0    ssd    5.82190          osd.0                 up   1.00000  1.00000
     1    ssd    5.82190          osd.1                 up   1.00000  1.00000
     2    ssd    5.82190          osd.2                 up   1.00000  1.00000
     3    ssd    5.82190          osd.3                 up   1.00000  1.00000
     4    ssd    5.82190          osd.4                 up   1.00000  1.00000
     5    ssd    5.82190          osd.5                 up   1.00000  1.00000
     6    ssd    5.82190          osd.6                 up   1.00000  1.00000
     7    ssd    5.82190          osd.7                 up   1.00000  1.00000
     8    ssd    5.82190          osd.8                 up   1.00000  1.00000
     9    ssd    5.82190          osd.9                 up   1.00000  1.00000
    10    ssd    5.82190          osd.10                up   1.00000  1.00000
    11    ssd    5.82190          osd.11                up   1.00000  1.00000
    12    ssd    5.82190          osd.12                up   1.00000  1.00000
    13    ssd    5.82190          osd.13                up   1.00000  1.00000
    14    ssd    5.82190          osd.14                up   1.00000  1.00000
    15    ssd    5.82190          osd.15                up   1.00000  1.00000
    -5          93.15039      host prdhcistonode02
    17    ssd    5.82190          osd.17                up   1.00000  1.00000
    18    ssd    5.82190          osd.18                up   1.00000  1.00000
    19    ssd    5.82190          osd.19                up   1.00000  1.00000
    20    ssd    5.82190          osd.20                up   1.00000  1.00000
    21    ssd    5.82190          osd.21                up   1.00000  1.00000
    22    ssd    5.82190          osd.22                up   1.00000  1.00000
    23    ssd    5.82190          osd.23                up   1.00000  1.00000
    24    ssd    5.82190          osd.24                up   1.00000  1.00000
    25    ssd    5.82190          osd.25                up   1.00000  1.00000
    26    ssd    5.82190          osd.26                up   1.00000  1.00000
    27    ssd    5.82190          osd.27                up   1.00000  1.00000
    28    ssd    5.82190          osd.28                up   1.00000  1.00000
    29    ssd    5.82190          osd.29                up   1.00000  1.00000
    30    ssd    5.82190          osd.30                up   1.00000  1.00000
    48    ssd    5.82190          osd.48                up   1.00000  1.00000
    49    ssd    5.82190          osd.49                up   1.00000  1.00000
    -7          93.15039      host prdhcistonode03
    16    ssd    5.82190          osd.16                up   1.00000  1.00000
    31    ssd    5.82190          osd.31                up   1.00000  1.00000
    32    ssd    5.82190          osd.32                up   1.00000  1.00000
    33    ssd    5.82190          osd.33                up   1.00000  1.00000
    34    ssd    5.82190          osd.34                up   1.00000  1.00000
    35    ssd    5.82190          osd.35                up   1.00000  1.00000
    36    ssd    5.82190          osd.36                up   1.00000  1.00000
    37    ssd    5.82190          osd.37                up   1.00000  1.00000
    38    ssd    5.82190          osd.38                up   1.00000  1.00000
    39    ssd    5.82190          osd.39                up   1.00000  1.00000
    40    ssd    5.82190          osd.40                up   1.00000  1.00000
    41    ssd    5.82190          osd.41                up   1.00000  1.00000
    42    ssd    5.82190          osd.42                up   1.00000  1.00000
    43    ssd    5.82190          osd.43                up   1.00000  1.00000
    44    ssd    5.82190          osd.44                up   1.00000  1.00000
    45    ssd    5.82190          osd.45                up   1.00000  1.00000
    -9          93.15039      host prdhcistonode04
    46    ssd    5.82190          osd.46                up   1.00000  1.00000
    47    ssd    5.82190          osd.47                up   1.00000  1.00000
    50    ssd    5.82190          osd.50                up   1.00000  1.00000
    51    ssd    5.82190          osd.51                up   1.00000  1.00000
    52    ssd    5.82190          osd.52                up   1.00000  1.00000
    53    ssd    5.82190          osd.53                up   1.00000  1.00000
    54    ssd    5.82190          osd.54                up   1.00000  1.00000
    55    ssd    5.82190          osd.55                up   1.00000  1.00000
    56    ssd    5.82190          osd.56                up   1.00000  1.00000
    57    ssd    5.82190          osd.57                up   1.00000  1.00000
    58    ssd    5.82190          osd.58                up   1.00000  1.00000
    59    ssd    5.82190          osd.59                up   1.00000  1.00000
    60    ssd    5.82190          osd.60                up   1.00000  1.00000
    61    ssd    5.82190          osd.61                up   1.00000  1.00000
    62    ssd    5.82190          osd.62                up   1.00000  1.00000
    63    ssd    5.82190          osd.63                up   1.00000  1.00000
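
The expected layout here is 4 hosts x 8 NVMe x 2 OSDs = 64 OSDs, or 16 per host, which matches the tree above. One way to cross-check this without reading the listing by eye is to parse the JSON form of the same command. This is only a minimal sketch: it assumes the JSON from ceph osd tree -f json has a top-level "nodes" list whose entries carry "id", "name", "type" and (for buckets) "children". The helper names and the trimmed sample data are hypothetical and are not taken from this cluster.

```python
import json

# Assumption from the post: 8 NVMe drives per host, 2 OSDs per drive.
EXPECTED_PER_HOST = 8 * 2


def osds_per_host(tree):
    """Map each host bucket name to the sorted OSD ids directly beneath it."""
    by_id = {node["id"]: node for node in tree["nodes"]}
    hosts = {}
    for node in tree["nodes"]:
        if node["type"] != "host":
            continue
        hosts[node["name"]] = sorted(
            child
            for child in node.get("children", [])
            if by_id.get(child, {}).get("type") == "osd"
        )
    return hosts


def short_hosts(tree, expected=EXPECTED_PER_HOST):
    """Return {host: osd_count} for hosts whose OSD count differs from expected."""
    return {
        name: len(osds)
        for name, osds in osds_per_host(tree).items()
        if len(osds) != expected
    }


# Hypothetical, heavily trimmed sample in the assumed JSON shape.
sample = json.loads("""
{"nodes": [
  {"id": -1, "name": "default", "type": "root", "children": [-3, -5]},
  {"id": -3, "name": "node-a", "type": "host", "children": [0, 1]},
  {"id": -5, "name": "node-b", "type": "host", "children": [2]},
  {"id": 0, "name": "osd.0", "type": "osd"},
  {"id": 1, "name": "osd.1", "type": "osd"},
  {"id": 2, "name": "osd.2", "type": "osd"}
], "stray": []}
""")

if __name__ == "__main__":
    print(osds_per_host(sample))
    print(short_hosts(sample, expected=2))
```

On a real cluster, the output of ceph osd tree -f json could be saved to a file and loaded with json.load in place of the sample. An empty result from short_hosts would mean every host carries the expected 16 OSDs in the CRUSH hierarchy, which would point at the viewer rather than at the map itself.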

Any suggestions would be greatly appreciated.

Thanks,

Marco

_______________________________________________ ceph-users mailing list -- ceph-users@xxxxxxx To unsubscribe send an email to ceph-users-leave@xxxxxxx