Thanks to all! I might have found the reason.

It looks like it is related to the bug below.

https://bugs.launchpad.net/nova/+bug/1773449

At 2018-12-04 23:42:15, "Ouyang Xu" <xu.ouyang@xxxxxxx> wrote:

Hi linghucongsong:

I have run into this issue before. You can try to fix it as follows:

1. Use rbd lock ls to find the lock held on the VM's disk image.

2. Use rbd lock rm to remove that lock.

3. Start the VM again.
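Steps 1 and 2 can be scripted roughly as below. This is a minimal sketch, not a tested tool. It assumes the rbd CLI is on PATH and that `rbd lock ls --format json` prints either an object keyed by lock ID (the Luminous-era shape, as far as I know) or a list of objects with "id" and "locker" fields (some later releases); both shapes are handled. The image spec you pass in (for example a Nova disk such as vms/INSTANCE_UUID_disk) is a hypothetical placeholder. Only remove a lock once you are sure the client that held it is really gone.

```python
import json
import shlex
import subprocess


def parse_rbd_locks(json_text):
    """Turn `rbd lock ls --format json` output into (lock_id, locker) pairs.

    ASSUMPTION: older releases emit an object keyed by lock ID, e.g.
    {"auto 139643345791728": {"locker": "client.4485", "address": "..."}},
    while some later releases emit a list of {"id", "locker", "address"}
    objects. Both shapes are accepted here.
    """
    data = json.loads(json_text)
    if isinstance(data, dict):
        return [(lock_id, info["locker"]) for lock_id, info in data.items()]
    return [(entry["id"], entry["locker"]) for entry in data]


def lock_rm_commands(image_spec, locks):
    """Build one `rbd lock rm <image-spec> <lock-id> <locker>` argv per lock."""
    return [["rbd", "lock", "rm", image_spec, lock_id, locker]
            for lock_id, locker in locks]


def remove_stale_locks(image_spec, dry_run=True):
    """Step 1: list the image's locks. Step 2: remove them (unless dry_run)."""
    listing = subprocess.run(
        ["rbd", "lock", "ls", "--format", "json", image_spec],
        capture_output=True, text=True, check=True,
    )
    for cmd in lock_rm_commands(image_spec, parse_rbd_locks(listing.stdout)):
        # The lock ID usually contains a space ("auto <n>"), so the command
        # is kept as an argv list rather than a shell string.
        print(" ".join(shlex.quote(arg) for arg in cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)
```

With the default dry_run=True, remove_stale_locks only prints the rbd lock rm commands so you can check them before running anything; step 3 (starting the VM) is still done with your usual OpenStack tooling.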

Hope that helps.

regards,

Ouyang

On 2018/12/4 4:48 PM, linghucongsong wrote:

Hi all!

I have a Ceph test environment that uses Ceph with OpenStack. Some VMs run on the OpenStack cloud. It is just a test environment.

My Ceph version is 12.2.4. Yesterday I rebooted all the Ceph hosts without first shutting down the VMs on OpenStack.

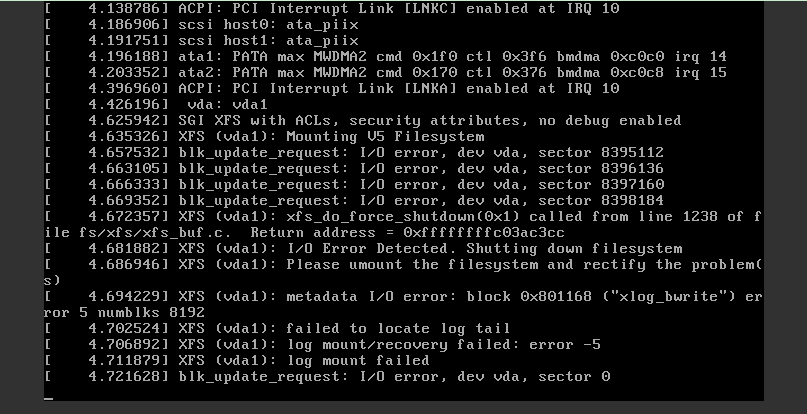

When all the hosts had booted and Ceph was healthy again, I found that none of the VMs could start. All of them show the XFS error in the screenshot. Even xfs_repair could not repair the problem.

It is just a test environment, so the data is not important to me. I know Ceph 12.2.4 is not stable enough, but how can it have such serious problems? Other people should watch out for this. Thanks to all. :)

_______________________________________________ ceph-users mailing list ceph-users@xxxxxxxxxxxxxx http://lists.ceph.com/listinfo.cgi/ceph-users-ceph.com
