On Tue, 4 Dec 2018 at 09:49, linghucongsong <linghucongsong@xxxxxxx> wrote:

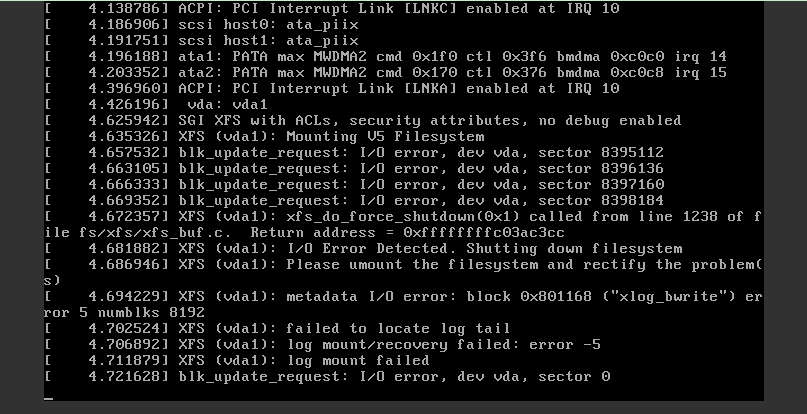

Hi all! I have a Ceph test environment that uses Ceph with OpenStack. There are some VMs running on OpenStack. It is just a test environment. My Ceph version is 12.2.4. Yesterday I rebooted all the Ceph hosts; before doing so I did not shut down the VMs on OpenStack. When all the hosts had booted up and Ceph had become healthy, I found that none of the VMs could start. All the VMs show the XFS error shown. Even xfs_repair cannot repair this problem.

So you removed the underlying storage while the machines were running. What did you expect would happen?

If you do this to a physical machine, or guests running with some other kind of remote storage like iSCSI, what do you think will happen to running machines in that case?

May the most significant bit of your life be positive.

_______________________________________________ ceph-users mailing list ceph-users@xxxxxxxxxxxxxx http://lists.ceph.com/listinfo.cgi/ceph-users-ceph.com