I've just seen that the send_pg_creates command is obsolete and was already removed in commit 6cbdd6750cf330047d52817b9ee9af31a7d318ae.

So I guess it doesn't do too much :)

Tnx,

Ivan

On Wed, Oct 5, 2016 at 2:37 AM, Ivan Grcic <ivan.grcic@xxxxxxxxx> wrote:

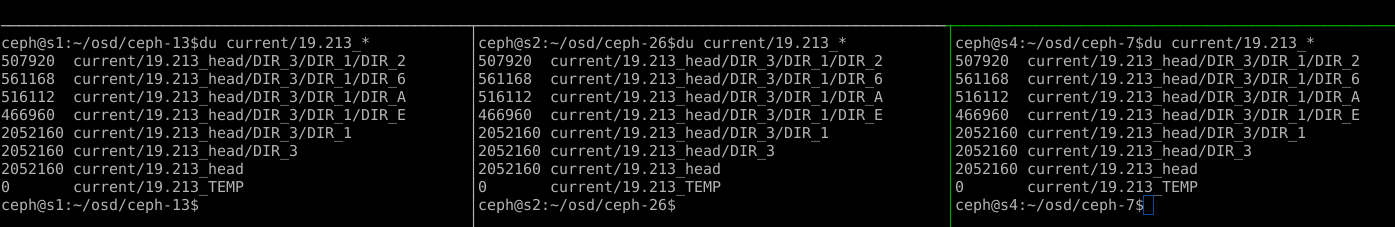

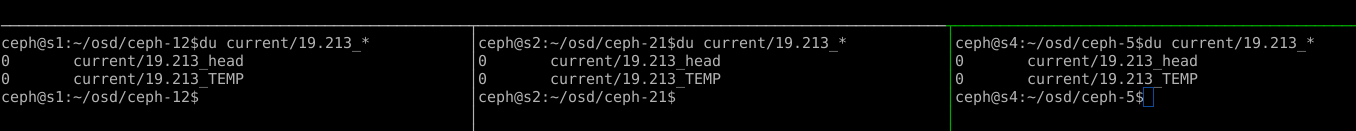

Hi everybody,

I am trying to understand why I keep getting remapped+wait_backfill pg states when doing some pg shuffling in the cluster. Sometimes it happens after just a small reweight-by-utilization operation, and sometimes when I modify the crushmap (a bigger movement of data).

Looking at `ceph health detail` and investigating some of the pgs with `ceph pg <pg_id> query`, I can see that all of the "acting" pgs are healthy and of the same size. The "up" pgs do have a pg folder created, but it doesn't contain any data (empty head + TEMP).

I don't have any (near)full pgs, and `ceph pg debug unfound_objects_exist` returns FALSE. The cluster is also 100% functional (but in WARN state), and if I write some data, I can see the acting pgs happily syncing with each other.

Acting pgs / Up pgs: see the two screenshots above.
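To see which OSDs hold the empty "up" copies, one can compare the `up` and `acting` arrays in the JSON printed by `ceph pg <pg_id> query`. Below is a minimal Python sketch, assuming the query output has top-level `state`, `up` and `acting` keys; the sample JSON and the `summarize_pg` helper are hypothetical, made up for illustration and not taken from this cluster.

```python
import json

# Hypothetical, trimmed-down `ceph pg <pg_id> query` output (assumed shape);
# real output contains many more fields (info, peer_info, recovery_state, ...).
SAMPLE_QUERY = """
{
  "state": "active+remapped+wait_backfill",
  "up": [4, 7, 2],
  "acting": [1, 7, 3]
}
"""


def summarize_pg(query_json: str) -> dict:
    """Split the pg state into flags and compare the up and acting sets."""
    pg = json.loads(query_json)
    flags = pg["state"].split("+")
    up, acting = pg["up"], pg["acting"]
    return {
        "flags": flags,
        # OSDs in "up" but not in "acting": they should hold the pg,
        # but are not serving it yet (backfill targets).
        "backfill_targets": [osd for osd in up if osd not in acting],
        # OSDs serving the pg now that are not in "up"; they are expected
        # to give it up once backfill completes.
        "leaving": [osd for osd in acting if osd not in up],
        "remapped": "remapped" in flags,
    }


if __name__ == "__main__":
    print(summarize_pg(SAMPLE_QUERY))
```

With the sample above, the empty "up" copies would be on osd.4 and osd.2, while osd.1 and osd.3 still serve the data.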

I can simply recover from this by following these steps:

- set noout, norecover, norebalance to avoid unnecessary data movement

- stop all actingbackfill pgs (acting + up) at the same time

- remove empty "up" pgs

- start all the pgs again

- unset noout, norecover, norebalance
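The steps above can be sketched as a dry-run plan generator that only builds the list of commands and never runs them. `ceph osd set`/`ceph osd unset` with noout, norecover and norebalance are real Ceph commands; stopping and starting OSDs via `systemctl ... ceph-osd@<id>` assumes a systemd-based deployment; the removal step is left as a comment placeholder because the exact tool and flags depend on the Ceph release and objectstore. The `recovery_plan` helper and the example pg/OSD ids are hypothetical.

```python
FLAGS = ("noout", "norecover", "norebalance")


def recovery_plan(pgid: str, up: list, acting: list) -> list:
    """Dry-run command list for the manual workaround (hypothetical helper)."""
    # actingbackfill = union of the acting and up sets (sorted for a stable order)
    involved = sorted(set(acting) | set(up))
    # "up" OSDs that are not acting hold only the empty pg folder
    empty_copies = [osd for osd in up if osd not in acting]

    plan = [f"ceph osd set {flag}" for flag in FLAGS]
    # Assumes systemd units named ceph-osd@<id>
    plan += [f"systemctl stop ceph-osd@{osd}" for osd in involved]
    # Placeholder only: the removal tool/flags depend on your Ceph version
    plan += [f"# remove empty copy of pg {pgid} on osd.{osd}" for osd in empty_copies]
    plan += [f"systemctl start ceph-osd@{osd}" for osd in involved]
    plan += [f"ceph osd unset {flag}" for flag in FLAGS]
    return plan


if __name__ == "__main__":
    for line in recovery_plan("1.2f", up=[4, 7, 2], acting=[1, 7, 3]):
        print(line)
```

Printing the plan first makes it easy to double-check which OSDs will be stopped and which copies are considered empty before touching anything by hand.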

After these steps, new "up" pgs are recreated in the remapped+backfilling state and are marked active+clean after some time.

I have also tried to "kick the cluster in the head" with `ceph pg send_pg_creates` (as suggested here: https://www.mail-archive.com/ceph-devel@xxxxxxxxxxxxxxx/msg12287.html), but I get:

    $ ceph pg send_pg_creates
    Error EINVAL: (22) Invalid argument

BTW, what is send_pg_creates really supposed to do?

Does anyone have a hint why this is occurring?

Thank you,

Ivan

Jewel 12.2.2, size 3, min_size 2

I haven't been playing with tunables:

    # begin crush map
    tunable choose_local_tries 0
    tunable choose_local_fallback_tries 0
    tunable choose_total_tries 50
    tunable chooseleaf_descend_once 1
    tunable chooseleaf_vary_r 1
    tunable straw_calc_version 1

    # standard ruleset
    rule replicated_ruleset {
        ruleset 0
        type replicated
        min_size 1
        max_size 10
        step take dc
        step chooseleaf firstn 0 type host
        step emit
    }

_______________________________________________ ceph-users mailing list ceph-users@xxxxxxxxxxxxxx http://lists.ceph.com/listinfo.cgi/ceph-users-ceph.com