Hi John, thanks for the help. It was related to the Calamari branch of Diamond not working with the latest version of Ceph.

On Mon, Feb 1, 2016 at 11:22 PM, John Spray <jspray@xxxxxxxxxx> wrote:

The "assert path[-1] == 'type'" failure is the error you get when using the Calamari branch of Diamond with Ceph Infernalis or later (where new fields were added to the perf schema output). No idea whether anyone has worked on updating Calamari+Diamond for the latest Ceph.
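To make the explanation above concrete, here is a minimal sketch of the failure mode. It assumes the collector walks the `perf schema` JSON down to its leaves and expects every leaf key to be `type`; it is not the actual Calamari collector code. `flatten`, `counter_types_strict` and `counter_types_tolerant` are hypothetical names, and the extra `description` field in `NEW_SCHEMA` is only an illustrative stand-in for the fields newer Ceph releases added.

```python
def flatten(tree, path=()):
    """Yield (path, value) for every non-dict leaf of a nested dict."""
    for key, value in sorted(tree.items()):
        if isinstance(value, dict):
            for leaf in flatten(value, path + (key,)):
                yield leaf
        else:
            yield path + (key,), value


def counter_types_strict(schema):
    """Old assumption (hypothetical reduction): 'type' is the only per-counter field."""
    types = {}
    for path, value in flatten(schema):
        assert path[-1] == 'type'  # fails as soon as a counter carries another field
        types[path[:-1]] = value
    return types


def counter_types_tolerant(schema):
    """Keep each counter's 'type' field and skip any other fields."""
    types = {}
    for path, value in flatten(schema):
        if path[-1] == 'type':
            types[path[:-1]] = value
    return types


# Illustrative schemas: the counter name, type value and description are made up.
OLD_SCHEMA = {"mon": {"num_sessions": {"type": 2}}}
NEW_SCHEMA = {"mon": {"num_sessions": {"type": 2, "description": "Open sessions"}}}

if __name__ == "__main__":
    print(counter_types_strict(OLD_SCHEMA))
    print(counter_types_tolerant(NEW_SCHEMA))
    try:
        counter_types_strict(NEW_SCHEMA)
    except AssertionError:
        print("AssertionError, as in the traceback")
```

Skipping non-`type` fields, as the tolerant variant does, is one possible fix: the counter-to-type mapping stays the same while whatever extra metadata a newer schema carries is ignored.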

John


On Mon, Feb 1, 2016 at 12:09 PM, Daniel Rolfe <daniel.rolfe.au@xxxxxxxxx> wrote:

> I can see the asok files are there:
>
> root@ceph1:/var/run/ceph# ls -la
> total 0
> drwxrwx--- 2 ceph ceph 80 Feb 1 10:51 .
> drwxr-xr-x 18 root root 640 Feb 1 10:52 ..
> srwxr-xr-x 1 ceph ceph 0 Feb 1 10:51 ceph-mon.ceph1.asok
> srwxr-xr-x 1 root root 0 Jan 27 15:08 ceph-osd.0.asok
> root@ceph1:/var/run/ceph#
>
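The `ls -la` check above can also be scripted. A minimal sketch, assuming the default `/var/run/ceph` directory and the `*.asok` naming shown in the listing; `find_admin_sockets` is a hypothetical helper, not part of Diamond or Ceph. It only confirms that a socket file exists, not that a daemon is still listening on it, which matters given hnuzhoulin's report of mon asok files going missing while the cluster kept running.

```python
import glob
import os
import stat


def find_admin_sockets(run_dir="/var/run/ceph"):
    """Map daemon name (e.g. 'ceph-mon.ceph1') to the path of its *.asok socket.

    Hypothetical helper; assumes the default run directory and file naming.
    """
    sockets = {}
    for path in sorted(glob.glob(os.path.join(run_dir, "*.asok"))):
        try:
            mode = os.stat(path).st_mode
        except OSError:
            continue  # file disappeared between glob() and stat()
        if stat.S_ISSOCK(mode):  # skip regular files that merely end in .asok
            name = os.path.basename(path)[:-len(".asok")]
            sockets[name] = path
    return sockets


if __name__ == "__main__":
    found = find_admin_sockets()
    if not any(name.startswith("ceph-mon.") for name in found):
        print("no monitor admin socket found")
    for name, path in sorted(found.items()):
        print(name, path)
```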

> Running Diamond in debug mode shows the following:
>
> [2016-02-01 10:55:23,774] [Thread-1] Collecting data from: NetworkCollector
> [2016-02-01 10:56:23,484] [Thread-1] Collecting data from: CPUCollector
> [2016-02-01 10:56:23,487] [Thread-6] Collecting data from: MemoryCollector
> [2016-02-01 10:56:23,489] [Thread-7] Collecting data from: SockstatCollector
> [2016-02-01 10:56:23,768] [Thread-1] Collecting data from: CephCollector
> [2016-02-01 10:56:23,768] [Thread-1] gathering service stats for /var/run/ceph/ceph-mon.ceph1.asok
> [2016-02-01 10:56:24,094] [Thread-1] Traceback (most recent call last):
>   File "/usr/lib/pymodules/python2.7/diamond/collector.py", line 412, in _run
>     self.collect()
>   File "/usr/share/diamond/collectors/ceph/ceph.py", line 464, in collect
>     self._collect_service_stats(path)
>   File "/usr/share/diamond/collectors/ceph/ceph.py", line 450, in _collect_service_stats
>     self._publish_stats(counter_prefix, stats, schema, GlobalName)
>   File "/usr/share/diamond/collectors/ceph/ceph.py", line 305, in _publish_stats
>     assert path[-1] == 'type'
> AssertionError
>
> [2016-02-01 10:56:24,096] [Thread-8] Collecting data from: LoadAverageCollector
> [2016-02-01 10:56:24,098] [Thread-1] Collecting data from: VMStatCollector
> [2016-02-01 10:56:24,099] [Thread-1] Collecting data from: DiskUsageCollector
> [2016-02-01 10:56:24,104] [Thread-9] Collecting data from: DiskSpaceCollector
>
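To see which schema fields a daemon actually reports, the schema can be dumped through the admin socket with `ceph --admin-daemon <path> perf schema`. A minimal sketch, assuming the two-level section/counter layout of the schema JSON; `perf_schema` and `extra_schema_fields` are hypothetical helpers, and the injectable `run` parameter exists only so the sketch can be exercised without a live cluster.

```python
import json
import subprocess


def perf_schema(asok_path, run=subprocess.check_output):
    """Return the parsed 'perf schema' JSON from one daemon's admin socket.

    Hypothetical helper; `run` defaults to subprocess.check_output and can be
    replaced by a fake for testing.
    """
    out = run(["ceph", "--admin-daemon", asok_path, "perf", "schema"])
    return json.loads(out)


def extra_schema_fields(schema):
    """Return the set of per-counter field names other than 'type'.

    Assumes the layout {section: {counter: {field: value, ...}, ...}, ...}.
    """
    extra = set()
    for section in schema.values():
        for counter in section.values():
            extra.update(k for k in counter if k != "type")
    return extra
```

For example, `sorted(extra_schema_fields(perf_schema("/var/run/ceph/ceph-mon.ceph1.asok")))`, run on the monitor host with permission to open the socket, lists any per-counter fields besides `type`; a non-empty result would be consistent with the explanation of the assertion given at the top of the thread.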

> Checking the md5 of the file returns the following:
>
> root@ceph1:/var/run/ceph# md5sum /usr/share/diamond/collectors/ceph/ceph.py
> aeb3915f8ac7fdea61495805d2c99f33 /usr/share/diamond/collectors/ceph/ceph.py
> root@ceph1:/var/run/ceph#
>

> I've found that replacing the ceph.py file with the one below stops the Diamond error:
>
> https://raw.githubusercontent.com/BrightcoveOS/Diamond/master/src/collectors/ceph/ceph.py
>
> root@ceph1:/usr/share/diamond/collectors/ceph# md5sum ceph.py
> 13ac74ce0df39a5def879cb5fc530015 ceph.py
>

> [2016-02-01 11:14:33,116] [Thread-42] Collecting data from: MemoryCollector
> [2016-02-01 11:14:33,117] [Thread-1] Collecting data from: CPUCollector
> [2016-02-01 11:14:33,123] [Thread-43] Collecting data from: SockstatCollector
> [2016-02-01 11:14:35,453] [Thread-1] Collecting data from: CephCollector
> [2016-02-01 11:14:35,454] [Thread-1] checking /var/run/ceph/ceph-mon.ceph1.asok
> [2016-02-01 11:14:35,552] [Thread-1] checking /var/run/ceph/ceph-osd.0.asok
> [2016-02-01 11:14:35,685] [Thread-44] Collecting data from: LoadAverageCollector
> [2016-02-01 11:14:35,686] [Thread-1] Collecting data from: VMStatCollector
> [2016-02-01 11:14:35,687] [Thread-1] Collecting data from: DiskUsageCollector
> [2016-02-01 11:14:35,692] [Thread-45] Collecting data from: DiskSpaceCollector
>

> But after all that, it's still NOT working.
>
> What Diamond version are you running?
>
> I'm running Diamond version 3.4.67.

>

> On Mon, Feb 1, 2016 at 11:01 PM, Daniel Rolfe <daniel.rolfe.au@xxxxxxxxx> wrote:


>> On Mon, Feb 1, 2016 at 12:24 PM, hnuzhoulin <hnuzhoulin2@xxxxxxxxx> wrote:

>>>

>>> Yes, I fixed it in my environment.
>>> BTW, I checked the md5 of the ceph collector file. It is correct.

>>>

>>> On Sun, 31 Jan 2016 22:46:42 +0800, Daniel Rolfe <daniel.rolfe.au@xxxxxxxxx> wrote:

>>>

>>> Hi, thanks for the reply.
>>>
>>> Just to confirm, did you manage to fix this issue?
>>>
>>> I've restarted the whole Ceph cluster a few times.

>>>

>>> Sent from my iPhone

>>>

>>> On 1 Feb 2016, at 1:26 AM, hnuzhoulin <hnuzhoulin2@xxxxxxxxx> wrote:

>>>

>>> I just ran into the same problem.

>>>

>>> The problem is that my cluster was missing the mons' asok files, even
>>> though the cluster itself works well.
>>>
>>> So killing the mon process and restarting it may fix it (restarting the
>>> mon daemon with the service command may not work).

>>>

>>>

>>> On Sun, 31 Jan 2016 10:35:25 +0800, Daniel Rolfe <daniel.rolfe.au@xxxxxxxxx> wrote:

>>>

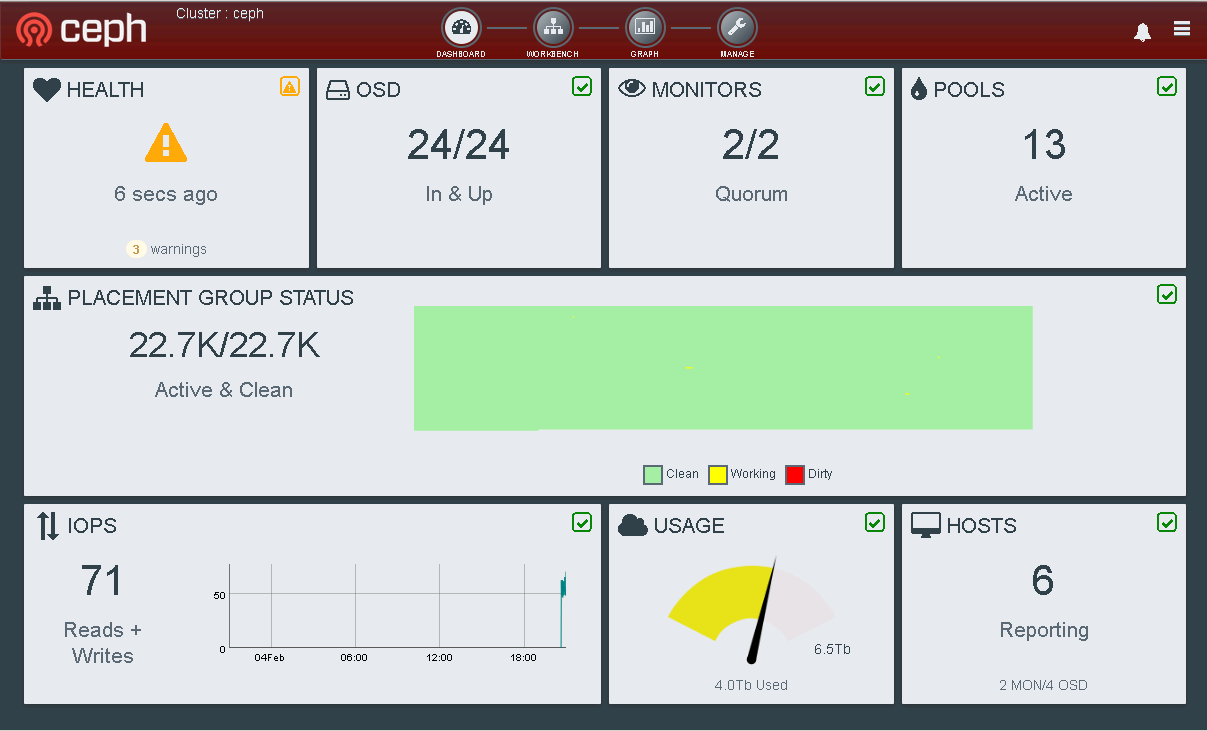

>>> I seem to be having an issue with global Ceph stats getting back to Calamari.
>>>
>>> Individual node and OSD stats are working.
>>>
>>> If anyone can point me in the right direction, that would be great.

>>>

>>> https://github.com/ceph/calamari/issues/384

>>>

>>>

>>>

>>>

>>>

>>>

>>>

>>> --

>>> -------------------------

>>> hnuzhoulin2@xxxxxxxxx

>>>

>>>

>>>

>>>

>>> --

>>> -------------------------

>>> hnuzhoulin@xxxxxxxxx

>>

>>

>

>

> ceph-users mailing list

> ceph-users@xxxxxxxxxxxxxx

> http://lists.ceph.com/listinfo.cgi/ceph-users-ceph.com

>
