I'm positive the client I sent you the log from is 0.94. We do have one client still on 0.87.
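Further down the thread, John notes that a similar bug was fixed in 0.87, so what matters is which version each *client* runs. Below is a minimal Python sketch. It assumes you have the `ceph version X.Y.Z (sha)` banner text in the format quoted later in the thread. `parse_ceph_version`, `has_fix` and `FIX_RELEASE` are hypothetical helpers. Passing the check only means a client is at or past 0.87. It does not mean this particular bug is absent.

```python
import re

# Matches the banner format quoted in this thread, e.g.
# "ceph version 0.94.3 (95cefea9fd9ab740263bf8bb4796fd864d9afe2b)".
VERSION_RE = re.compile(r"ceph version (\d+)\.(\d+)(?:\.(\d+))?")

# Hypothetical threshold: the release said to carry a fix for a *similar* bug.
FIX_RELEASE = (0, 87)


def parse_ceph_version(banner: str):
    """Return (major, minor, patch) as ints; a missing patch level becomes 0."""
    m = VERSION_RE.search(banner)
    if m is None:
        raise ValueError(f"no ceph version found in {banner!r}")
    major, minor, patch = m.groups()
    return int(major), int(minor), int(patch or 0)


def has_fix(banner: str, fixed_in=FIX_RELEASE) -> bool:
    """True if the (major, minor) release is at or after fixed_in."""
    return parse_ceph_version(banner)[:2] >= fixed_in
```

For the banner Scott posted, `has_fix("ceph version 0.94.3 (95cefea9fd9ab740263bf8bb4796fd864d9afe2b)")` returns `True`. Only major/minor are compared, so any 0.87.x point release also passes.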

On Tue, Sep 29, 2015, 6:42 AM John Spray <jspray@xxxxxxxxxx> wrote:

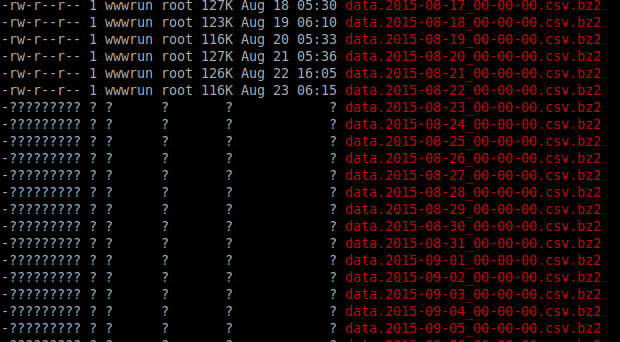

Hmm, so apparently a similar bug was fixed in 0.87. Scott, can you confirm that your *clients* were 0.94 (not just the servers)?

Thanks,
John

On Tue, Sep 29, 2015 at 11:56 AM, John Spray <jspray@xxxxxxxxxx> wrote:

Ah, this is a nice clear log!

I've described the bug here:

In the short term, you may be able to mitigate this by increasing client_cache_size (on the client) if your RAM allows it.

John

On Tue, Sep 29, 2015 at 12:58 AM, Scottix <scottix@xxxxxxxxx> wrote:

I know this is an old one, but I got a log in ceph-fuse for it.
I got this on OpenSuse 12.1, 3.1.10-1.29-desktop
Using ceph-fuse
ceph version 0.94.3 (95cefea9fd9ab740263bf8bb4796fd864d9afe2b)

I am running an rsync in the background and then doing a simple ls -la, so the log is large.

I am guessing this is the problem. The file is there, and if I list the directory again it shows up properly.

2015-09-28 16:34:21.548631 7f372effd700 3 client.28239198 ll_lookup 0x7f370d1b1c50 data.2015-08-23_00-00-00.csv.bz2
2015-09-28 16:34:21.548635 7f372effd700 10 client.28239198 _lookup concluded ENOENT locally for 100009d72a1.head(ref=4 ll_ref=5 cap_refs={} open={} mode=42775 size=0/0 mtime=2015-09-28 05:57:57.259306 caps=pAsLsXsFs(0=pAsLsXsFs) COMPLETE parents=0x7f3732ff97c0 0x7f370d1b1c50) dn 'data.2015-08-23_00-00-00.csv.bz2'

It seems to show up more when multiple things are accessing the ceph mount, just my observations.

Best,
Scott

On Tue, Mar 3, 2015 at 3:05 PM Scottix <scottix@xxxxxxxxx> wrote:

Ya, we are not at 0.87.1 yet, possibly tomorrow. I'll let you know if it still reports the same.
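Scott's two log lines show an `ll_lookup` for the file, followed by `_lookup concluded ENOENT locally`. In the second line, the parent directory inode is flagged `COMPLETE`. Going by the message wording, the client answered from its own cache rather than asking the MDS. Below is a rough Python sketch for pulling every such local miss out of a large ceph-fuse debug log. The regex is inferred from this single 0.94.3 sample, so other versions may format the line differently. `LocalMiss` and `find_local_misses` are hypothetical names.

```python
import re
from dataclasses import dataclass

# Inferred from the one 0.94.3 sample line above; an assumption, not a spec.
LOOKUP_RE = re.compile(
    r"^(?P<ts>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}\.\d+)\s+\S+\s+\d+\s+"
    r"(?P<client>client\.\d+)\s+_lookup concluded ENOENT locally for "
    r"(?P<dir_ino>[0-9a-f]+)\.head\((?P<state>.*)\) dn '(?P<name>[^']*)'$"
)


@dataclass
class LocalMiss:
    timestamp: str
    client: str
    dir_ino: str        # inode number of the parent directory
    name: str           # dentry the lookup was for
    dir_complete: bool  # parent directory flagged COMPLETE in the client cache


def find_local_misses(lines):
    """Yield a LocalMiss for each lookup the client answered ENOENT locally."""
    for line in lines:
        m = LOOKUP_RE.match(line.rstrip("\n"))
        if m is None:
            continue  # not a local-ENOENT line; skip it
        yield LocalMiss(
            timestamp=m["ts"],
            client=m["client"],
            dir_ino=m["dir_ino"],
            name=m["name"],
            dir_complete=re.search(r"\bCOMPLETE\b", m["state"]) is not None,
        )
```

Because it accepts any iterable of lines, you can pass an open file object directly, e.g. `find_local_misses(open("ceph-fuse.log"))`, where the path is hypothetical. You can then check whether the missing names really exist on the mount.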

Thanks John,
--
Scottie

On Tue, Mar 3, 2015 at 2:57 PM John Spray <john.spray@xxxxxxxxxx> wrote:

On 03/03/2015 22:35, Scottix wrote:

> I was testing a little bit more and decided to run the cephfs-journal-tool
>
> I ran across some errors
>
> $ cephfs-journal-tool journal inspect
> 2015-03-03 14:18:54.453981 7f8e29f86780 -1 Bad entry start ptr (0x2aeb0000f6) at 0x2aeb32279b
> 2015-03-03 14:18:54.539060 7f8e29f86780 -1 Bad entry start ptr (0x2aeb000733) at 0x2aeb322dd8
> 2015-03-03 14:18:54.584539 7f8e29f86780 -1 Bad entry start ptr (0x2aeb000d70) at 0x2aeb323415
> 2015-03-03 14:18:54.669991 7f8e29f86780 -1 Bad entry start ptr (0x2aeb0013ad) at 0x2aeb323a52
> 2015-03-03 14:18:54.707724 7f8e29f86780 -1 Bad entry start ptr (0x2aeb0019ea) at 0x2aeb32408f
> Overall journal integrity: DAMAGED
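To make the pointers in this output easier to compare, here is a small Python sketch. It extracts each `(start ptr, at)` pair and computes the byte gap between the two values. It reads the first value as the start pointer recorded in the entry and the second as the offset where the tool was reading. That reading is an assumption based on the message wording. `bad_entry_pointers` and `offset_gaps` are hypothetical helpers.

```python
import re

# Matches "Bad entry start ptr (X) at Y" lines from `cephfs-journal-tool journal inspect`.
BAD_PTR_RE = re.compile(r"Bad entry start ptr \((0x[0-9a-fA-F]+)\) at (0x[0-9a-fA-F]+)")


def bad_entry_pointers(lines):
    """Return (recorded_ptr, read_pos) integer pairs, in output order."""
    pairs = []
    for line in lines:
        m = BAD_PTR_RE.search(line)  # search, so "> " quote prefixes are tolerated
        if m:
            pairs.append((int(m.group(1), 16), int(m.group(2), 16)))
    return pairs


def offset_gaps(pairs):
    """Distance in bytes from each recorded pointer to the position it was read at."""
    return [pos - ptr for ptr, pos in pairs]
```

For the five lines quoted above, every gap is the same value: 0x3226a5 bytes.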

I expect this is http://tracker.ceph.com/issues/9977, which is fixed in master.

You are in *very* bleeding-edge territory here, and I'd suggest using the latest development release if you want to experiment with the latest CephFS tooling.

Cheers,
John

_______________________________________________
ceph-users mailing list
ceph-users@xxxxxxxxxxxxxx
http://lists.ceph.com/listinfo.cgi/ceph-users-ceph.com