Hi, Sage & Folks

As our osd sometimes crashed when the load of the cluster is heavy. The two log snippet is as below:

Crash Log1:

-2> 2014-07-31 22:25:48.852417 7fa7c569d700 2 -- 10.193.207.180:6816/3057244 >> 10.193.207.182:6898/2075802 pipe(0x55bad180 sd=99 :60119 s=1 pgs=15831 cs=4 l=0 c=0x3083e160). discarding previously sent 76 pg_notify(14.2d5cs3(35) epoch 3703) v5

-1> 2014-07-31 22:25:48.852499 7fa7c569d700 2 -- 10.193.207.180:6816/3057244 >> 10.193.207.182:6898/2075802 pipe(0x55bad180 sd=99 :60119 s=1 pgs=15831 cs=4 l=0 c=0x3083e160). discarding previously sent 77 pg_notify(14.83es3(42) epoch 3703) v5

0> 2014-07-31 22:25:48.865930 7fa7c569d700 -1 msg/Pipe.cc: In function 'int Pipe::connect()' thread 7fa7c569d700 time 2014-07-31 22:25:48.852589 msg/Pipe.cc: 1080: FAILED assert(m)

ceph version 0.80.4 (7c241cfaa6c8c068bc9da8578ca00b9f4fc7567f)

1: (Pipe::connect()+0x3f83) [0xb26043]

2: (Pipe::writer()+0x9f3) [0xb2eec3]

3: (Pipe::Writer::entry()+0xd) [0xb3247d]

4: /lib64/libpthread.so.0() [0x3ddca07851]

5: (clone()+0x6d) [0x3ddc6e890d]

Crash Log2:

-278> 2014-08-20 11:04:28.609192 7f89636c8700 10 osd.11 783 OSD::ms_get_authorizer type=osd

-277> 2014-08-20 11:04:28.609783 7f89636c8700 2 -- 10.193.207.117:6816/44281 >> 10.193.207.125:6804/2022817 pipe(0x7ef2280 sd=105 :42657 s=1 pgs=236754 cs=4 l=0 c=0x44318c0). got newly_acked_seq 546 vs out_seq 0

-276> 2014-08-20 11:04:28.609810 7f89636c8700 2 -- 10.193.207.117:6816/44281 >> 10.193.207.125:6804/2022817 pipe(0x7ef2280 sd=105 :42657 s=1 pgs=236754 cs=4 l=0 c=0x44318c0). discarding previously sent 1 osd_map(727..755 src has 1..755) v3

-275> 2014-08-20 11:04:28.609859 7f89636c8700 2 -- 10.193.207.117:6816/44281 >> 10.193.207.125:6804/2022817 pipe(0x7ef2280 sd=105 :42657 s=1 pgs=236754 cs=4 l=0 c=0x44318c0). discarding previously sent 2 pg_notify(1.2b(22),2.2c(23) epoch 755) v5

2014-08-20 11:04:28.608141 7f89629bb700 0 -- 10.193.207.117:6816/44281 >> 10.193.207.125:6804/2022817 pipe(0x7ef2280 sd=134 :6816 s=2 pgs=236754 cs=3 l=0 c=0x44318c0).fault, initiating reconnect

2014-08-20 11:04:28.609192 7f89636c8700 10 osd.11 783 OSD::ms_get_authorizer type=osd

2014-08-20 11:04:28.666895 7f89636c8700 -1 msg/Pipe.cc: In function 'int Pipe::connect()' thread 7f89636c8700 time 2014-08-20 11:04:28.618536 msg/Pipe.cc: 1080: FAILED assert(m)

I think there may be some bug in Pipe.cc file. After reading the source code, and I find a obvious discrepancy in two place in the file:

Taking the newest code base in github as example(our cluster is v0.80.4, but the part of the file has not been changed):

In file src/msg/simple/Pipe.cc

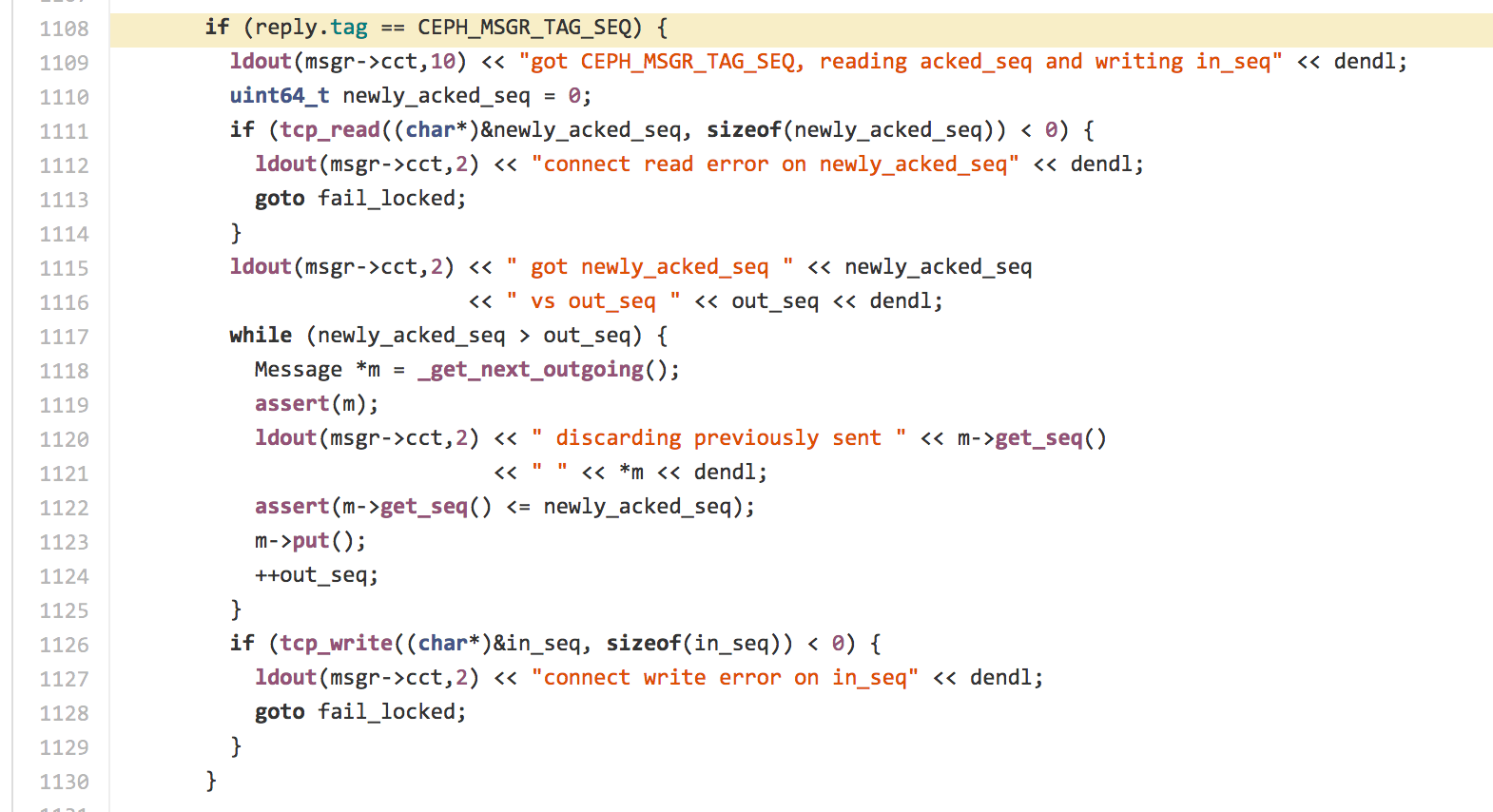

In Pipe::connect(), from Line1108 ~ Line1130:

It is when the client get the CEPH_MSGR_TAG_SEQ reply, it will reuse the connection, and discard the the previous sent. But when coming to this routine, out_seq is 0, it will do much more loop to discard the previous sent than expected. So, the when m is null in the loop, the osd will crash.

Reading the corresponding sever side source code in the same file.

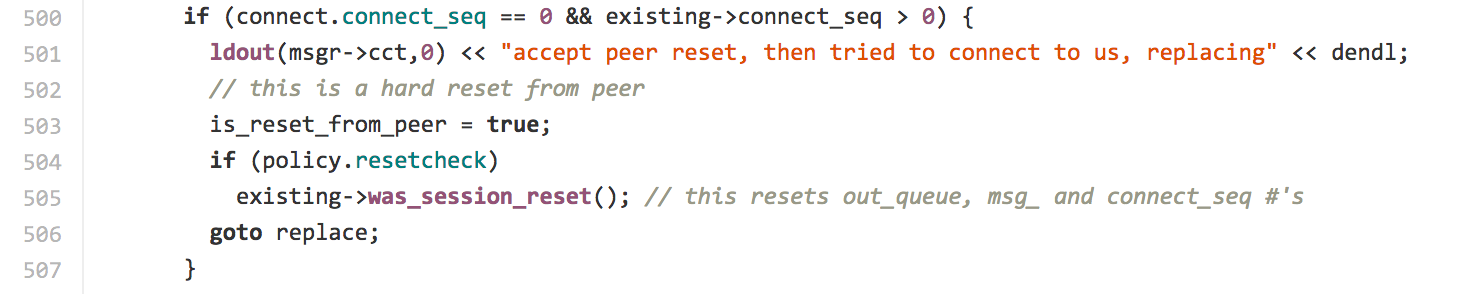

Pipe::accept(), Line500 ~ Line507:

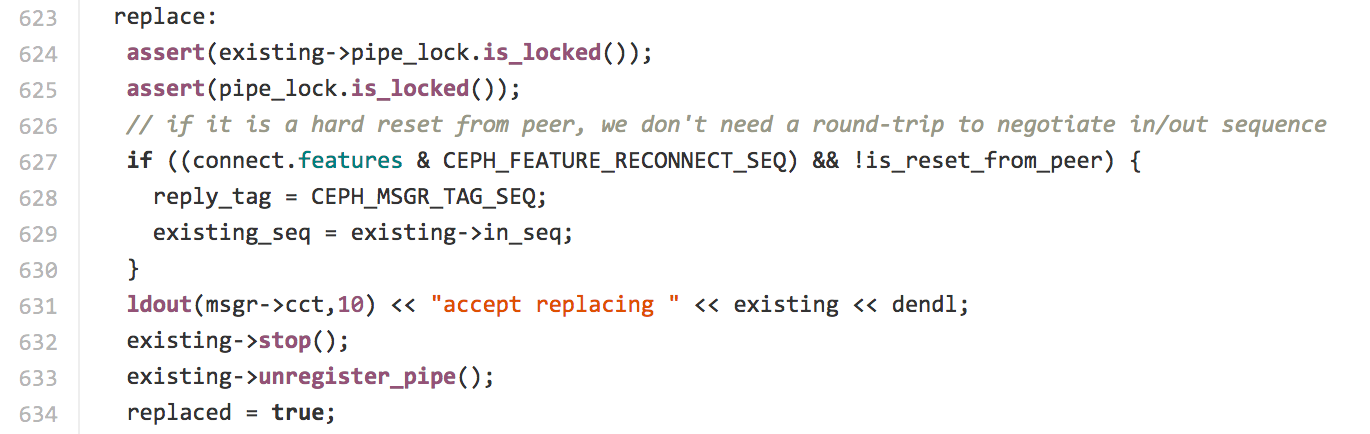

And Pipe::accept(), Line623 ~ Line634:

From the code, when the server side get the hard reset request, it will mark

Line503: is_reset_from_peer = true

then goes to replace routine.

But in the replace routine:

Line627: if ((connect.features & CEPH_FEATURE_RECONNECT_SEQ) && !is_reset_from_peer)

It imply when the is_reset_from_peer is false the server side then reply the tag CEPH_MSGR_TAG_SEQ, which implies that the reply is for hard reset. Which conflict with Line500 ~ Line507. I think the correct _expression_ in Line 627 should be:

Line627: if ((connect.features & CEPH_FEATURE_RECONNECT_SEQ) && is_reset_from_peer)

Without the exclamation mark.

I think it may have something to do with our crash issue. I don’t know if I mis-understand the source code or not. If it is not the truth, please feel free to inform me.

Thanks

Wenjun

_______________________________________________ ceph-users mailing list ceph-users@xxxxxxxxxxxxxx http://lists.ceph.com/listinfo.cgi/ceph-users-ceph.com